What is Apache Spark Streaming?

Apache Spark Streaming is a component of Apache Spark for real-time data processing. It processes large data streams from sources like Apache Kafka®, Flume, or TCP sockets, and outputs data to file systems, databases, or dashboards.

Spark Streaming breaks down data streams into small batches for processing. This micro-batch architecture ensures high throughput and fault tolerance, making it suitable for processing continuous data flows.
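To make the micro-batch idea concrete, here is a minimal plain-Python sketch (not Spark code) that slices a stream of timestamped records into fixed-interval batches. The `micro_batches` helper and the sample events are hypothetical and exist only for illustration. Unlike Spark, the sketch simply omits intervals that contain no data.

```python
from collections import defaultdict

def micro_batches(events, batch_interval):
    """Group (timestamp_seconds, value) pairs into fixed-interval batches.

    Returns a list of (batch_start, [values]) sorted by batch start time.
    """
    batches = defaultdict(list)
    for timestamp, value in events:
        # Floor division maps each timestamp to the start of its interval.
        batch_start = (timestamp // batch_interval) * batch_interval
        batches[batch_start].append(value)
    return sorted(batches.items())

# Hypothetical stream: (arrival time in seconds, payload)
events = [(0.2, "a"), (0.9, "b"), (1.1, "c"), (2.5, "d"), (2.7, "e")]

for start, values in micro_batches(events, batch_interval=1):
    print(f"batch starting at {start}s: {values}")
```

Each batch is then processed as one small, bounded dataset, which is what lets the streaming engine reuse Spark's batch machinery.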

This streaming framework provides features for scalable and flexible processing of unbounded datasets. It supports tasks like window operations, transformations, and joins in a real-time context. Additionally, Spark’s libraries for machine learning, graph computations, and SQL operations are fully accessible within Spark Streaming applications.

Key concepts in Spark Streaming

There are several key concepts that users must understand to build real-time processing applications with Spark Streaming:

- Discretized streams (DStreams): DStreams are the primary abstraction in Spark Streaming, representing continuous streams of data. Internally, a DStream is a sequence of resilient distributed datasets (RDDs), which are immutable collections of data partitioned across a cluster. DStreams can be created from various input sources, such as Apache Kafka, Flume, or Kinesis, or by applying operations on existing DStreams.

- Transformations and actions: Transformations are operations that create a new DStream from an existing one, such as map, filter, and reduceByKey. These operations are lazily evaluated, meaning they are not executed until an action is called. Actions, like print or saveAsTextFiles, trigger the execution of the transformations and produce output.

- Windowed operations: Windowed operations allow the processing of data over sliding time windows, enabling computations like rolling averages or sums. For example, a window operation can compute the word count over the last 10 seconds of data, updating every 5 seconds.

- State management: Stateful operations maintain information across batches, allowing for computations that depend on previous data. This is useful for tasks like tracking session information or maintaining running counts. Spark Streaming provides mechanisms to manage state efficiently.

- Fault tolerance: Spark Streaming ensures fault tolerance through checkpointing and lineage information. Checkpointing involves saving the state of the streaming application to reliable storage, enabling recovery in case of failures. Lineage information allows the system to recompute lost data by tracking the sequence of operations that produced the data.

- Structured Streaming: Structured Streaming is the newer stream-processing engine in Spark, built on Spark SQL, and provides a higher-level API than DStreams. It treats a data stream as an unbounded table that grows as records arrive, and allows the use of SQL queries and DataFrame operations. With replayable sources and idempotent sinks, Structured Streaming can offer end-to-end exactly-once guarantees, and it shares its API with batch processing.
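The windowed word count mentioned in the list above (a 10-second window that slides every 5 seconds) can be sketched in plain Python. This is an illustration of the idea rather than Spark code: it assumes a 5-second batch interval, so the window spans two batches and slides by one, and it only emits full windows. In the DStream API this kind of computation is typically expressed with window operations such as reduceByKeyAndWindow. The function and variable names are hypothetical.

```python
from collections import Counter

def windowed_counts(batches, window_batches, slide_batches):
    """Yield (end_index, Counter) for each full sliding window over word batches.

    batches: list of lists of words, one inner list per micro-batch.
    window_batches / slide_batches: window length and slide, measured in batches.
    """
    for end in range(window_batches, len(batches) + 1, slide_batches):
        window = batches[end - window_batches:end]
        counts = Counter(word for batch in window for word in batch)
        yield end, counts

# Hypothetical input: one list of words per 5-second batch.
batches = [["spark", "kafka"], ["spark"], ["flink", "spark"], ["kafka"]]

# 10-second window sliding every 5 seconds = 2 batches wide, sliding by 1 batch.
for end, counts in windowed_counts(batches, window_batches=2, slide_batches=1):
    print(f"window ending after batch {end}: {dict(counts)}")
```

Because consecutive windows overlap, each batch contributes to more than one result, which is why window length and slide interval are chosen as multiples of the batch interval.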

How Spark Streaming works

Here’s an overview of Apache Spark Streaming’s main functions.

Data sources and receivers

Spark Streaming ingests data from many sources through receivers. A receiver is a long-running task that captures data from an input source, such as Apache Kafka, Flume, or a custom socket stream, and stores it in Spark's memory, where it becomes the RDDs of each batch. Because receivers run as tasks within the Spark cluster, ingestion can be spread across executors to handle high data rates and scale with large-volume ingestion needs.

Transformations on DStreams

Transformations in Spark Streaming process and manipulate DStreams by applying batch-style Spark operations to each micro-batch of a stream. They include common operations like map, filter, reduce, and windowing, and they can be chained so that processing logic is composed step by step across the stream. By applying these transformations, Spark Streaming applications can run complex analytics and deliver near-real-time insights from incoming data.

Output operations

Output operations in Spark Streaming dictate how processed data is delivered at the end of transformations. These operations control where and how the results from streamed data computations are stored or sent for subsequent use. Some common output operations include saving results to databases, file systems, or pushing them to live dashboards.
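As a hedged sketch of a custom output operation, the functions below follow the commonly documented DStream pattern of calling foreachRDD on the stream and foreachPartition on each batch, so that one connection can serve a whole partition. The sink is a placeholder that only prints, and `send_partition`, `save_batch`, and `attach_output` are hypothetical names. The code takes the stream as an argument, so it assumes a DStream created elsewhere in the application.

```python
def send_partition(records):
    """Write one partition's records to an external system.

    Placeholder sink: a real application would open one connection here
    (database, message queue, HTTP endpoint) and reuse it for all records.
    Returns the number of records handled.
    """
    sent = 0
    for record in records:
        print(f"writing {record}")  # stand-in for e.g. an INSERT statement
        sent += 1
    return sent

def save_batch(batch_time, rdd):
    """Output operation for one micro-batch; skips empty batches."""
    if not rdd.isEmpty():
        # Runs send_partition once per partition, on the executors.
        rdd.foreachPartition(send_partition)

def attach_output(dstream):
    """Register save_batch as the output operation of a DStream."""
    dstream.foreachRDD(save_batch)
```

Opening the connection per partition rather than per record keeps connection overhead low, and doing it inside the partition function avoids having to serialize a connection object from the driver.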

Tips from the expert

Andrew Mills

Senior Solution Architect

Andrew Mills is an industry leader with extensive experience in open source data solutions and a proven track record in integrating and managing Apache Kafka and other event-driven architectures.

In my experience, here are tips that can help you better optimize and extend Apache Spark Streaming applications:

- Optimize micro-batch intervals with latency constraints in mind: Set micro-batch intervals based on your application’s latency tolerance. For low-latency needs, reduce the batch interval, but carefully balance it against the available processing capacity to avoid backpressure and instability.

- Use the Broadcast variable for static reference data: When processing streams that frequently reference static datasets, broadcast the static data to avoid reloading it for each batch. This optimizes lookups and prevents redundant data transfer across nodes, reducing latency in operations that join streaming data with reference datasets.

- Fine-tune Kafka and Spark integration for seamless processing: If using Kafka as a source, optimize the partition count in Kafka to align with Spark’s parallelism level. Increasing Kafka partitions and matching them with Spark’s parallelism can improve load balancing and resource utilization, leading to more efficient stream processing.

- Implement proactive monitoring with structured alerting: Go beyond basic Spark UI monitoring by setting up custom alerts on key metrics like processing time per batch, memory usage, and garbage collection frequency. Integrate with monitoring tools such as Prometheus or Grafana to capture long-term trends and quickly respond to performance degradation.

- Reduce data shuffling by minimizing transformations that involve wide dependencies: Shuffling is often a major bottleneck in Spark Streaming. Avoid wide transformations like groupBy and groupByKey where possible, prefer reduceByKey when aggregating because it combines values within each partition before the shuffle, and apply these operations to pre-filtered datasets to minimize unnecessary data movement.
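As a rough illustration of the shuffling tip, the plain-Python sketch below counts how many records would cross the network with and without per-partition combining. Combining within each partition before the shuffle is the strategy reduceByKey uses, in contrast to groupByKey, which shuffles every pair. The data and function names are hypothetical, and the sketch ignores record sizes and serialization cost.

```python
from collections import Counter

def shuffled_without_combine(partitions):
    """Records crossing the network when every (key, value) pair is shuffled."""
    return sum(len(partition) for partition in partitions)

def shuffled_with_combine(partitions):
    """Records shuffled when each partition first merges its values per key."""
    return sum(len(Counter(key for key, _ in partition)) for partition in partitions)

# Hypothetical (word, 1) pairs spread over two partitions.
partitions = [
    [("spark", 1), ("spark", 1), ("kafka", 1), ("spark", 1)],
    [("kafka", 1), ("kafka", 1), ("spark", 1)],
]

print(shuffled_without_combine(partitions))  # 7 records shuffled
print(shuffled_with_combine(partitions))     # 4 records shuffled
```

The gap widens as keys repeat more often within a partition, which is also why filtering early helps: fewer input pairs means fewer records to combine and move.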

Best practices for using Spark Streaming

Here are some best practices to keep in mind when using Apache Spark Streaming.

Optimize data serialization

Optimizing serialization involves selecting efficient encoding methods to reduce data size and improve transfer speeds. Choosing between Kryo and Java serialization based on application requirements can improve performance. Proper serialization enhances data movement within Spark’s distributed environment, reducing communication overhead.

By minimizing data size and optimizing network transfers, serialization settings aid in reducing total processing time, especially in large-scale and high-frequency data streaming contexts. Optimization should include evaluating and fine-tuning serialization configurations based on workload characteristics.
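A minimal sketch of what switching serializers can look like: the dictionary below holds standard Spark configuration keys with example values, and a small hypothetical helper, `to_submit_args`, renders them as spark-submit arguments. The values are illustrative starting points rather than recommendations. In PySpark, Python objects are pickled, so the serializer setting mainly matters for data handled on the JVM side.

```python
# Standard Spark configuration keys; the values are example choices only.
serialization_conf = {
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
    # "true" raises an error on unregistered classes; "false" is the default.
    "spark.kryo.registrationRequired": "false",
    # Upper bound for the Kryo buffer; raise it if large objects fail to serialize.
    "spark.kryoserializer.buffer.max": "128m",
}

def to_submit_args(conf):
    """Render a config dict as a flat list of spark-submit --conf arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args

print(" ".join(to_submit_args(serialization_conf)))
```

Keeping the settings in one place makes it easier to compare runs with different serialization options against the same workload.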

Monitor and tune streaming applications

Monitoring tools built into Spark, like the Spark UI, offer insights into resource usage, job execution times, and system performance bottlenecks. Regularly examining these metrics can reveal potential inefficiencies or configuration issues that require adjustments to meet performance goals.

Tuning applications involves iterative adjustment of parameters like memory utilization, parallelism, and processing logic based on observational data. By strategically fine-tuning these elements, developers can achieve stable, reliable streaming performance. Consistent monitoring provides feedback loops for adapting applications to dynamic workloads.

Handle backpressure and latency

Backpressure occurs when incoming data exceeds processing capacity, leading to delays. Implementing backpressure mechanisms helps manage data flow, ensuring stability in high-load scenarios. Tuning resource allocation and adjusting batch and receiver configurations reduce the impact on latency.

Strategies to mitigate backpressure include dynamic resource scaling and employing load-balancing techniques. By aligning system resources with data input rates and processing constraints, Spark Streaming maintains performance consistency and timely data delivery.
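The stability condition behind backpressure can be sketched in a few lines of plain Python: a stream keeps up only if batches are processed, on average, faster than they arrive. The simulation below is a simplified model with hypothetical numbers, not a Spark API. It computes the scheduling delay each batch would see, which is the kind of metric the Spark UI reports for streaming jobs. In the DStream API, rate limiting based on this feedback can be switched on with the `spark.streaming.backpressure.enabled` setting.

```python
def scheduling_delays(processing_times, batch_interval):
    """Return the scheduling delay each batch sees before it starts.

    processing_times: seconds needed to process each successive batch.
    batch_interval: seconds between batch arrivals.
    """
    delays = []
    backlog = 0.0  # work still queued when the next batch arrives
    for processing_time in processing_times:
        delays.append(backlog)
        # The backlog shrinks when a batch finishes early and grows when it runs long.
        backlog = max(0.0, backlog + processing_time - batch_interval)
    return delays

# Hypothetical measurements with a 2-second batch interval.
print(scheduling_delays([1.5, 1.8, 1.9], batch_interval=2))  # stable: [0.0, 0.0, 0.0]
print(scheduling_delays([2.5, 2.5, 2.5], batch_interval=2))  # growing: [0.0, 0.5, 1.0]
```

A delay that keeps growing means the application needs a longer batch interval, more resources, or a lower ingestion rate.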

Plan for scalability and resource allocation

Planning involves assessing resource needs based on anticipated data volumes and processing complexities, ensuring availability of computational and memory resources. Opting for elastic resource management strategies helps accommodate changing data loads, improving resilience to demand spikes.

Designing for scalability includes using partitioning strategies to distribute workload and refine resource utilization. Developing modular application architectures can further enhance scalability, allowing individual components to be optimized independently.

Use reliable data sources

Reliable sources reduce data loss risk and ensure consistent data availability. Integrating fault-tolerant systems, like Apache Kafka or HDFS, supports continuous data flow under adverse conditions. Stability from these sources supports consistent streaming outputs and operational dependability.

Maintaining data source reliability also involves verifying data integrity and employing redundancy mechanisms. Engaging in regular audits and validations minimizes impacts from corrupted or missing data streams, ensuring analytic accuracy.

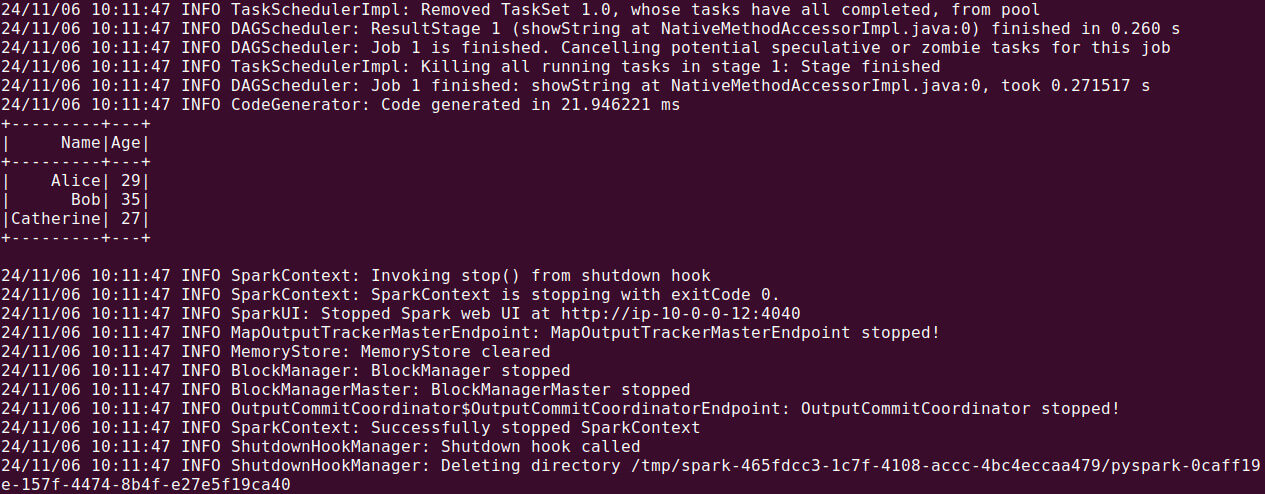

Quick tutorial: Apache Spark Streaming example

The following example demonstrates how to use Apache Spark’s Structured Streaming to maintain a running word count of text data from a TCP socket. This example shows how Spark Streaming can process real-time data and update results continuously. These instructions are adapted from the Spark documentation.

Step 1: Setting up the Spark session

The first step is to create a SparkSession, which is essential for initializing any Spark application:

```python
from pyspark.sql import SparkSession

# Initialize Spark session
spark = SparkSession \
    .builder \
    .appName("StructuredNetworkWordCount") \
    .getOrCreate()

# Create a simple DataFrame
data = [("Alice", 29), ("Bob", 35), ("Catherine", 27)]
df = spark.createDataFrame(data, ["Name", "Age"])

# Show the DataFrame
df.show()
```

Here, SparkSession is set up with the application name “StructuredNetworkWordCount”. This session is the entry point for interacting with Spark’s core functionalities, including DataFrames and streaming.

Step 2: Setting up the data stream

We now define a streaming DataFrame that listens to a specified TCP socket on localhost port 9999, capturing each line of incoming text as a row in the DataFrame:

```python
# Define a streaming DataFrame that reads from a socket
lines = spark \
    .readStream \
    .format("socket") \
    .option("host", "localhost") \
    .option("port", 9999) \
    .load()
```

The readStream method initializes a stream, where each line of incoming data is stored in a column named value within the lines DataFrame. This setup establishes the input source for streaming data.

Step 3: Processing the stream for word count

To calculate word counts, we need to split each line into individual words and count each unique word occurrence. We achieve this with the split and explode functions:

```python
from pyspark.sql.functions import explode, split

# Split each line into words and explode into individual rows
words = lines.select(
    explode(
        split(lines.value, " ")
    ).alias("word")
)
```

In this code:

- split(lines.value, " ") splits each line into a list of words.
- explode() transforms each word in the list into its own row in the DataFrame, and alias("word") names the resulting column “word”.

We can also group by these words and count occurrences:

```python
# Count each unique word in the stream
wordCounts = words.groupBy("word").count()
```

This statement groups rows by unique words and aggregates their counts, resulting in a streaming DataFrame that continuously updates word counts as new data flows in.

Step 4: Defining output and starting the stream

Finally, we define an output operation to print updated word counts to the console and then start the streaming query:

```python
# Set up the output query to print word counts
query = wordCounts \
    .writeStream \
    .outputMode("complete") \
    .format("console") \
    .start()

query.awaitTermination()
```

In this setup:

- outputMode("complete") specifies that the entire set of counts should be printed each time there is an update.
- start() begins the actual streaming computation.
- awaitTermination() keeps the application running to allow continuous streaming until explicitly stopped.
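To see what complete output mode implies, the plain-Python sketch below mimics the running word count without Spark: after every batch of input lines it emits the whole result table, not only the rows that changed. It is an analogy for the query above under simplified assumptions, and the sample input and the `complete_mode_outputs` name are hypothetical.

```python
from collections import Counter

def complete_mode_outputs(line_batches):
    """Yield the full running word-count table after each batch of lines."""
    totals = Counter()
    for lines in line_batches:
        for line in lines:
            # Mirrors split(lines.value, " ") followed by explode().
            totals.update(line.split(" "))
        # Complete mode re-emits every row, not just the rows that changed.
        yield dict(totals)

# Hypothetical input typed into Netcat in two bursts.
batches = [["apache spark"], ["apache kafka", "spark streaming"]]

for batch_id, table in enumerate(complete_mode_outputs(batches)):
    print(f"Batch: {batch_id}", table)
```

Because the full table is kept and re-emitted, complete mode suits aggregations with a bounded number of keys; for very large key spaces, an output mode that emits only changed rows is usually a better fit.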

Running the example

To test this example, you need a simple utility like Netcat (nc) to send text data to the specified TCP socket:

- In one terminal, start Netcat to listen for incoming text on port 9999:

```
$ nc -lk 9999
```

- In a separate terminal, run the Spark application:

```
$ ./bin/spark-submit structured_network_wordcount.py
```

- Any text entered in the Netcat terminal (e.g., apache spark) will be counted and printed in real time in the Spark application terminal.

Empowering Apache Spark through Instaclustr for Apache Kafka

For Apache Spark users, managing robust pipelines for real-time data processing can often feel daunting. That’s where Instaclustr’s Kafka experts step in to transform the game. By simplifying Apache Kafka’s complexities, Instaclustr delivers critical benefits that enable Apache Spark users to harness its full potential seamlessly.

Reliable data streaming made easy

Apache Kafka is renowned for its ability to handle high-throughput, fault-tolerant data streams, making it an essential tool for powering real-time applications in Spark. However, managing Kafka clusters effectively requires an in-depth understanding of its architecture and ongoing maintenance—tasks that can drain valuable resources.

Instaclustr eliminates this burden by offering fully managed Kafka services. With expert support and automated monitoring, Spark users can focus on building and optimizing their data pipelines instead of worrying about infrastructure upkeep.

Optimized performance with seamless integration

For Spark users leveraging Kafka’s pub/sub model to process real-time events, system reliability and low latency are vital. Instaclustr ensures a high-performance Kafka experience by providing optimized configurations, enterprise-grade SLAs, and automated scaling capabilities. This means faster data ingestion and reduced downtime, so Spark applications run efficiently without interruptions.

Enhanced data reliability and security

Spark users depend on accurate and secure data flows to build real-time analytics and machine learning models. Instaclustr’s expertise in Kafka management includes robust data replication mechanisms and security features such as end-to-end encryption, access controls, and compliance with industry standards. With these safeguards in place, Spark users can trust that their data remains reliable and protected.

Streamlined operations for innovation

By managing Kafka’s operational intricacies—including updates, patching, and constant monitoring—Instaclustr empowers Spark users to dedicate more resources to what truly matters: innovating and extracting value from their data. Whether it’s powering customer personalization models, detecting fraud in real-time, or enabling IoT solutions, Kafka’s seamless support through Instaclustr equips Spark users with the agility to scale their projects effortlessly.

With Instaclustr handling Kafka nuances behind the scenes, Apache Spark users gain peace of mind and unprecedented freedom to push boundaries in real-time data processing. By minimizing complexity and maximizing performance, Instaclustr becomes the trusted partner that takes Spark deployments to a whole new level.
