What is Cadence Workflow?

Cadence Workflow, also known as Cadence, is an open source orchestration engine for managing the execution of distributed processes. It ensures workflows execute as expected in the face of failures or interruptions. Cadence allows developers to model complex process logic using code, making workflows resilient and scalable.

This system decouples process semantics from the infrastructural concerns typically associated with distributed systems, providing a solution for workflow management. The architecture of Cadence Workflow is built around workflows and activities. Workflows define the overall business logic, while activities represent the individual tasks that make up the logic.

Cadence can maintain state across these executions, which provides fault tolerance. By leveraging features like timeouts and retries, Cadence ensures high availability and consistency for various processes without compromising on operational simplicity.

Key concepts of Cadence

When working with Cadence, it’s important to understand the following concepts.

Workflows

Workflows in Cadence represent the overarching business logic for processes, offering a stateful mechanism to execute operations. The state of a workflow, including variables, execution threads, and durable timers, is preserved even if failures occur at the process or service level. This is achieved through event sourcing, ensuring all workflow actions are logged and replayable in a deterministic manner.

Workflow code must adhere to strict guidelines, such as using Cadence APIs for operations like time handling and thread creation, to maintain this determinism. These workflows encapsulate state and logic while delegating any non-deterministic or external interactions to activities.
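The replay idea can be illustrated with a small sketch. This is not the Cadence SDK: `History`, `ReplayContext`, and `step` are hypothetical names invented for illustration, and the in-memory list stands in for a persisted event history. It only shows why routing non-deterministic work through a recorded log lets the same code be re-executed with the same result.

```python
from dataclasses import dataclass, field


@dataclass
class History:
    """Append-only event log standing in for a persisted workflow history."""
    events: list = field(default_factory=list)


class ReplayContext:
    """Hypothetical helper: reuses recorded results on replay, records new ones otherwise."""

    def __init__(self, history: History):
        self.history = history
        self.cursor = 0  # index of the next event to consume

    def step(self, name, fn):
        if self.cursor < len(self.history.events):
            # Replay: the step already ran once, so reuse its recorded result.
            recorded_name, result = self.history.events[self.cursor]
            if recorded_name != name:
                raise RuntimeError(
                    f"non-deterministic replay: expected {recorded_name!r}, got {name!r}"
                )
        else:
            # First execution: run the step and log its result.
            result = fn()
            self.history.events.append((name, result))
        self.cursor += 1
        return result


def order_workflow(ctx: ReplayContext, calls: list) -> int:
    """Toy workflow: every non-deterministic step goes through ctx.step."""
    price = ctx.step("fetch_price", lambda: calls.append("fetch_price") or 40)
    tax = ctx.step("compute_tax", lambda: calls.append("compute_tax") or 2)
    return price + tax


history = History()
calls = []
first = order_workflow(ReplayContext(history), calls)     # executes both steps
replayed = order_workflow(ReplayContext(history), calls)  # replays from the log
print(first, replayed, calls)
```

The second run returns the same total without invoking either step again. If the workflow code requested steps in a different order, the name check would raise, which is the kind of mismatch the determinism rules are meant to prevent.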

Activities

Activities are the building blocks of Cadence workflows, handling discrete, independent tasks. Unlike workflows, activities are not constrained by determinism, allowing them to perform external API calls, interact with databases, or carry out computationally intensive operations. They are executed by workers asynchronously, with task lists acting as queues to manage their execution.

When a workflow schedules an activity, the task list ensures that the activity is picked up by an available worker, allowing the system to scale based on workload. Activities also provide flexibility in handling failures, as retries and timeouts can be configured. Their stateless design makes them easy to manage, allowing tasks to be retried or reallocated to different workers.
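As a rough illustration of configurable retries, the sketch below retries a stateless function with exponential backoff. The function `run_with_retries` and its parameters (`max_attempts`, `initial_interval`, `backoff`) are assumptions made for this example and are not Cadence's retry policy API; the `sleep` argument is injected so the example runs without waiting.

```python
import time


def run_with_retries(activity, *, max_attempts=3, initial_interval=1.0,
                     backoff=2.0, sleep=time.sleep):
    """Call activity() until it succeeds or max_attempts is exhausted."""
    interval = initial_interval
    for attempt in range(1, max_attempts + 1):
        try:
            return activity()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(interval)
            interval *= backoff  # wait longer before each further attempt


attempts = []


def flaky_activity():
    # Stateless activity that fails twice, then succeeds.
    attempts.append(len(attempts) + 1)
    if len(attempts) < 3:
        raise ConnectionError("transient failure")
    return "charged"


waits = []  # records requested delays instead of sleeping
result = run_with_retries(flaky_activity, sleep=waits.append)
print(result, attempts, waits)
```

Because the activity keeps no state of its own between calls, any attempt could equally run on a different worker.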

Event handling

Event handling in Cadence allows workflows to interact with the outside world through signals. Signals are asynchronous messages sent to a specific workflow instance, enabling it to react to external triggers. These signals are processed in the order they are received, making them reliable for scenarios like event aggregation or state transitions.

For example, signals can notify workflows of changes in an IoT device’s state or update customer records in a loyalty program when a purchase is made. Workflows use signals to accumulate state or execute specific actions based on external inputs, such as sending notifications or invoking downstream services.
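The loyalty-program case can be sketched as a toy model. `LoyaltyWorkflow`, the signal names, and the points rule (one point per 10 currency units) are all hypothetical; the sketch only shows signals being buffered asynchronously and applied in arrival order to accumulate state.

```python
from collections import deque


class LoyaltyWorkflow:
    """Toy workflow instance that buffers signals and applies them in arrival order."""

    def __init__(self):
        self._signals = deque()  # FIFO buffer of pending signals
        self.points = 0
        self.applied = []

    def signal(self, name, payload):
        # Called by an external client; it only enqueues and returns immediately.
        self._signals.append((name, payload))

    def run_until_idle(self):
        # Drain the buffer in the order the signals were received.
        while self._signals:
            name, payload = self._signals.popleft()
            if name == "purchase":
                self.points += payload["amount"] // 10
            elif name == "redeem":
                self.points -= min(self.points, payload["points"])
            self.applied.append(name)


wf = LoyaltyWorkflow()
wf.signal("purchase", {"amount": 250})
wf.signal("redeem", {"points": 10})
wf.signal("purchase", {"amount": 40})
wf.run_until_idle()
print(wf.points, wf.applied)
```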

Synchronous query

Cadence workflows provide synchronous query functionality to expose their internal state to external systems. Queries are implemented as read-only callbacks that allow external clients to retrieve information about a workflow without modifying its state or interrupting its execution.

For example, a query could retrieve the current progress of a long-running workflow or the status of tasks. Queries must adhere to restrictions such as avoiding blocking code or activities to ensure they remain lightweight and non-disruptive. This feature is useful for monitoring, auditing, or generating real-time reports about workflow operations.
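A minimal sketch of the read-only idea, assuming a hypothetical `ProgressWorkflow` class rather than any Cadence API: the query handler returns a copy of the state, so a caller can inspect progress but cannot change it.

```python
import copy


class ProgressWorkflow:
    """Toy workflow that exposes its progress through a read-only query."""

    def __init__(self, total_steps):
        self._state = {"completed": 0, "total": total_steps, "failed_steps": []}

    def complete_step(self):
        self._state["completed"] += 1

    def query_progress(self):
        # Return a deep copy so callers cannot mutate workflow state.
        return copy.deepcopy(self._state)


wf = ProgressWorkflow(total_steps=4)
wf.complete_step()

snapshot = wf.query_progress()
snapshot["completed"] = 99           # mutating the snapshot ...
snapshot["failed_steps"].append(1)
print(wf.query_progress())           # ... leaves the workflow state untouched
```

The handler does no blocking work and schedules nothing, which mirrors the restrictions described above.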

Task lists

Task lists act as a coordination layer between workflows and the workers executing their activities. When a workflow schedules an activity, the task list queues the activity until a worker is ready to process it. This decoupling ensures that workflows can continue their operations without waiting for worker availability.

Task lists also enable dynamic scaling by routing tasks to available workers, balancing load, and ensuring no single worker is overwhelmed. They are created on demand and require no explicit configuration. Additionally, task lists allow for secure and efficient communication since workers do not need to expose open ports or advertise themselves.
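The polling model can be approximated with a queue and a few threads. This is only an analogy under stated assumptions: `queue.Queue` stands in for a task list, the threads stand in for worker processes, and squaring a number stands in for an activity. The point it shows is that workers pull work, so nothing needs to connect to them.

```python
import queue
import threading


def worker(name, task_list, results):
    # Workers pull from the task list; nothing ever connects *to* a worker.
    while True:
        task = task_list.get()
        if task is None:  # sentinel: shut this worker down
            task_list.task_done()
            return
        results.append((name, task, task * task))
        task_list.task_done()


task_list = queue.Queue()  # created on demand, no configuration
results = []
workers = [
    threading.Thread(target=worker, args=(f"worker-{i}", task_list, results))
    for i in range(3)
]
for w in workers:
    w.start()

for n in range(6):  # the "workflow" schedules work without waiting for a worker
    task_list.put(n)
for _ in workers:   # one sentinel per worker, queued after all real tasks
    task_list.put(None)
for w in workers:
    w.join()

print(sorted(r[2] for r in results))
```

Which worker handles which task varies between runs, but every task is processed exactly once.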

Archival

Archival addresses long-term data retention needs by moving workflow histories and visibility records to secondary storage after their retention period expires. This feature is essential for organizations that require compliance with regulatory standards or need to debug historical workflows. Archived data is stored in locations like S3, local filesystems, or other supported storage backends, specified through URI-based configurations.

The archival process is managed by the Archiver component, which is responsible for both storing and retrieving records. However, to access archived workflows, both the workflow ID and run ID are required. While archival is generally reliable, it operates on a best-effort basis, meaning that records may not be archived if the system deletes them prematurely.
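The lookup constraint can be shown with a small in-memory stand-in. `ArchiveStore`, the bucket name, and the identifiers are hypothetical, and real archivers persist to external storage; the sketch only shows a URI selecting a backend and records being keyed by the pair of workflow ID and run ID.

```python
from urllib.parse import urlparse


class ArchiveStore:
    """Hypothetical in-memory stand-in for an archival backend."""

    def __init__(self, uri):
        parsed = urlparse(uri)
        if parsed.scheme not in {"file", "s3"}:
            raise ValueError(f"unsupported archival URI scheme: {parsed.scheme!r}")
        self.scheme = parsed.scheme
        self.location = parsed.netloc + parsed.path
        self._records = {}

    def archive(self, workflow_id, run_id, history):
        self._records[(workflow_id, run_id)] = list(history)

    def get(self, workflow_id, run_id):
        # Both identifiers are required; a workflow ID alone can match several runs.
        return self._records.get((workflow_id, run_id))


store = ArchiveStore("s3://example-archive-bucket/cadence")
store.archive("order-42", "run-a", ["started", "completed"])
print(store.scheme, store.location)
print(store.get("order-42", "run-a"), store.get("order-42", "run-b"))
```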

Quick tutorial: Install Cadence service locally

To set up Cadence locally, you need to install its key components: the Cadence server, a domain for workflows, and the worker service. These instructions are adapted from the Cadence documentation.

Step 0: Install Docker

Cadence uses Docker to simplify deployment. If you don’t have Docker installed, follow the official Docker installation guide to set it up for your operating system.

Step 1: Run Cadence server using Docker Compose

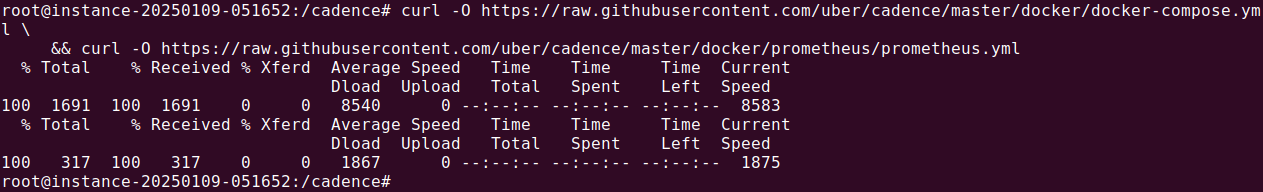

Download the Cadence docker-compose.yml and Prometheus configuration files. Open a terminal and run:

    curl -O https://raw.githubusercontent.com/uber/cadence/master/docker/docker-compose.yml \
      && curl -O https://raw.githubusercontent.com/uber/cadence/master/docker/prometheus/prometheus.yml

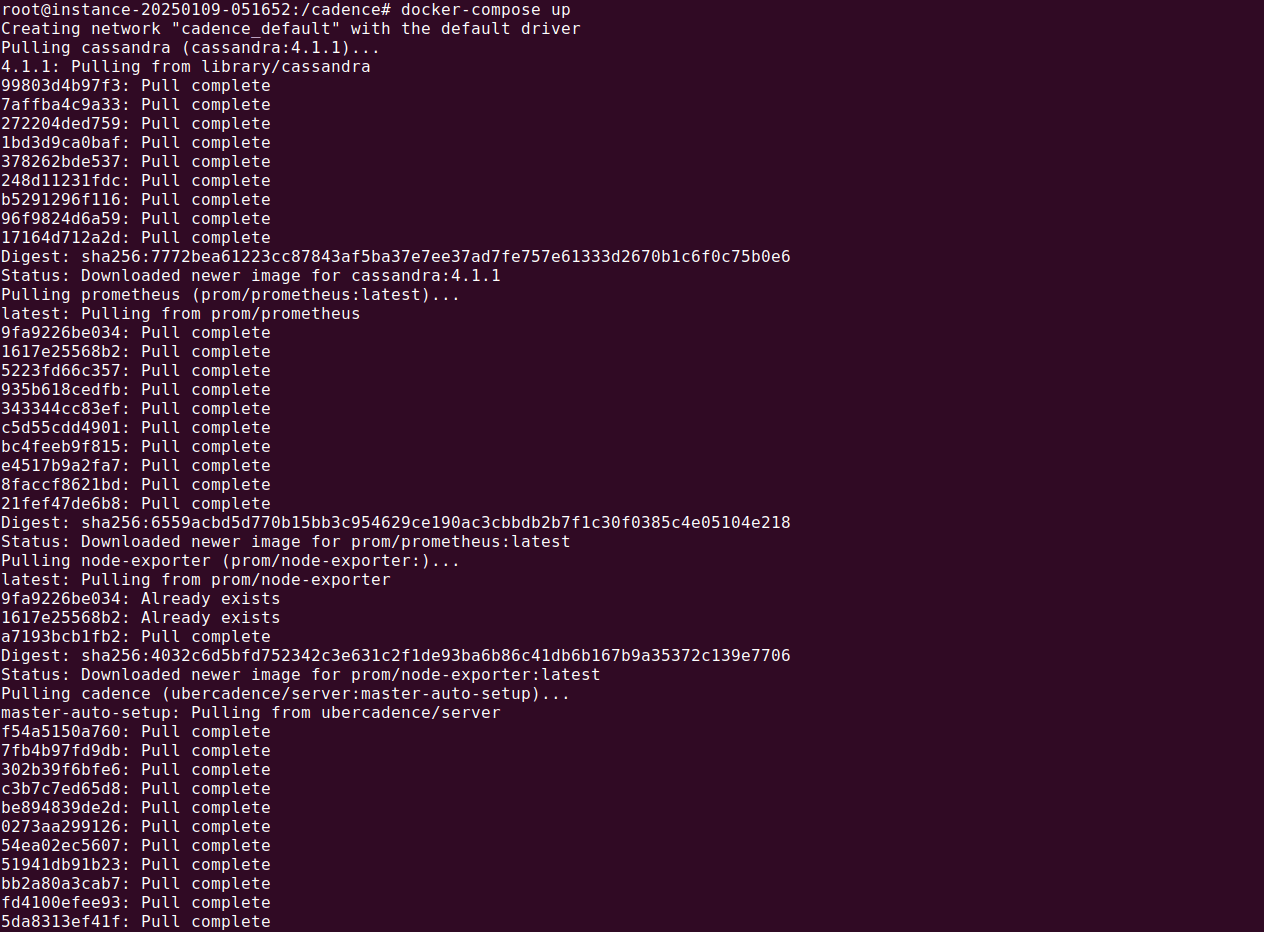

Next, start the Cadence server using Docker Compose:

    docker-compose up

This starts the Cadence server and its dependencies, such as Cassandra and Prometheus. Leave this terminal session running, as it hosts the Cadence service.

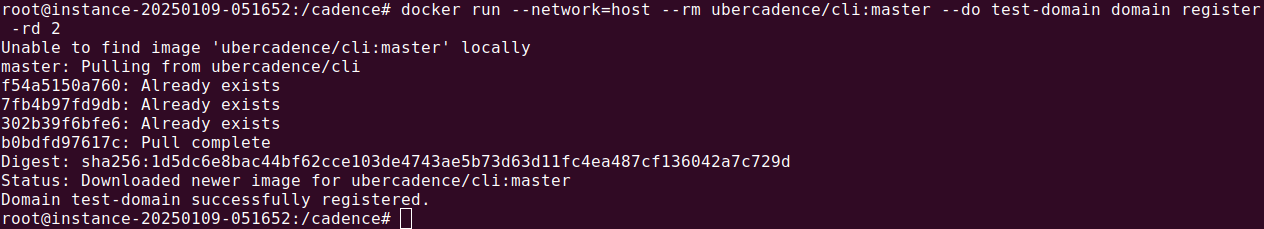

Step 2: Register a domain using the CLI

Domains in Cadence are namespaces used to organize workflows. To register a domain, open a new terminal and use the following command:

    docker run --network=host --rm ubercadence/cli:master --do test-domain domain register -rd 2

Replace test-domain with the desired domain name. The -rd 2 option specifies a retention period of 2 days for workflow histories.

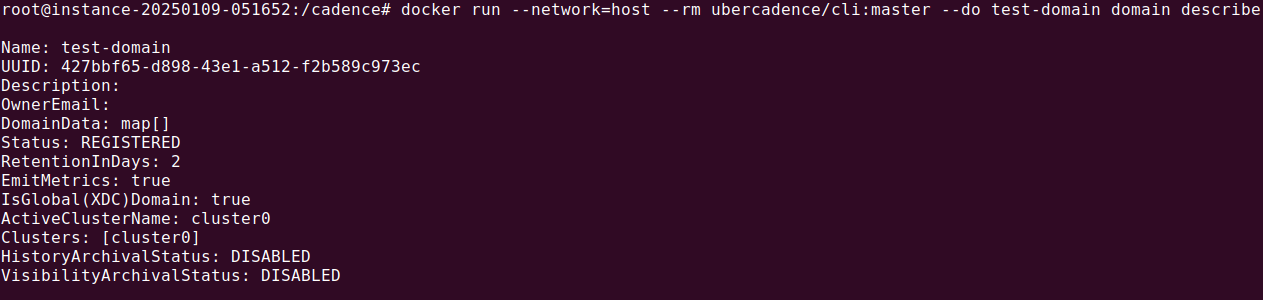

Verify the domain registration with this command:

    docker run --network=host --rm ubercadence/cli:master --do test-domain domain describe

You should see output confirming the domain details, including its status and retention period. For example:

    Name: test-domain
    Status: REGISTERED
    RetentionInDays: 2
    ArchivalStatus: DISABLED

After completing these steps, you’ll have a functional Cadence server and a registered domain. You can now proceed to implement workflows and run worker services to execute activities within the domain.

Tips from the expert

Paul Brebner

Technology Evangelist at NetApp Instaclustr

Paul has extensive R&D and consulting experience in distributed systems, technology innovation, software architecture and engineering, software performance and scalability, grid and cloud computing, and data analytics and machine learning.

In my experience, here are tips that can help you better implement and optimize workflows using Cadence Workflow:

- Focus on deterministic design: Ensure all workflow code adheres to Cadence’s determinism rules. Avoid using random generators, system clocks, or non-deterministic APIs directly in workflow code. Instead, use Cadence-provided APIs to simulate these operations for consistency and replayability.

- Leverage workflow versioning: For evolving workflows, use Cadence’s versioning APIs to introduce new logic incrementally. This avoids disrupting currently running workflows when updating workflow definitions.

- Design idempotent activities: Ensure activities are idempotent by handling retries gracefully. Use unique identifiers for operations like database writes or API calls to avoid duplication.

- Use task prioritization with task lists: Organize task lists by priority levels to ensure high-importance activities are executed promptly. Implement dynamic task routing if workflows require rebalancing workloads among workers.

- Optimize worker performance: Use resource-constrained workers for lightweight workflows and specialized workers for CPU-intensive activities. Monitor worker health and scale horizontally to meet high demands.
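The idempotency tip can be sketched as follows. `PaymentLedger`, `charge_activity`, and the key format are hypothetical and not part of Cadence; the idea shown is deriving a key from stable identifiers so that a retried activity does not repeat its side effect.

```python
class PaymentLedger:
    """Hypothetical downstream system that deduplicates by idempotency key."""

    def __init__(self):
        self._seen = {}
        self.total_charged = 0

    def charge(self, idempotency_key, amount):
        if idempotency_key in self._seen:
            return self._seen[idempotency_key]  # retry: return the earlier receipt
        self.total_charged += amount
        receipt = f"receipt-{len(self._seen) + 1}"
        self._seen[idempotency_key] = receipt
        return receipt


def charge_activity(ledger, workflow_id, activity_id, amount):
    # The key is built from stable identifiers, so every retry reuses it.
    key = f"{workflow_id}:{activity_id}"
    return ledger.charge(key, amount)


ledger = PaymentLedger()
first = charge_activity(ledger, "order-42", "charge-1", 30)
retry = charge_activity(ledger, "order-42", "charge-1", 30)  # simulated retry
print(first == retry, ledger.total_charged)
```

A key generated randomly inside the activity would change on every attempt and defeat the deduplication, which is why it is derived from identifiers that stay fixed across retries.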

Notable Cadence Workflow alternatives

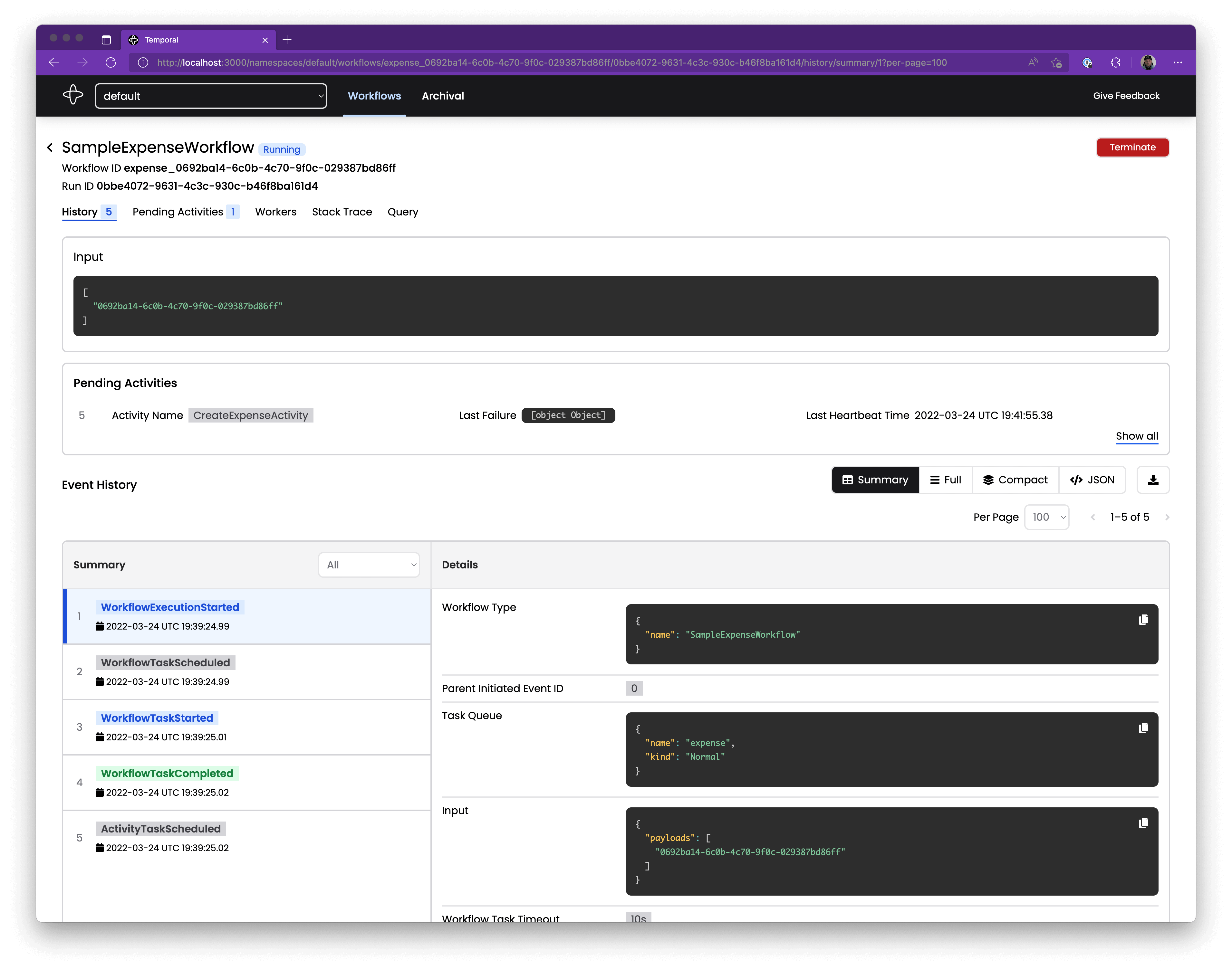

Temporal

Temporal is an open source platform that provides durable execution for applications. By enabling developers to write workflows as code using SDKs in their preferred languages, Temporal simplifies the creation of fault-tolerant and scalable applications. It splits application logic into workflows, which define the overall logic, and activities, which handle external interactions prone to failures.

License: MIT

Repository: https://github.com/temporalio/temporal

GitHub stars: 12K+

Contributors: 200+

Key features include:

- Code-defined workflows: Write workflows as code using Temporal’s SDKs, allowing developers to use familiar IDEs, tools, and libraries.

- Durable execution: Ensures business logic can be replayed, paused, or recovered from any arbitrary point.

- Activity management: Handles failure-prone tasks with automatic retries, long-running operations with heartbeats, and flexible routing.

- Infrastructure flexibility: Deploy Temporal on any infrastructure, from monoliths to microservices, with support for self-hosted or serverless Temporal Cloud.

- Task queue coordination: Uses task queues for efficient orchestration between workflows and workers, enabling scalable execution.

Source: Temporal

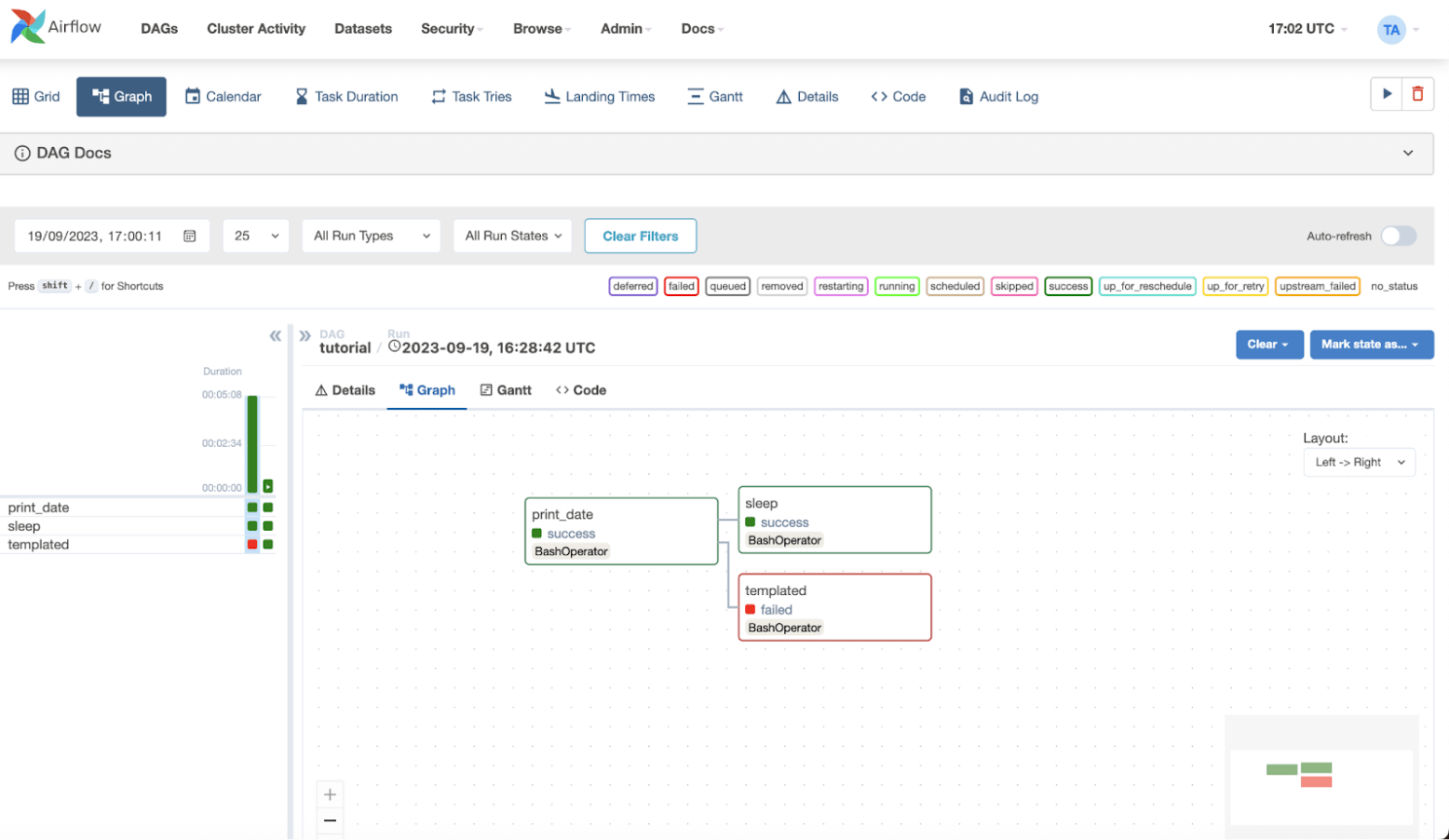

Apache Airflow®

Apache Airflow is an open source platform for orchestrating and managing batch workflows. It allows developers to create, schedule, and monitor workflows written in Python. With its extensible architecture, Airflow integrates with various tools and technologies, enabling dynamic and scalable workflow automation.

License: Apache-2.0

Repository: https://github.com/apache/airflow

GitHub stars: 37K+

Contributors: 3K+

Key features include:

- Workflows as code: Airflow workflows, or DAGs (Directed Acyclic Graphs), are defined in Python, offering dynamic, flexible, and version-controlled pipeline creation. Developers can write and parameterize workflows with full programmatic control using Python libraries and Jinja templating.

- Extensible framework: Airflow’s modular architecture includes a rich set of built-in operators for interacting with various technologies, such as databases, cloud services, and APIs. Custom operators and plugins can be created to fit different needs.

- Powerful scheduling: Supports scheduling workflows at regular intervals, with options like backfilling for rerunning tasks on historical data or manually triggering individual tasks after resolving errors.

- User interface: The web UI offers detailed views of workflows and tasks. It includes Graph and Grid views for visualizing DAGs, task logs for debugging, and features to retry, pause, or monitor pipeline execution.

- Scalability: Supports distributed execution using its Celery executor or Kubernetes executor, enabling it to handle large-scale workflows.

Source: Apache Airflow

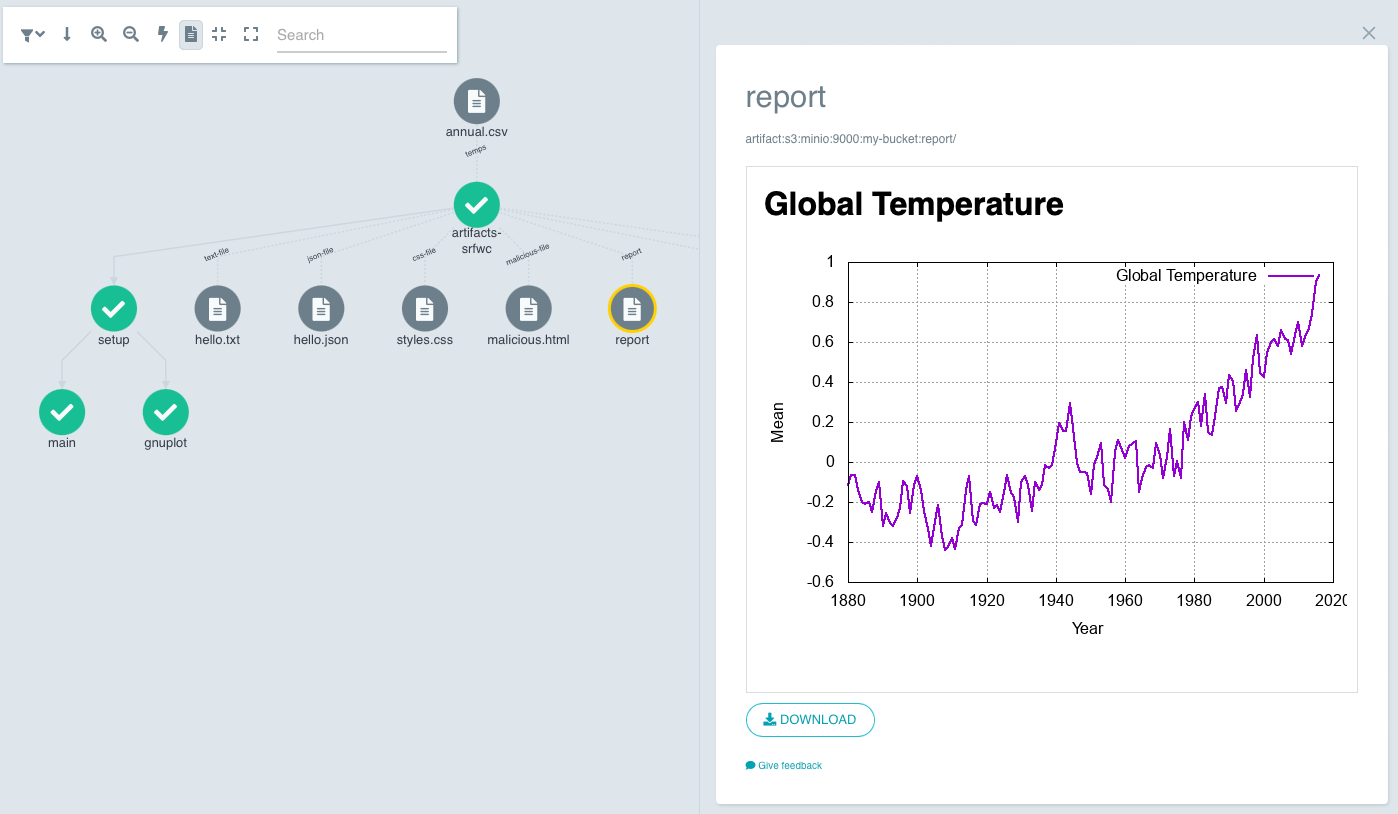

Argo Workflows

Argo Workflows is an open source, container-native workflow engine for orchestrating parallel jobs on Kubernetes. It enables users to define workflows where each step runs as a container, using Kubernetes Custom Resource Definitions (CRDs).

License: Apache-2.0

Repository: https://github.com/argoproj/argo-workflows

GitHub stars: 15K+

Contributors: 800+

Key features include:

- Kubernetes-native design: Built as a Kubernetes CRD, Argo Workflows integrates natively with Kubernetes, making it lightweight and efficient without the need for legacy VM-based environments.

- DAG and step-based workflow modeling: Supports both DAG-based workflows to define task dependencies and step-based workflows for sequential task execution.

- Scalability and parallelism: Efficiently runs parallel and compute-intensive jobs, making it suitable for machine learning, data pipelines, and infrastructure automation.

- Rich artifact support: Offers integration with storage backends such as S3, GCS, Azure Blob Storage, and Git for managing workflow artifacts.

- Templating and reusability: Allows users to define workflow templates, enabling the reuse of common patterns across workflows in the cluster.

Source: Argo Workflows

Prefect

Prefect is an open source workflow orchestration framework for building and managing data pipelines in Python. Aiming to convert simple scripts into production-grade workflows, it helps teams automate data processes with features like scheduling, retries, and caching.

License: Apache-2.0

Repository: https://github.com/PrefectHQ/prefect

GitHub stars: 17K+

Contributors: 300+

Key features include:

- Python-native orchestration: Workflows, referred to as flows, are created using Python decorators, enabling developers to build pipelines with minimal code.

- Dynamic and resilient pipelines: Workflows can adapt to changing conditions and recover from unexpected failures using built-in features like retries, caching, and logging.

- Flexible deployment options: Can run workflows locally, on-premises, or via Prefect Cloud, which offers a fully managed, scalable orchestration platform.

- Event-driven workflows: Supports workflows that respond to triggers such as external events, schedules, or manual inputs.

- Rich scheduling capabilities: Schedules workflows with cron expressions or intervals, and helps monitor their execution through the UI.

Source: Prefect

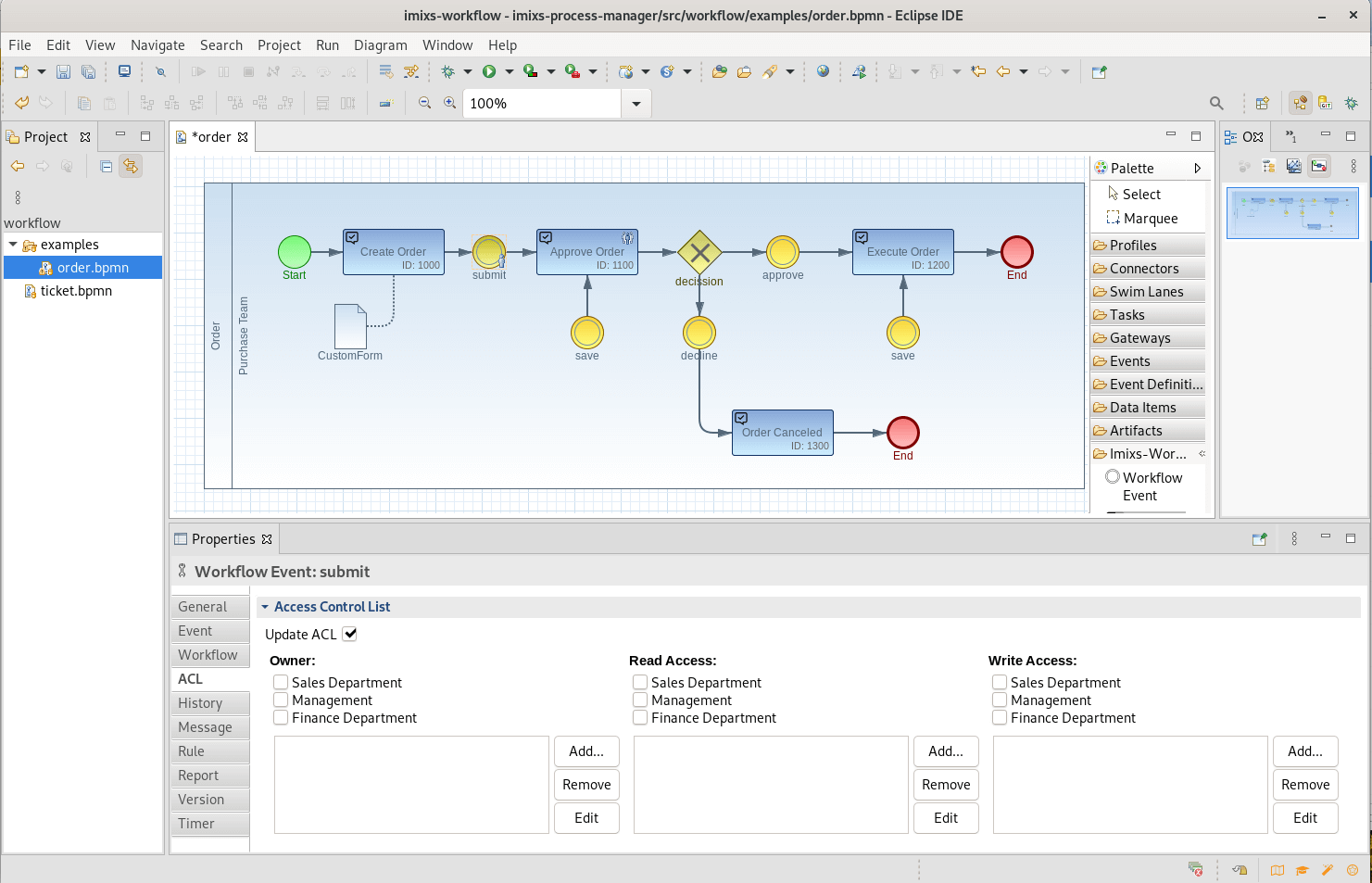

Imixs-Workflow

Imixs-Workflow is an open source workflow engine based on the BPMN 2.0 standard. It automates complex business processes, improves productivity, and simplifies enterprise workflows. Its modular architecture and APIs allow integration of BPMN 2.0 into business applications.

License: GPL-3.0

Repository: https://github.com/imixs/imixs-workflow

GitHub stars: 300+

Contributors: <10

Key features include:

- BPMN 2.0 support: Offers support for the BPMN 2.0 standard, enabling the creation of event-driven, human-centric workflows. Business logic can be modified at runtime without the need for code changes.

- Multi-level security: Implements a fine-grained access control mechanism embedded directly into BPMN models.

- RESTful API and microservices: Provides a flexible REST Service API for integration into application architectures. Can also run as a microservice in a Docker container.

- Event-driven architecture: Uses an event-driven modeling concept to manage and trigger workflows, ensuring dynamic and responsive process handling.

- Customizability and extensibility: Includes a range of APIs and plug-ins to extend its functionality and integrate into existing systems.

Source: Imixs

Instaclustr for Cadence: Seamless data orchestration

Instaclustr empowers businesses to harness the full potential of their data infrastructure with confidence and precision. By offering a fully managed platform for open source technologies, Instaclustr ensures organizations can focus on driving business forward while Instaclustr handles the complexities of infrastructure and data orchestration.

With the addition of support for Cadence, a powerful workflow orchestration engine, Instaclustr further streamlines data-driven operations, enabling enterprises to build and manage complex applications with ease.

Instaclustr’s expertise lies in delivering reliable, scalable solutions for mission-critical applications. Supporting Cadence allows organizations to orchestrate workflows that enhance operational efficiency while ensuring scalability and resilience.

Whether unifying distributed systems or automating highly complex processes, Instaclustr ensures that the infrastructure backbone remains solid, secure, and optimized.

Capabilities of Instaclustr for Cadence:

- Fully managed service: Instaclustr takes care of provisioning, monitoring, and supporting your Cadence workflows, so organizations can focus on development and innovation.

- 24×7 expert support: Instaclustr’s team of open source technology specialists provides around-the-clock support, ensuring minimized downtime and maximized performance.

- Seamless scalability: Easily scale workflow orchestration infrastructure to match evolving business demands, without compromising performance or reliability.

- 100% open source: Built on a commitment to open source technologies, Instaclustr ensures full transparency, cost efficiency, and freedom from vendor lock-in.

- Integrated security: Enterprise-grade security with compliance and safeguarding measures protects data and systems.

- Proven reliability: With optimized uptime and proactive monitoring, Instaclustr guarantees the dependable performance of Cadence workflows.

Key benefits:

- Streamline complexity: Simplify the management of distributed systems and multi-cloud workflows without the need for extensive infrastructure expertise.

- Boost productivity: Free teams from administrative burdens and allow them to dedicate time to core business priorities.

- Accelerate time-to-market: Leverage fully managed workflows to reduce development cycles and innovate faster.

- Achieve cost efficiency: Optimize resources with a scalable, pay-as-you-grow model tailored to business needs.

Instaclustr for Cadence simplifies the intricacies of workflow orchestration and open source management. It provides peace of mind with enterprise-grade reliability, security, and support for your Cadence environment. Achieve the full potential of your applications with Instaclustr as a trusted guide for scalable, efficient, and cutting-edge data solutions.

For more information: