What is Apache Kafka?

Apache Kafka is an open-source distributed event streaming platform originally developed at LinkedIn and later donated to the Apache Software Foundation. It is integral to modern data pipelines, supporting use cases such as log aggregation, real-time analytics, and event sourcing.

Kafka handles real-time data feeds with high throughput, low latency, and fault tolerance. Kafka operates as a distributed commit log, allowing applications to publish and subscribe to large streams of messages in a scalable and durable manner. It is well-suited for scenarios where capturing data streams for long-term storage and processing is critical.

Kafka’s architecture includes brokers, producers, consumers, and a distributed commit log. Brokers handle data replication and storage, ensuring durability and fault tolerance. Producers send streams of data to Kafka topics, while consumers read and process this data. This architecture supports horizontal scalability, handling large datasets efficiently.
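To make the commit log idea concrete, here is a minimal in-memory sketch in plain Java. It is not Kafka's implementation: the class name `CommitLogSketch` and its methods are hypothetical, it models a single partition with no replication or persistence, and it only illustrates that producers append records at increasing offsets while consumers read from an offset without removing anything.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: one in-memory "partition", not how Kafka stores data.
class CommitLogSketch {
    private final List<String> records = new ArrayList<>();

    // Producer side: append a record and return the offset it was stored at.
    long append(String value) {
        records.add(value);
        return records.size() - 1;
    }

    // Consumer side: read every record from the given offset to the end of the log.
    // Reading never deletes records, so several consumers can read independently.
    List<String> readFrom(long offset) {
        int start = (int) Math.max(0, Math.min(offset, records.size()));
        return new ArrayList<>(records.subList(start, records.size()));
    }

    public static void main(String[] args) {
        CommitLogSketch log = new CommitLogSketch();
        log.append("order-created");
        log.append("order-paid");
        long lastOffset = log.append("order-shipped");

        System.out.println("last offset: " + lastOffset);
        System.out.println("consumer A from offset 0: " + log.readFrom(0));
        System.out.println("consumer B from offset 2: " + log.readFrom(2));
    }
}
```

Because each consumer tracks its own offset, a slow or newly added consumer can start from an earlier position without affecting the others.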

Benefits of Apache Kafka

Apache Kafka offers benefits that make it a key component of modern data infrastructure. First, it provides high throughput and low latency, enabling real-time data processing. A well-provisioned Kafka cluster can handle millions of messages per second, making it suitable for applications requiring rapid data ingestion and processing. Its distributed nature supports high availability: data is replicated across multiple brokers, which avoids single points of failure and keeps the system resilient.

Kafka’s scalability is another advantage. It scales horizontally by adding more brokers to the cluster, accommodating increased data volumes and processing demands. The platform also integrates with a wide range of data sources and sinks, including databases, cloud storage, and big data frameworks like Hadoop and Spark.

Additionally, Kafka’s strong durability guarantees ensure that data is safely stored and retrievable even in the event of system failures, making it reliable for critical applications.
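Durability also depends on how producers are configured. The sketch below builds a plain `java.util.Properties` object with the producer settings `acks` and `enable.idempotence`, which are standard Kafka producer configuration keys. The class name and the `localhost:9092` address are assumptions for illustration, and the replication factor of the topic itself is set separately when the topic is created.

```java
import java.util.Properties;

// Illustrative producer settings; the values are a starting point, not a recommendation.
class DurableProducerConfigSketch {
    static Properties durableProducerConfig() {
        Properties props = new Properties();
        // Assumed broker address, matching the local quickstart setup.
        props.setProperty("bootstrap.servers", "localhost:9092");
        // Wait until all in-sync replicas have acknowledged each record.
        props.setProperty("acks", "all");
        // Let the broker discard duplicates caused by producer retries.
        props.setProperty("enable.idempotence", "true");
        return props;
    }

    public static void main(String[] args) {
        durableProducerConfig().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

On a single-broker test cluster these settings add no redundancy, since each partition has only one replica; they matter once topics are replicated across several brokers.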

Learn more in our detailed guide to Kafka management.

Key use cases of Apache Kafka

Apache Kafka’s versatility and robustness make it ideal for a variety of use cases across different industries. Here are some of the key use cases:

- Real-time analytics: Organizations leverage Kafka for real-time data analytics to gain immediate insights. By streaming data from various sources into a Kafka cluster, companies can process and analyze the data in real time, enabling quick decision-making. This is particularly useful in monitoring systems, fraud detection, and online recommendations.

- Log aggregation: Kafka serves as a centralized platform for collecting logs from multiple systems. By consolidating logs into a single Kafka cluster, organizations can simplify log processing, making it easier to search, analyze, and store log data. This approach enhances system monitoring and debugging capabilities.

- Event sourcing: In event-driven architectures, Kafka is used to capture and store every change to an application’s state as an immutable event. This allows for a complete replay of events to reconstruct past states, ensuring data consistency and reliability. It’s widely used in financial systems, order management, and customer behavior tracking.

- Data integration: Kafka acts as a data pipeline to facilitate data integration across different systems. It can ingest data from various sources, such as databases, applications, and sensors, and then stream it to downstream systems for further processing and storage. This is crucial for building scalable and efficient data architectures.

- Stream processing: Kafka, combined with stream processing frameworks like Apache Flink, Apache Storm, and Kafka Streams, enables real-time processing of data streams. This capability is essential for applications that require continuous processing and analysis of incoming data, such as sensor data processing, anomaly detection, and real-time dashboards.

- Messaging: Kafka’s messaging system supports asynchronous communication between microservices. It can handle large volumes of messages with high throughput and low latency, ensuring reliable and scalable message delivery. This makes Kafka an ideal choice for microservices architectures, enhancing their reliability and performance.
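The event sourcing use case above can be sketched without any Kafka dependency. In this minimal example, the `AccountEvent` type and the account data are hypothetical; the point is only that current state is a fold over an ordered list of immutable events, so replaying a prefix of the log reconstructs an earlier state.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustrative only: state rebuilt by replaying events in order.
class EventReplaySketch {
    // Hypothetical event shape: an account id and a signed amount in cents.
    static final class AccountEvent {
        final String accountId;
        final long amountCents;

        AccountEvent(String accountId, long amountCents) {
            this.accountId = accountId;
            this.amountCents = amountCents;
        }
    }

    // Fold the events, in log order, into a balance per account.
    static Map<String, Long> replay(List<AccountEvent> events) {
        Map<String, Long> balances = new LinkedHashMap<>();
        for (AccountEvent e : events) {
            balances.merge(e.accountId, e.amountCents, Long::sum);
        }
        return balances;
    }

    public static void main(String[] args) {
        List<AccountEvent> log = List.of(
            new AccountEvent("acct-1", 10_000),
            new AccountEvent("acct-2", 5_000),
            new AccountEvent("acct-1", -2_500));

        System.out.println("current state: " + replay(log));
        // Replaying only the first two events reconstructs an earlier state.
        System.out.println("state after two events: " + replay(log.subList(0, 2)));
    }
}
```

In a real deployment the event list would come from a Kafka topic read from the beginning, which is what makes a long retention period valuable for this pattern.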

Tips from the expert

Andrew Mills

Senior Solution Architect

Andrew Mills is an industry leader with extensive experience in open source data solutions and a proven track record in integrating and managing Apache Kafka and other event-driven architectures.

In my experience, here are tips that can help you better utilize Apache Kafka:

- Partition your topics effectively: Distribute partitions across brokers to balance the load. Consider key-based partitioning so that messages with the same key land in the same partition and are processed in order, which is crucial for maintaining data integrity in some applications.

- Implement monitoring and alerting: Set up robust monitoring using tools like Prometheus and Grafana. Track key metrics such as producer latency, consumer lag, and broker health to proactively address performance and reliability issues.

- Secure your Kafka cluster: Use TLS for encrypting data in transit and implement SASL for authentication. Properly configure ACLs to control access and ensure that only authorized applications and individuals can produce or consume messages.

- Optimize batch sizes and linger time: Adjusting the producer’s batch size (batch.size) and linger time (linger.ms) can significantly impact throughput and latency. Larger batches improve throughput, and a longer linger time lets the producer collect more records per batch at the cost of some added latency.
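Two of the tips above can be illustrated in plain Java. The sketch below is a simplified stand-in, not Kafka's partitioner: Kafka's default partitioner hashes keys with murmur2, while this example uses `Arrays.hashCode` purely to show that the same key always maps to the same partition. The `batch.size` and `linger.ms` keys are standard producer settings; the values, the class name, and the broker address are assumptions to tune for your own workload.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Properties;

// Illustrative only: simplified key partitioning and example batching settings.
class ProducerTuningSketch {
    // Same key -> same partition, so records for one key stay in order.
    // NOTE: Kafka itself uses a murmur2 hash; this hash is a stand-in.
    static int partitionFor(String key, int numPartitions) {
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        return Math.floorMod(hash, numPartitions);
    }

    // Example batching settings (assumed values, not recommendations).
    static Properties batchingConfig() {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", "localhost:9092"); // assumed local broker
        props.setProperty("batch.size", "65536"); // maximum batch size in bytes
        props.setProperty("linger.ms", "10");     // wait up to 10 ms to fill a batch
        return props;
    }

    public static void main(String[] args) {
        int partitions = 6;
        for (String key : new String[] {"customer-1", "customer-2", "customer-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
        batchingConfig().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

A sensible way to use this is to change one setting at a time and watch producer latency and consumer lag in your monitoring before and after.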

Tutorial for beginners: Getting started with Apache Kafka

This tutorial is adapted from the official Kafka documentation.

Step 1: Get Kafka

To start with Apache Kafka, download the latest Kafka release from the Kafka download page and extract it using the following commands:

    $ wget https://dlcdn.apache.org/kafka/3.7.1/kafka_2.13-3.7.1.tgz
    $ tar -xzf kafka_2.13-3.7.1.tgz
    $ cd kafka_2.13-3.7.1

Step 2: Initialize Kafka

To set up your Kafka environment, ensure your system has Java 8 or later installed. Kafka can be started in ZooKeeper mode, in KRaft mode, or from a Docker image. ZooKeeper mode is deprecated and not recommended for new clusters, so this tutorial covers KRaft and Docker.

Starting Kafka with KRaft:

Generate a Cluster UUID:

    $ export KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"

Format the log directories:

    $ bin/kafka-storage.sh format -t $KAFKA_CLUSTER_ID -c config/kraft/server.properties

Start the Kafka server:

    $ bin/kafka-server-start.sh config/kraft/server.properties

Starting Kafka using a Docker image:

Pull the Kafka Docker image:

    $ docker pull apache/kafka:3.7.1

Run the Kafka Docker container:

    $ docker run -p 9092:9092 apache/kafka:3.7.1

Step 3: Create a topic and write events

Before writing events to Kafka, create a topic to store them. In a new terminal session, run:

    $ bin/kafka-topics.sh --create --topic my-events --bootstrap-server localhost:9092

To view details of the created topic:

    $ bin/kafka-topics.sh --describe --topic my-events --bootstrap-server localhost:9092

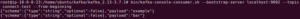

The output should look something like:

Use the console producer client to write events to your topic:

    $ bin/kafka-console-producer.sh --topic my-events --bootstrap-server localhost:9092

Type your messages, each resulting in an event:

    Example of one event
    Example of another event

Stop the producer client with Ctrl-C.

Step 4: Read the events

Open a new terminal session and use the console consumer client to read the events:

    $ bin/kafka-console-consumer.sh --topic my-events --from-beginning --bootstrap-server localhost:9092

You should see:

    Example of one event
    Example of another event

Stop the consumer client with Ctrl-C.

Step 5: Use Kafka Connect to import/export data and process with Kafka Streams

Kafka Connect allows continuous data integration with external systems. First, make sure connect-file-<VERSION>.jar, which is in the libs subfolder of your Kafka directory, is on the Connect plugin path. To do this, append a plugin.path entry to the config/connect-standalone.properties file:

    $ echo "plugin.path=libs/connect-file-3.7.1.jar" >> config/connect-standalone.properties

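Create seed data. The commands in this step use myfile.txt and myfile.sink.txt, while the connector configuration files shipped with Kafka point to test.txt and test.sink.txt, so set the file property in config/connect-file-source.properties and config/connect-file-sink.properties to match before starting the connectors:

    $ echo -e "example\nbar" > myfile.txt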

Start the connectors in standalone mode:

    $ bin/connect-standalone.sh config/connect-standalone.properties config/connect-file-source.properties config/connect-file-sink.properties

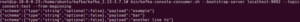

The output should look something like:

Verify the data in the output file using this command:

    $ more myfile.sink.txt

You can also use the console consumer to verify:

    $ bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic connect-test --from-beginning

Add more data to myfile.txt:

    $ echo "Another line" >> myfile.txt

Let’s implement a simple filtering algorithm that processes data stored in Kafka. This example will filter out any lines containing the word “filter” and write the rest to an output topic.

Note: You will need to create a complete application using Maven/Gradle in order to run the code snippet.

    StreamsBuilder builder = new StreamsBuilder();
    KStream<String, String> textLines = builder.stream("my-events");

    // Filter out lines containing the word "filter"
    KStream<String, String> filteredLines = textLines.filter(
        (key, value) -> !value.toLowerCase().contains("filter")
    );

    // Send the filtered data to a new topic
    filteredLines.to("output-topic", Produced.with(Serdes.String(), Serdes.String()));

    KafkaStreams streams = new KafkaStreams(builder.build(), getStreamsConfig());
    streams.start();

In this example:

- We create a StreamsBuilder instance.

- We read from the my-events topic using builder.stream("my-events").

- We apply a filter to remove any lines containing the word “filter”.

- The filtered lines are written to the output-topic.
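The snippet calls getStreamsConfig() without showing it. One possible shape for that helper is sketched below, using only `java.util.Properties` and the string forms of standard Kafka Streams configuration keys. The class name, the application id `filter-example`, and the `localhost:9092` address are assumptions; the original tutorial's helper may differ.

```java
import java.util.Properties;

// A possible getStreamsConfig() helper; values here are assumptions.
class StreamsConfigSketch {
    static Properties getStreamsConfig() {
        Properties props = new Properties();
        // Identifies this Streams application; also used as the consumer group id.
        props.setProperty("application.id", "filter-example");
        // Assumed local broker from the earlier steps.
        props.setProperty("bootstrap.servers", "localhost:9092");
        // builder.stream("my-events") relies on default serdes, so set them to String.
        props.setProperty("default.key.serde",
            "org.apache.kafka.common.serialization.Serdes$StringSerde");
        props.setProperty("default.value.serde",
            "org.apache.kafka.common.serialization.Serdes$StringSerde");
        return props;
    }

    public static void main(String[] args) {
        getStreamsConfig().forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

In a project that already depends on kafka-streams, the same keys are available as constants on the StreamsConfig class, which avoids typos in the key names.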

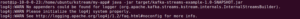

To test the above functionality, open three terminals. On terminal number 1, start the Kafka Streams application:

    $ java -jar target/kafka-streams-example-1.0-SNAPSHOT.jar

On terminal number 2, start the producer using the following command:

    $ bin/kafka-console-producer.sh --topic my-events --bootstrap-server localhost:9092

On terminal number 3, start the consumer on the topic ‘output-topic’, which is where our Java code forwards the filtered messages:

    $ bin/kafka-console-consumer.sh --topic output-topic --from-beginning --bootstrap-server localhost:9092

You will notice that any line containing the word “filter” does not appear in the output-topic, as shown in the screenshot above.
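To predict which typed lines will reach the output topic, the same predicate can be tried on an ordinary list without a running cluster. This is a plain Java sketch with hypothetical sample lines; it reuses the lambda body from the Streams snippet but replaces the KStream with a `java.util.stream` pipeline.

```java
import java.util.List;
import java.util.stream.Collectors;

// Illustrative only: the Streams filter predicate applied to an in-memory list.
class FilterPredicateSketch {
    // Same condition as the lambda passed to textLines.filter(...).
    static boolean keep(String value) {
        return !value.toLowerCase().contains("filter");
    }

    static List<String> applyFilter(List<String> lines) {
        return lines.stream()
            .filter(FilterPredicateSketch::keep)
            .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> typed = List.of(
            "first event",
            "please filter me",
            "Filter with a capital F",
            "last event");
        // Lines containing "filter" in any letter case are dropped.
        System.out.println(applyFilter(typed));
    }
}
```

Because the predicate lowercases the value first, a line such as "Filter with a capital F" is dropped as well, which is worth keeping in mind when checking the consumer output.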

Learn more about Kafka architecture

Simplifying Apache Kafka management with Instaclustr

Apache Kafka has emerged as a popular distributed streaming platform for building real-time data pipelines and streaming applications. However, managing and operating Kafka clusters can be complex and resource-intensive. This is where Instaclustr comes into play.

Instaclustr simplifies Apache Kafka management and helps organizations focus on their core business objectives. Within a few clicks or API calls, you can provision Kafka clusters on your preferred cloud provider or on-premises infrastructure. This convenience extends to real-time monitoring, where you get immediate insights into your cluster metrics, such as throughput, latency, and partition lag. Furthermore, Instaclustr takes responsibility for keeping your Kafka clusters updated, handling automated maintenance, and applying patches as required.

- Simple Deployment: Instaclustr provides a seamless experience for deploying Apache Kafka clusters on your desired platform.

- Real-Time Monitoring: Comprehensive monitoring capabilities provide insights into cluster metrics to ensure smooth operations.

- Maintenance Ease: Instaclustr takes care of automated maintenance and upgrades, and ensures your clusters run on the latest stable versions.

Not only does Instaclustr simplify management complexities, but it also helps you enhance data security. Encryption at rest and in transit, access controls, authentication mechanisms, and assistance in meeting compliance requirements are all part of the robust security features Instaclustr offers. Additionally, it ensures data resilience through built-in disaster recovery and high availability features, minimizing the risk of data loss.

In essence, Instaclustr offers a comprehensive managed service for Apache Kafka, enabling businesses to harness the power of Kafka without the hassle of infrastructure management. Reduce costs, enhance security, and streamline operations with Instaclustr.

For more information visit our Instaclustr for Apache Kafka page or check out the following blogs: