What Are Incremental Repairs?

Repairs allow Apache Cassandra users to fix inconsistencies in writes between different nodes in the same cluster. These inconsistencies can happen when one or more nodes fail. Because of Cassandra’s peer-to-peer architecture, Cassandra will continue to function (and return correct results within the promises of the consistency level used), but eventually, these inconsistencies will still need to be resolved. Not repairing, and therefore allowing data to remain inconsistent, creates significant risk of incorrect results arising when major operations such as node replacements occur. Cassandra repairs compare data sets and synchronize data between nodes.

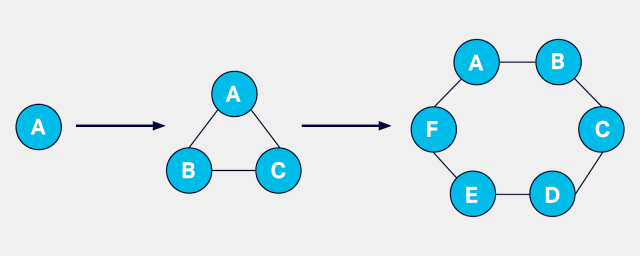

Cassandra has a peer-to-peer architecture. Each node is connected to all the other nodes in the cluster, so there is no single point of failure in the system should one node fail.

As described in the Cassandra documentation on repairs, full repairs look at all of the data being repaired in the token range (in Cassandra, partition keys are converted to a token value using a hash function). Incremental repairs, on the other hand, look only at the data that’s been written since the last incremental repair. By using incremental repairs on a regular basis, Cassandra operators can reduce the time it takes to complete repairs.

A History of Incremental Repairs

Incremental repairs were introduced in Apache Cassandra 2.1. With the release of Cassandra 2.2, they became the default repair mechanism.

But by the time of the release of Cassandra 3.0 and 3.11, incremental repairs were no longer recommended to the user community due to various bugs and inconsistencies. Fortunately, Cassandra 4.0 has changed this by resolving many of these bugs.

Resolving Cassandra Incremental Repair Issues

One such bug concerned how Cassandra marks which SSTables have been repaired (it was ultimately addressed in CASSANDRA-9143). This bug caused overstreaming, which clogged Cassandra's communication channels and slowed down the entire system.

Another fix came in CASSANDRA-10446, which allows forced repairs even when some replicas are down. It is also now possible to run incremental repairs when nodes are down (this was addressed in CASSANDRA-13818).
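As a rough illustration of a forced repair, the sketch below builds a `nodetool repair` invocation with the `--force` option, which tells repair to skip replicas that are down. The function name `build_repair_cmd`, the `KEYSPACE` and `DRY_RUN` variables, and the keyspace `my_keyspace` are assumptions made for this example, not part of Cassandra. By default the script only prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Sketch only: build_repair_cmd, KEYSPACE, and DRY_RUN are hypothetical names.

# Print the repair command for a keyspace.
# Pass "force" as the second argument to add --force (skip down replicas).
build_repair_cmd() {
  local keyspace="$1"
  local mode="${2:-}"
  local cmd="nodetool repair"
  if [ "$mode" = "force" ]; then
    cmd="$cmd --force"
  fi
  printf '%s %s\n' "$cmd" "$keyspace"
}

KEYSPACE="${KEYSPACE:-my_keyspace}"   # placeholder keyspace name
DRY_RUN="${DRY_RUN:-1}"               # 1 = print only, anything else = execute

cmd="$(build_repair_cmd "$KEYSPACE" force)"
if [ "$DRY_RUN" = "1" ]; then
  echo "would run: $cmd"
else
  $cmd
fi
```

Setting `DRY_RUN=0` runs the command on a node where `nodetool` is available. Data on the replicas that were down is not repaired by a forced run, so those ranges still need a later repair.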

Other changes came with CASSANDRA-14939, including:

- The user can see if pending repair data exists for a specific token range

- The user can force the promotion or demotion of data for completed sessions rather than waiting for compaction

- The user can get the most recent repairedAt timestamp for a specific token range
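The three capabilities above are exposed through `nodetool repair_admin` in Cassandra 4.0. The sketch below maps each one to what we understand to be the matching subcommand (`summarize-pending`, `cleanup`, and `summarize-repaired`). The helper `repair_admin_cmd`, the action names, and the `DRY_RUN` variable are hypothetical names for this example. Token range options are omitted, so check `nodetool help repair_admin` on your version for the exact arguments.

```shell
#!/usr/bin/env bash
# Sketch only: repair_admin_cmd and DRY_RUN are hypothetical names.

# Map a short action name to a `nodetool repair_admin` subcommand.
repair_admin_cmd() {
  case "$1" in
    pending)  echo "nodetool repair_admin summarize-pending" ;;   # pending repair data
    cleanup)  echo "nodetool repair_admin cleanup" ;;             # promote/demote completed sessions
    repaired) echo "nodetool repair_admin summarize-repaired" ;;  # most recent repairedAt
    *)        echo "unknown action: $1" >&2; return 1 ;;
  esac
}

DRY_RUN="${DRY_RUN:-1}"   # 1 = print only, anything else = execute

# Only the read-only summaries are looped over here; cleanup changes state,
# so it is left for the operator to run deliberately.
for action in pending repaired; do
  cmd="$(repair_admin_cmd "$action")"
  if [ "$DRY_RUN" = "1" ]; then
    echo "would run: $cmd"
  else
    $cmd
  fi
done
```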

Incremental repairs are the default repair option in Cassandra. To run incremental repairs, use the following command:

    nodetool repair

If you want to run a full repair instead, use the following command:

    nodetool repair --full

Similar to Cassandra 4.0 diagnostic repairs, incremental repairs are intended primarily for nodes in a self-supported Cassandra cluster. If you are an Instaclustr Managed Cassandra customer, repairs are included as a part of your deployment, which means you don’t need to worry about day-to-day repair tasks like these.

In Summary

While these improvements should greatly increase the reliability of incremental repairs, we recommend a cautious approach to enabling them in production, particularly if you have been running subrange repairs on an existing cluster. Repairs are a complex operation, and the impact of different approaches can depend significantly on the state of your cluster when you start the operation and even on the data model that you are using. To learn more about Cassandra 4.0, contact our Cassandra experts for a free consultation or sign up for a free trial of the Instaclustr Managed Cassandra service.
