What is ClickHouse?

ClickHouse is an open source columnar database management system for online analytical processing (OLAP) and real-time data analytics. Developed by Yandex, it handles large volumes of data with high-speed query performance. The system reduces data processing time while increasing query execution speed.

The efficiency of ClickHouse stems from its columnar design, which allows for data compression and optimization, significantly accelerating data retrieval processes. This architecture is particularly useful for querying data where only specific columns are required, saving time and computing resources. ClickHouse also supports SQL queries, making it accessible and familiar to users with SQL experience.

This is part of an extensive series of guides about data security.

ClickHouse products

ClickHouse offers three variants of its offering: an open source database, a cloud service and a Bring Your Own Cloud (BYOC) solution.

ClickHouse (Open Source Database)

ClickHouse is a high-performance, open source columnar database for real-time analytical processing. It processes large datasets and runs complex SQL queries at high speed, using its columnar architecture to achieve significantly faster query performance.

One of the key strengths of ClickHouse is its scalability. It can scale both vertically, by adding more resources to a single machine, and horizontally, across clusters with hundreds or even thousands of nodes. This makes it suitable for everything from small projects running on a laptop to massive deployments handling trillions of rows of data.

The database is feature-rich, supporting advanced SQL operations like joins and federated queries, while maintaining reliability with asynchronous replication and multi-datacenter deployment capabilities.

ClickHouse Cloud

ClickHouse Cloud is a managed, serverless version of the ClickHouse database, offering the same high-speed performance as the open source version but without the need for users to manage infrastructure. It allows users to deploy a ClickHouse environment in seconds, scaling automatically to match workload demands without manual intervention.

This eliminates the operational overhead of tasks like sizing, scaling, and upgrades, enabling developers to focus on data insights instead of infrastructure management. With features like an interactive SQL console, ClickHouse Cloud provides an easy interface for connecting, querying, and visualizing data, making it accessible for users without deep technical expertise.

It also ensures secure data handling, being SOC 2 Type II compliant, and offers automated backups, replication, and disaster recovery to ensure high availability and reliability.

Bring Your Own Cloud (Private Preview)

Bring Your Own Cloud (BYOC) is a ClickHouse Cloud deployment model currently available in private preview on AWS. It allows users to run a fully managed version of ClickHouse within their own AWS environment, offering the benefits of cloud infrastructure while ensuring compliance with strict data residency and regulatory requirements.

By using the BYOC model, organizations maintain control over their data within their own Virtual Private Cloud (VPC), eliminating potential concerns about data transfer and compliance.

ClickHouse architecture

ClickHouse is a columnar database management system optimized for real-time analytics. Its architecture is designed to execute queries at high speed by leveraging vectorized processing and efficient use of CPU and memory.

Below are the core components of the ClickHouse architecture:

- Column-oriented storage: Data is stored in columns rather than rows, allowing for more efficient data retrieval and compression, particularly for analytical queries where only specific columns are needed.

- Vectorized query execution: Queries are processed in batches of data (arrays or vectors), enabling faster execution by minimizing the overhead of handling individual values and maximizing CPU efficiency through SIMD (single instruction, multiple data) operations.

- IColumn interface: Columns are represented in memory using the IColumn interface, which allows relational operators to be applied without modifying the original data. Various implementations, such as

ColumnUInt8andColumnString, handle different data types efficiently in memory. - Field representation: While ClickHouse primarily works with columns, it also provides the Field class for individual value manipulation, supporting types like integers, floats, strings, and arrays. However, this approach is less efficient for bulk processing.

- IDataType interface: Responsible for serialization and deserialization of data, IDataType defines how data types like

UInt32orDateTimeare handled in binary and text formats, enabling efficient storage and retrieval. - Block structure: Blocks are containers that hold chunks of data during query execution. Each block contains a set of columns with their respective data types and names. Operations on data are applied immutably, adding new columns without modifying existing ones.

- Processors: Processors handle the flow of data within queries. They execute operations like filtering, aggregation, or transformation, allowing ClickHouse to process large datasets in parallel.

- I/O buffers: ClickHouse uses ReadBuffer and WriteBuffer classes for handling input and output operations at the byte level. These buffers are optimized for performance, handling tasks like file reading, compression, and network communication efficiently.

- Tables and storage engines: The IStorage interface represents tables in ClickHouse, with different storage engines like

MergeTreehandling data organization and retrieval. Replication and other advanced features are managed by specific implementations likeReplicatedMergeTree. - Distributed query execution: In cluster setups, ClickHouse can distribute queries across multiple nodes, minimizing network overhead by processing data locally and merging results centrally.

ClickHouse use cases

Real-time analytics

Organizations use ClickHouse for applications needing rapid data aggregation and reporting, such as financial systems, IoT analytics, and website performance tracking. The ability to analyze non-aggregated data on-the-fly meets the demands of organizations requiring immediate insights to inform critical decision-making.

The columnar database’s design and distributed architecture allow concurrent workloads, essential for real-time data streams that include multiple query requests. It supports time-series data analysis and event sourcing, optimized for fast query responses even with complex queries over vast datasets.

Machine learning and GenAI

ClickHouse offers support for machine learning (ML) and generative AI (GenAI) workloads, with capabilities that enable modeling and data preprocessing. By efficiently handling large datasets, ClickHouse enables the preparation and transformation of data for training ML models. It supports advanced queries and aggregations for feature engineering.

Data scientists and ML engineers use it to develop and refine predictive models. The database’s performance optimizations ensure ML pipelines run smoothly, providing the speed needed for quick iterations and adjustments. Users can perform exploratory data analysis (EDA) directly within ClickHouse, helping identify patterns and correlations critical for model accuracy.

Business intelligence

Business intelligence (BI) applications benefit from ClickHouse’s ability to handle large-scale data processing and deliver fast query results. It supports dashboards and data visualizations, offering users near real-time insights into operational metrics and KPIs. BI tools integrated with ClickHouse allow users to analyze trends, patterns, and historical data.

The fast execution speeds and parallel processing capabilities ensure the swift generation of reports, supporting various business functions like sales forecasting, supply chain optimization, and customer analytics. With dynamic, ad-hoc queries, ClickHouse allows business users to explore data autonomously, derive actionable insights, and adapt strategies.

Logs, events, and traces

ClickHouse is useful for managing logs, events, and traces, supporting systems that require real-time analysis of operation and performance data. It helps track application behavior, diagnose issues, and improve system performance by analyzing vast amounts of logs quickly.

For example, ClickHouse is used in observability stacks, where rapid indexing and querying of logs and traces are critical for maintaining smooth operations and preemptively addressing potential disruptions. The database’s ability to handle structured and semi-structured data efficiently makes it useful in environments with extensive logging and monitoring demands.

Tips from the expert

Merlin Walter

Solution Engineer

With over 10 years in the IT industry, Merlin Walter stands out as a strategic and empathetic leader, integrating open source data solutions with innovations and exhibiting an unwavering focus on AI's transformative potential.

In my experience, here are tips that can help you better utilize ClickHouse for large-scale analytics and high-performance workloads:

- Compress data using optimal codecs: ClickHouse offers various compression codecs like ZSTD, LZ4, and Delta. Choose codecs based on your data patterns (e.g., Delta for numeric time-series data) to minimize storage footprint and boost query speed.

- Avoid overusing partition keys: In most situations, a partition key is unnecessary, and if you do need one, partitioning by month is often sufficient.

- Limit partition granularity: Avoid setting highly specific partitions, such as by individual client IDs or names. Instead, include the client ID or name as the first column in your ORDER BY clause for better performance.

- Use query execution plans to improve performance: Utilize the EXPLAIN command to review how ClickHouse executes queries. This analysis can reveal if excessive table scans or inefficient joins are causing unnecessary data retrieval, allowing you to optimize accordingly.

ClickHouse Cloud pricing

ClickHouse Cloud offers three pricing tiers tailored to different workloads. Each pricing plan also offers a free 30-day trial.

Development:

- Suitable for smaller workloads, pricing ranges from $1 to $193 per month, depending on usage.

- Includes up to 1 TB of storage, 16 GiB of memory, and a burstable CPU.

- Features include daily backups retained for 1 day, replication across two availability zones (AZs), and expert support with a 1-day response time.

- Additional features like AWS Private Link and automatic scaling are available, but certain advanced options like customer-managed encryption keys (CMEK) are excluded.

- Storage costs $35.33 per TB per month, and compute is priced at $0.2160 per unit per hour (with one unit equaling 16 GiB RAM and 2 vCPUs).

Production:

- Provides unlimited storage and 24 GiB or more of memory, along with a dedicated CPU.

- Backups are retained for 2 days, and data is replicated across three AZs for enhanced availability.

- Users receive 24/7 support for critical issues, automatic scaling, and more advanced features like customer-managed encryption keys.

- Storage is priced at $47.08 per TB per month, while compute costs $0.6894 per unit per hour (one unit equaling 24 GiB RAM and 6 vCPUs).

Dedicated:

- Suitable for the demanding, latency-sensitive workloads, this tier offers capacity-based pricing.

- Features include custom compute options, a dedicated environment, advanced security, scheduled upgrades, and uptime SLAs.

- Customers benefit from consultative migration guidance and a named lead support engineer.

- Pricing details are available on request.

Tutorial: ClickHouse open source quick start

This tutorial provides a step-by-step guide to setting up ClickHouse locally and executing basic operations, from downloading the binary to running SQL queries. These instructions are adapted from the official documentation.

1. Download the binary

ClickHouse runs natively on Linux, FreeBSD, and macOS. For Windows, you can use WSL (Windows Subsystem for Linux). To download and install the appropriate binary for your system, simply run the following command in the terminal:

|

1 |

curl https://clickhouse.com/ | sh |

This command automatically determines the operating system and downloads the correct version of ClickHouse.

2. Start the server

After downloading the binary, navigate to its location and start the ClickHouse server with the following command:

|

1 |

./clickhouse server |

3. Connect using the client

Open a new terminal window and run the ClickHouse client to connect to the server. Change to the directory where the binary is located and execute:

|

1 |

./clickhouse client |

Upon successful connection, you’ll see a smiling face in the terminal, indicating that the ClickHouse client is ready for queries.

4. Create a table

To create a table, you can use standard SQL DDL commands with an additional ENGINE clause. Here’s an example that defines a basic table using the MergeTree engine:

|

1 2 3 4 5 6 7 8 9 10 |

CREATE TABLE my_example_table ( user_id UInt32, message String, timestamp DateTime, metric Float32 ) ENGINE = MergeTree PRIMARY KEY (user_id, timestamp); |

h3>5. Add data to the table

ClickHouse supports the familiar INSERT INTO command, but to optimize performance, it’s recommended to insert data in bulk. For example:

|

1 2 3 4 5 6 |

INSERT INTO website_visits (user_id, page_url, visit_time, duration_seconds) VALUES (201, 'https://example.com/home', now(), 120), (202, 'https://example.com/about', yesterday(), 45), (203, 'https://example.com/contact', today(), 15), (201, 'https://example.com/products', now() + 60, 180); |

6. Query the table

Now that data is inserted, you can run SQL queries to retrieve it. For example, to select all records and order them by timestamp:

|

1 2 3 |

SELECT * FROM my_example_table ORDER BY timestamp; |

The results will be displayed in a formatted table in the terminal.

7. Ingest the latest data</h3<

ClickHouse supports various methods for importing data, such as from S3, GCS, PostgreSQL, MySQL, or local files. Here’s an example of reading data from an S3 file directly into a table:

|

1 2 3 4 5 6 |

INSERT INTO my_table SELECT * FROM s3( 'https://datasets-documentation.s3.eu-west-3.amazonaws.com/nyc-taxi/trips_0.gz', 'TabSeparatedWithNames' ) SETTINGS input_format_allow_errors_num=20000; |

ClickHouse limitations

While ClickHouse offers impressive speed and efficiency for analytical workloads, it has some limitations that users should be aware of:

- Steep learning curve: ClickHouse requires a steep learning curve, especially for users transitioning from traditional databases like MySQL or Oracle. Users must familiarize themselves with ClickHouse-specific features such as partitioning, sharding, and replication, which can be challenging to implement effectively. Zookeeper is required for managing distributed setups, adding another layer of complexity.

- Limited support for updates and deletes: Unlike traditional databases, ClickHouse is not optimized for frequent updates and deletes. It is designed for insert-heavy workloads and performs best when data is appended rather than modified. Users accustomed to handling dynamic datasets in other databases may find ClickHouse’s approach cumbersome, especially when dealing with use cases that require regular data changes.

- Materialized views and custom functions: ClickHouse supports materialized views, which can greatly improve query performance. However, they currently only support single joins, limiting their flexibility in more complex queries. Additionally, the lack of support for custom functions makes it difficult to extend the system’s capabilities based on specific needs. This limitation can frustrate users who want to create tailored functions for more advanced operations.

- Operational complexity: Managing and configuring ClickHouse, particularly in distributed environments, can be difficult. Issues with Zookeeper and replication, as well as the intricacies of managing large clusters, can lead to instability if not handled carefully. This makes it less predictable compared to more mature database systems, which may offer smoother experiences in production setups.

Notable ClickHouse alternatives

Here are a few solutions that are commonly considered as alternatives to ClickHouse.

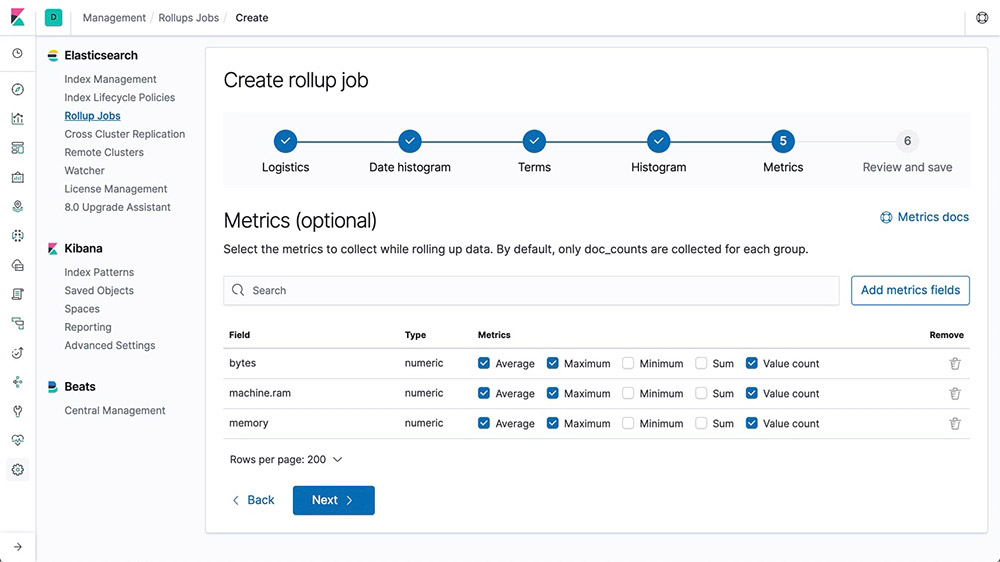

Elasticsearch

Elasticsearch is a distributed, RESTful search and analytics engine that serves as the backbone of the Elastic Stack. It can store, search, and analyze large volumes of data quickly, supporting use cases such as full-text search, log and infrastructure monitoring, and data analysis. It is capable of handling structured, unstructured, and geospatial data.

Key features of Elasticsearch:

- Distributed search engine: Scales horizontally, from a single node to hundreds of nodes, enabling it to handle vast amounts of data and high query volumes.

- Flexible query capabilities: Supports various types of searches, including structured, unstructured, metric, and geo-based queries, giving users the flexibility to ask complex questions of their data.

- Fast performance: Uses optimized data structures like inverted indices and BKD trees, delivering near-instant search results even on massive datasets.

- Aggregations: Allows for the analysis of large-scale data by aggregating and visualizing trends, patterns, and metrics, making it suitable for real-time analytics.

- Elasticsearch relevance engine (ESRE): Designed for AI-based search applications, ESRE enables semantic search, integrates with large language models, and supports hybrid and transformer model searches.

Source: Elastic

Snowflake

Snowflake is a fully managed cloud-based data platform for diverse workloads such as data warehousing, analytics, AI, and application development. It allows organizations to unify, manage, and analyze structured and unstructured data at various scales.

Key features of Snowflake:

Flexible architecture: Its architecture eliminates data silos, allowing seamless access to structured, semi-structured, and unstructured data.

- Elastic compute: Users can dynamically scale compute resources based on the workload, ensuring efficient resource usage for streaming pipelines, analytics, AI, and interactive applications.

- Unified data governance: The Horizon Catalog provides built-in compliance, security, privacy, and collaboration capabilities across all regions, clouds, and workloads.

- Cross-cloud collaboration: Snowgrid enables easy data sharing and collaboration across different clouds without the need for complex ETL processes.

- Optimized storage: Snowflake automatically compresses and manages data, ensuring fast access and secure storage with minimal manual intervention.

Source: Snowflake

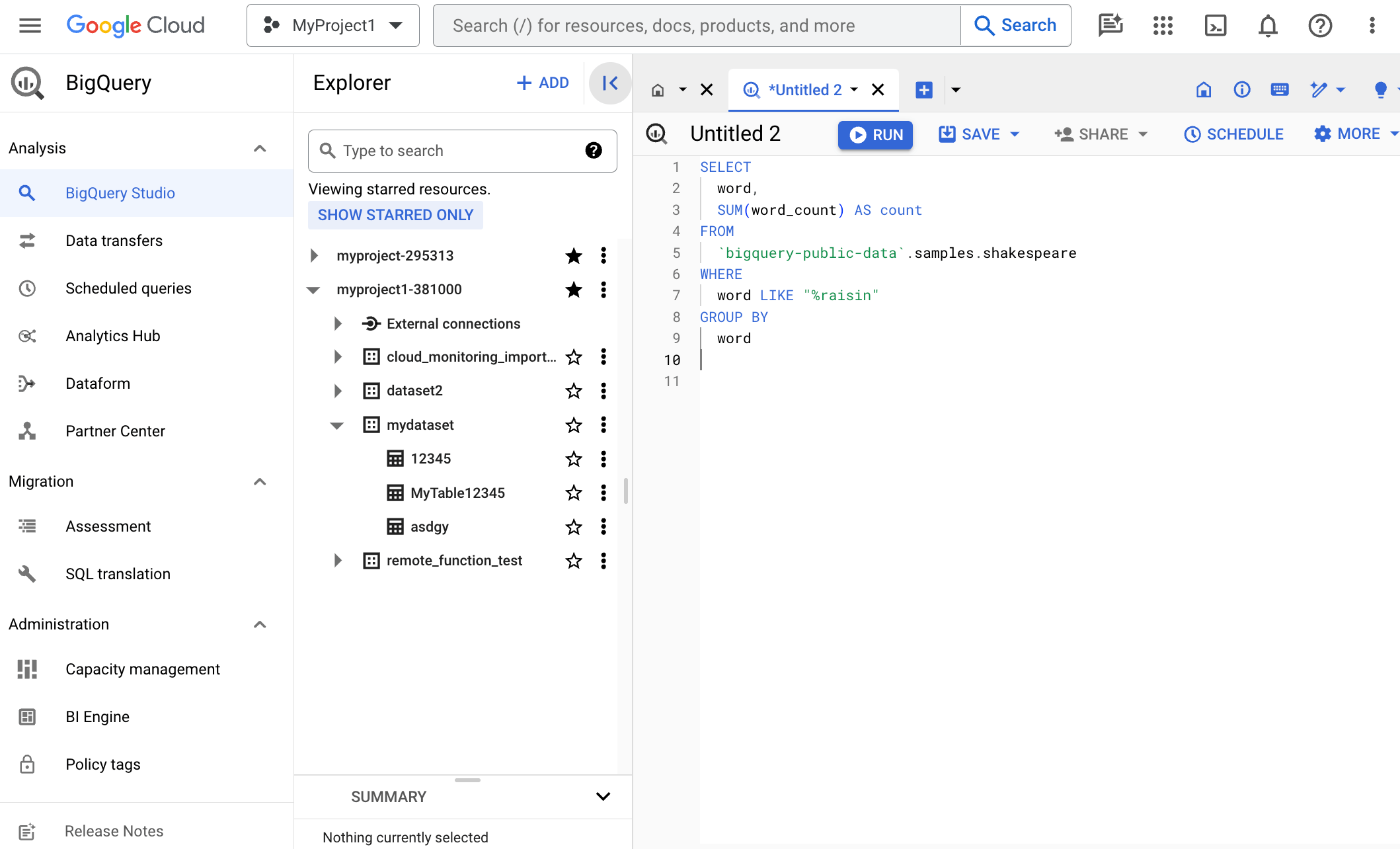

Google Cloud BigQuery

![]()

Google Cloud BigQuery is a fully managed, serverless data platform for fast and scalable data analysis. It supports both structured and unstructured data, allowing organizations to perform advanced analytics, machine learning, and geospatial analysis without the need for infrastructure management.

Key features of BigQuery:

- Serverless architecture: Automatically manages infrastructure, freeing users from provisioning and scaling resources. It allows users to focus solely on analyzing their data.

- Separation of storage and compute: Its architecture separates data storage and processing, ensuring high availability and performance without resource conflicts.

- Built-in machine learning: Users can create and run machine learning models directly on their data without moving it to external tools.

- Streaming and batch data ingestion: Supports both continuous data ingestion through the Storage Write API and batch uploads from various sources, such as Google Cloud Storage and local files.

- ANSI SQL support: Offers full support for SQL, including joins, nested fields, and advanced aggregation, allowing users to query data easily using familiar syntax.

Source: Google

PostgreSQL®

PostgreSQL is an open source object-relational database system known for its reliability, scalability, and extensive feature set. It supports SQL and object-relational functionalities, making it suitable for managing complex data workloads.

Key features of PostgreSQL:

- Data types: Supports a range of data types, including primitive types (Integer, Boolean), structured types (Array, Date/Time), document types (JSON/JSONB, XML), and custom-defined types.

- Data integrity and constraints: Ensures data accuracy through primary keys, foreign keys, unique constraints, and exclusion constraints, helping to maintain robust data relationships.

- Concurrency and performance: Uses Multi-Version Concurrency Control (MVCC) to handle simultaneous transactions without locking issues and offers advanced indexing methods like B-tree, GiST, GIN, and BRIN for optimized query performance.

- Scalability: Efficiently manages large datasets, scaling vertically and horizontally. PostgreSQL can handle terabytes to petabytes of data while accommodating many concurrent users.

- Extensibility: Allows users to define custom data types, functions, and even integrate different procedural languages like PL/pgSQL, Python, Perl, and more through extensions.

Source: PostgreSQL

The benefits of Instaclustr for ClickHouse

Instaclustr provides a range of benefits for ClickHouse, making it an excellent choice for organizations seeking efficient and scalable management of these deployments. With its managed services approach, Instaclustr simplifies the deployment, configuration, and maintenance of ClickHouse, enabling businesses to focus on their core applications and data-driven insights.

Some of these benefits are:

- Infrastructure provisioning, configuration, and security, ensuring that organizations can leverage the power of this columnar database management system without the complexities of managing it internally. By offloading these operational tasks to Instaclustr, organizations can save valuable time and resources, allowing them to focus on utilizing ClickHouse to its full potential.

- Seamless scalability to meet growing demands. With automated scaling capabilities, ClickHouse databases can expand or contract based on workload requirements, ensuring optimal resource utilization and cost efficiency. Instaclustr’s platform actively monitors the health of the ClickHouse cluster and automatically handles scaling processes, allowing organizations to accommodate spikes in traffic and scale their applications effectively.

- High availability and fault tolerance for ClickHouse databases. By employing replication and data distribution techniques, Instaclustr ensures that data is stored redundantly across multiple nodes in the cluster, providing resilience against hardware failures and enabling continuous availability of data. Instaclustr’s platform actively monitors the health of the ClickHouse cluster and automatically handles failover and recovery processes, minimizing downtime and maximizing data availability for ClickHouse deployments.

Furthermore, Instaclustr’s expertise and support are invaluable for ClickHouse databases. Our team of experts has deep knowledge and experience in managing and optimizing ClickHouse deployments. We stay up-to-date with the latest advancements in ClickHouse technologies, ensuring that the platform is compatible with the latest versions and providing customers with access to the latest features and improvements. Instaclustr’s 24/7 support ensures that organizations have the assistance they need to address any ClickHouse-related challenges promptly.

For more information:

See Additional Guides on Key Data Security Topics

Together with our content partners, we have authored in-depth guides on several other topics that can also be useful as you explore the world of data security.

Authored by Instaclustr

- [Guide] Quick guide to Apache Spark: Benefits, use cases, and tutorial

- [Guide] Apache Spark architecture: Concepts, components, and best practices

- [Blog] Debugging Jobs in the Apache Spark™ UI

- [Product] NetApp Instaclustr Data Platform | Open-Source Data Infrastructure Platform

Authored by Instaclustr

- [Guide] Complete guide to PostgreSQL: Features, use cases, and tutorial

- [Blog] How to Get the Most out of Your Kafka Cluster

- [Product] NetApp Instaclustr Data Platform

Authored by CyCognito

- [Guide] What Is Continuous Threat Exposure Management (CTEM)?

- [Guide] What Are Security Controls: Types, Functions, and 8 Frameworks to Know

- [WhitePaper] Exposure Management: The Definitive Guidebook for the Security Practitioner

- [Product] CyCognito Attack Surface Management