This is the second part of our “Exploring Karapace—the Open Source Apache Kafka Schema Registry” blog series, where we continue to get up to speed with Platonic Forms (aka Schemas) in the form of Apache Avro, which is one of the Schema Types supported by Karapace. In this part we try out Avro IDL, come up with a Schema for some complex tidal data (and devise a scheme to generate a Schema from POJOs), and perfect our Schema with the addition of an Avro Logical Type for the Date field—thereby achieving close to Platonic perfection (but that’s just an idea).

1. Avro Interface Definition Language (IDL)

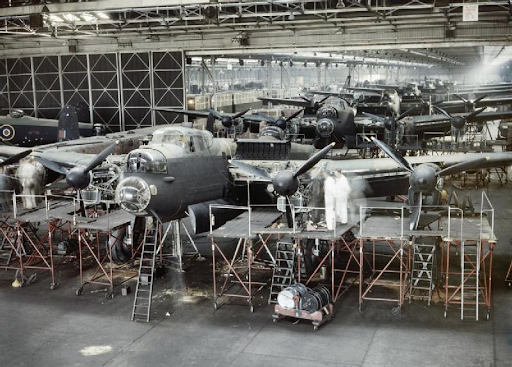

Apache Avro was named after the British AVRO aircraft manufacturer, perhaps most famous for their Avro Lancaster WWII 4-engine heavy bomber. They were big, complex planes but had to be built as quickly as possible, as can be seen in this picture of construction in a factory.

Avro Lancaster bombers being constructed in a factory

(Source: https://upload.wikimedia.org)

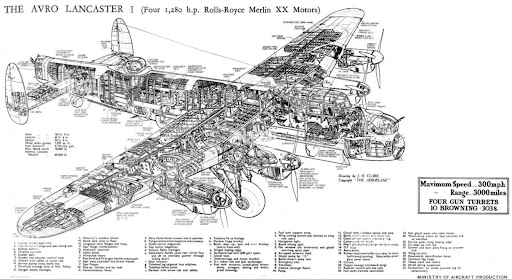

To build them you would need a plan—something to describe or define exactly what and how to build them. Here’s an Avro Lancaster cut-away diagram showing internal details—an early version of an Avro Interface Definition Language (IDL) perhaps:

If you think that the Avro JSON schema definition language that we explored in the previous blog seems a bit verbose and unintelligible, you are not alone! Avro actually has a high-level Interface Definition Language, Avro IDL, which allows you to specify the schema in a Domain Specific Language (DSL), and automatically generate the Avro JSON Schema. Here’s an example using the idl command of Avro tools (java -jar avro-tools-1.11.1.jar idl). Run like this, it reads the IDL that you type in as input and, when you hit Ctrl-D, prints the generated Avro JSON as output:

Input:

```
@namespace("example.avro")
protocol MyProtocol {
  record PlatonicSolid {
    string figure;
    int faces;
    int vertices;
    union { null, float } volume;
  }
}
```

Output:

```json
{
  "protocol" : "MyProtocol",
  "namespace" : "example.avro",
  "types" : [
    {
      "type" : "record",
      "name" : "PlatonicSolid",
      "fields" : [
        { "name" : "figure", "type" : "string" },
        { "name" : "faces", "type" : "int" },
        { "name" : "vertices", "type" : "int" },
        { "name" : "volume", "type" : [ "null", "float" ] }
      ]
    }
  ],
  "messages" : { }
}
```

Apparently the IDL is primarily designed for defining RPC calls, complete with error messages etc., as can be seen from the protocol-related fields. A standard Avro Schema can be produced by deleting the protocol and messages fields and lifting the record out of the types array (keeping the namespace), as follows:

```json
{
  "namespace" : "example.avro",
  "type" : "record",
  "name" : "PlatonicSolid",
  "fields" : [
    { "name" : "figure", "type" : "string" },
    { "name" : "faces", "type" : "int" },
    { "name" : "vertices", "type" : "int" },
    { "name" : "volume", "type" : [ "null", "float" ] }
  ]
}
```
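To get a feel for what a PlatonicSolid record looks like once it has been encoded with this Schema, here is a rough, hand-rolled sketch of Avro's binary encoding rules for the types involved (zigzag variable-length integers, length-prefixed UTF-8 strings, little-endian floats, and a branch index in front of a union value). It is only an illustration and does not use the Avro library, which is what you would use in practice. The class and method names are made up for this example, and the volume of 1.0 assumes a unit cube.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Illustrative only: a hand-written encoder for the PlatonicSolid Schema.
// Real code would use the Avro library's writers instead.
public class PlatonicSolidEncodingSketch {

    // Avro ints and longs are zigzag-encoded, then written 7 bits at a time.
    static void writeLong(ByteArrayOutputStream out, long n) {
        long v = (n << 1) ^ (n >> 63);
        while ((v & ~0x7FL) != 0) {
            out.write((int) ((v & 0x7F) | 0x80)); // high bit set: more bytes follow
            v >>>= 7;
        }
        out.write((int) v);
    }

    // Strings are a length (as a long) followed by the UTF-8 bytes.
    static void writeString(ByteArrayOutputStream out, String s) {
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);
        writeLong(out, utf8.length);
        out.write(utf8, 0, utf8.length);
    }

    // Floats are 4 bytes, little-endian.
    static void writeFloat(ByteArrayOutputStream out, float f) {
        byte[] b = ByteBuffer.allocate(4).order(ByteOrder.LITTLE_ENDIAN).putFloat(f).array();
        out.write(b, 0, b.length);
    }

    // A record is just its fields, written in Schema order.
    static byte[] encode(String figure, int faces, int vertices, Float volume) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, figure);
        writeLong(out, faces);
        writeLong(out, vertices);
        if (volume == null) {
            writeLong(out, 0);        // union branch 0 (null) has no payload
        } else {
            writeLong(out, 1);        // union branch 1 (float)
            writeFloat(out, volume);
        }
        return out.toByteArray();
    }

    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append(String.format("%02x ", b));
        }
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        // Cube: 6 faces, 8 vertices; volume 1.0 is an assumed unit cube
        System.out.println(toHex(encode("cube", 6, 8, 1.0f)));
    }
}
```

For the cube this prints 08 63 75 62 65 0c 10 02 00 00 80 3f, which is just 12 bytes. There are no field names in the data, which is why a reader needs the Schema to make sense of it.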

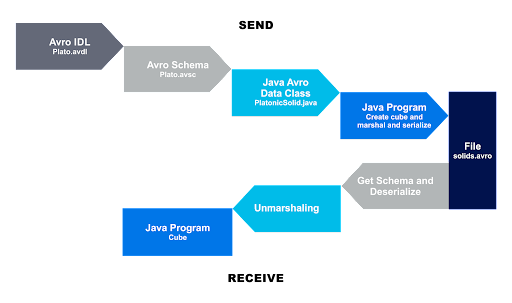

This enables the following full process starting from an IDL file and ending up with data encoded in Avro, and back again to a copy of our original Platonic Solid, the Cube:

2. Avro NOAA Tidal Data Example

Large waves crashing against cliffs at Cape Disappointment on the Washington coast during a King Tide (Source: Shutterstock)


What does the Avro Schema look like for a more realistic example? Let’s try a Schema for the NOAA tidal data from the previous Kafka Connect real-time data processing pipeline series (part 1 here). Here’s a sample of the tide data in JSON format:

```json
{
  "metadata": {
    "id": "8724580",
    "name": "Key West",
    "lat": "24.5508",
    "lon": "-81.8081"
  },
  "data": [
    {
      "t": "2020-09-24 04:18",
      "v": "0.597",
      "s": "0.005",
      "f": "1,0,0,0",
      "q": "p"
    }
  ]
}
```

As you can see, it’s a hierarchical data type with a metadata object and a data array that can hold multiple readings. Here’s the Avro JSON Schema that I came up with:

```json
{
  "type": "record",
  "name": "TideData",
  "namespace": "example.avro",
  "fields": [
    {
      "name": "data",
      "type": {
        "type": "array",
        "items": {
          "type": "record",
          "name": "TideData",
          "fields": [
            { "name": "f", "type": "string" },
            { "name": "q", "type": "string" },
            { "name": "s", "type": "float" },
            { "name": "t", "type": "string" },
            { "name": "v", "type": "float" }
          ]
        },
        "java-class": "[Lexample.avro.TideData;"
      }
    },
    {
      "name": "metadata",
      "type": {
        "type": "record",
        "name": "MetaData",
        "fields": [
          { "name": "id", "type": "string" },
          { "name": "lat", "type": "float" },
          { "name": "lon", "type": "float" },
          { "name": "name", "type": "string" }
        ]
      }
    }
  ]
}
```

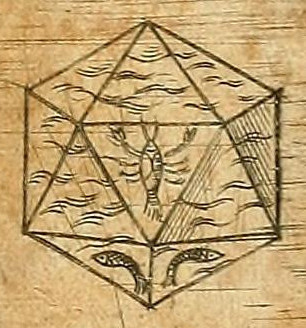

The Platonic Solid Icosahedron (20 faces, 30 edges and 12 vertices) was the form of the element water (Source: https://commons.wikimedia.org)


3. A POJO-First Schema Generation Approach

You may notice the rather odd looking field: “java-class”: “[Lexample.avro.TideData;”?

This actually gives away the fact that I didn’t really write the schema myself. Instead, I “cheated” and wrote a couple of Java POJO classes that looked about right, and then generated the schema. Here’s the example code to generate the Schema for a POJO (TideData.java, and all the dependent POJOs):

```java
package example.avro;

import org.apache.avro.Schema;
import org.apache.avro.reflect.ReflectData;

public class GenerateSchemaFromPOJO {
    public static void main(String[] args) {
        // Derive the Avro Schema from the POJO's fields using reflection
        Schema schema = ReflectData.get().getSchema(TideData.class);
        // Pretty-print the generated Schema as JSON
        System.out.println(schema.toString(true));
    }
}
```
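The POJOs themselves aren’t shown here, so the following is only a guess at what they might look like, based on the field names and types in the Schema. The class names follow the record names in the final Schema (TideData, Data and MetaData), the TidePojoSketch wrapper and its sample() method are hypothetical, and the package declaration is left out so that everything fits in one file.

```java
// Hypothetical POJOs mirroring the tide JSON. In a real project each would be
// a public class in its own file under the example.avro package.
class MetaData {
    String id;
    String name;
    float lat;
    float lon;
}

class Data {
    String t;   // observation time, still a plain string at this stage
    float v;
    float s;
    String f;
    String q;
}

class TideData {
    MetaData metadata;
    Data[] data;
}

public class TidePojoSketch {

    // Builds one TideData object from the sample JSON values shown earlier.
    // The JSON carries numbers as strings, so they are parsed into floats here.
    static TideData sample() {
        MetaData m = new MetaData();
        m.id = "8724580";
        m.name = "Key West";
        m.lat = Float.parseFloat("24.5508");
        m.lon = Float.parseFloat("-81.8081");

        Data d = new Data();
        d.t = "2020-09-24 04:18";
        d.v = Float.parseFloat("0.597");
        d.s = Float.parseFloat("0.005");
        d.f = "1,0,0,0";
        d.q = "p";

        TideData tide = new TideData();
        tide.metadata = m;
        tide.data = new Data[] { d };
        return tide;
    }

    public static void main(String[] args) {
        TideData tide = sample();
        System.out.println(tide.metadata.name + ": " + tide.data.length + " reading(s)");
    }
}
```

Plain fields with no getters or setters keep the sketch short. Whether you prefer that or a more conventional bean style is a design choice that the reflection-based generation above shouldn’t care much about, as it works from the fields.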

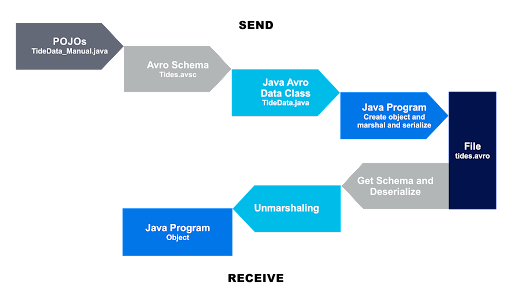

Once you have the generated Avro Schema, you can just delete the java-class line and use it as the starting point for the rest of the process. This Schema worked correctly as the input to the standard Avro serialization pipeline described above (ignoring the IDL stage). Generating it this way may be a practical alternative to starting with a Schema if you already have existing program code, or if you need a more code-centric approach to ensure adequate testing and debugging before generating the definitive Schema. Note that you can’t just drop manually created Java POJOs into that pipeline to serialize data, as they are missing the Avro-specific helper methods that classes generated from a Schema provide. (Avro’s reflect API can serialize POJOs directly, but that is a different route from the one used here.)

Here’s the alternative code-first flow:

4. Avro Logical Type Date Example

Finally, I noticed that I’d fudged the “t” field, which is actually a date/time value. I turned it from a string into a timestamp using an Avro logical type. Two details matter here. The logicalType attribute has to sit inside the type definition; placed at field level it is treated as an ordinary property and ignored. And the date logical type annotates an int (days since the epoch), so for a value that includes a time of day the matching choice on a long is timestamp-millis:

```json
{ "name": "t", "type": { "type": "long", "logicalType": "timestamp-millis" } }
```

Here’s the final version of the Schema:

```json
{
  "type": "record",
  "name": "TideData",
  "namespace": "example.avro",
  "fields": [
    {
      "name": "data",
      "type": {
        "type": "array",
        "items": {
          "type": "record",
          "name": "Data",
          "fields": [
            { "name": "f", "type": "string" },
            { "name": "q", "type": "string" },
            { "name": "s", "type": "float" },
            { "name": "t", "type": { "type": "long", "logicalType": "timestamp-millis" } },
            { "name": "v", "type": "float" }
          ]
        }
      }
    },
    {
      "name": "metadata",
      "type": {
        "type": "record",
        "name": "MetaData",
        "fields": [
          { "name": "id", "type": "string" },
          { "name": "lat", "type": "float" },
          { "name": "lon", "type": "float" },
          { "name": "name", "type": "string" }
        ]
      }
    }
  ]
}
```
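Once “t” is a long, the string in the raw tide data has to be converted to a number before it can be stored. Here is a minimal sketch of that conversion using only java.time. It assumes the timestamps are in UTC and that the long holds milliseconds since the epoch (the convention used by Avro’s timestamp-millis logical type). The class and method names are invented for this example.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

public class TideTimestampSketch {

    // Format of the "t" values in the sample tide data, e.g. "2020-09-24 04:18"
    private static final DateTimeFormatter FMT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm");

    // String -> milliseconds since the epoch (assuming the string is in UTC)
    static long toEpochMillis(String t) {
        return LocalDateTime.parse(t, FMT).toInstant(ZoneOffset.UTC).toEpochMilli();
    }

    // Milliseconds since the epoch -> string in the original format (UTC)
    static String fromEpochMillis(long millis) {
        return Instant.ofEpochMilli(millis).atOffset(ZoneOffset.UTC).format(FMT);
    }

    public static void main(String[] args) {
        long millis = toEpochMillis("2020-09-24 04:18");
        System.out.println(millis);                   // 1600921080000
        System.out.println(fromEpochMillis(millis));  // 2020-09-24 04:18
    }
}
```

If the source data turns out to be in local time rather than UTC, the ZoneOffset would need to change accordingly.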

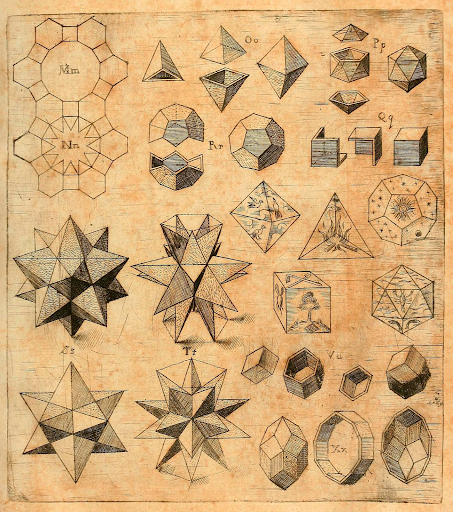

The above image is from Kepler’s book which shows the Platonic solids for all the elements, air, fire, earth, water, and “aether”, the 5th universal element

(Source: https://commons.wikimedia.org)

5. What is Next?

Now it’s time to descend from the lofty realms of Platonic Forms into the murky depths of the sea, where we will encounter terrifying creatures with exoskeletons (Carapaces), such as Crustaceans (Crabs, Lobsters, etc.). In the first 2 parts of this blog series we’ve introduced Apache Avro and have now reached a perfect (well, “good enough”) understanding of Schemas to proceed with our exploration of Karapace, the open source Apache Kafka Schema Registry starting in Part 3.

Why do millions of Christmas Island Red crabs cross the road? To take a dip in the sea, and find a mate! (The Red Crab Migration).

(Source: Shutterstock)


Follow the Karapace Series

- Part 1—Apache Avro Introduction with Platonic Solids

- Part 2—Apache Avro IDL, NOAA Tidal Example, POJOs, and Logical Types

- Part 3—Introduction, Kafka Avro Java Producer and Consumer Example

- Part 4—Auto Register Schemas

- Part 5 —Schema Evolution and Backward Compatibility

- Part 6 —Forward, Transitive, and Full Schema Compatibility
