Most science fiction allows for the possibility of both backward and forward time travel, typically using some clever invention (e.g., Police box, car, etc.). In this blog, we explore how Karapace, the open source schema registry for Apache Kafka®, enables many types of schema compatibility including Backward, Forward, and Transitive (time travel, from the start to the end of the universe?). Buckle your seat belts, as time travel is complicated and could introduce some unresolvable paradoxes!

“Back to the Future” – Forward and Backward time travel, by car!

(Source: Shutterstock)

1. All Compatibility Modes

What are all the Karapace schema compatibility options? The valid compatibility values are:

- NONE

- BACKWARD

- FORWARD

- FULL

- BACKWARD_TRANSITIVE

- FORWARD_TRANSITIVE

- FULL_TRANSITIVE

Oddly, there is no option for preventing schema changes, i.e., Plato’s view of Schemas (this is an option for Pulsar). NONE turns off checking and allows any change, the “All is flux”, Heraclitus mode. The default is BACKWARD, but how do you change it? Here’s an example of changing to FORWARD:

1 2 | curl -X PUT -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"compatibility": "FORWARD"}' karapaceURL:8085/config {"compatibility": "FORWARD"} |

I repeated my previous BACKWARD compatibility experiments with some of the other modes, including FORWARD, TRANSITIVE, and FULL with results summarized in the following table. The colour key is:

- Green for addition

- Red for deletions

- Orange for changes

- Blue for optional additions

- Pink for deleting optional fields

However, taking into account what we learned in the last blog, which was that you need a default value to actually make an Avro union field optional, I started by registering the following schema:

1 | curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"},{\"name\": \"length\", \"type\": [\"null\", \"float\"], \"default\": null }, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/subjects/evolution-value/versions |

The results look like this (I also double checked the Backward compatibility results which remained unchanged):

| Change Type | Backward | Forward | Backward Transitive | Forward Transitive | Full |

|---|---|---|---|---|---|

| Add field – no default | No | Yes | No | Yes | No |

| Delete type from union | No | Yes | No | Yes | No |

| Change field name or type | No | No | No | No | No |

| Delete field | Yes | No | Yes | No | No |

| Delete optional field | Yes | Yes | Yes | Yes | Yes |

| Add new value to union field | Yes | Yes | Yes | Yes | Yes |

| Add new optional field with default value | Yes | Yes | Yes | Yes | Yes |

A more concise summary is as follows, indicating if the change is compatible with Backward (←), Forward (→), both (Full compatibility, see below), or neither (X) modes:

| Change Type | Direction |

| Add field – no default | → |

| Delete type from union | → |

| Change field name or type | X |

| Delete field | ← |

| Delete optional field | ← → |

| Add new optional field with default value | ← → |

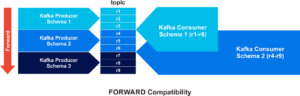

2. What Is Forward Compatibility?

Time travel into the near future with FORWARD compatibility.

(Source: Shutterstock)

First, let’s have a look at Forward compatibility. Apart from deleting and adding optional fields which are both Backward and Forward compatible, Forward compatible changes are complementary to what’s allowed for Backward compatibility, i.e., you can add a field, you can delete a union type, but you cannot delete a field. Why is this?

In the previous blog we discovered that a new schema is backward compatible with the previous schema if a consumer using the new schema can still read records written by the previous schema, i.e., events from the past. Forward compatibility just means that producers using the new schema can write records that consumers using the previous schema can read. Or, flipping it around the other way, consumers with the previous schema can read records produced with the new Forward compatible schema, i.e., events from the future, as shown in this diagram:

So, why are Forward compatible changes the opposite of Backward compatible changes? What we discovered in the last blog is that Avro enabled consumers can successfully read records which contain “surprises”—new fields that they don’t expect, and missing fields—as long as they can create default values. For Forward compatibility, these surprises result from changes to the producer schema only, with the consumer schema remaining unchanged. Adding new producer fields (compulsory and optional), will result in the consumer with the previous schema just ignoring them, and deleting optional fields is also fine, as the consumers will create them with default values. In terms of the elephants, we saw in the previous blog, if the producer creates elephants when the consumer is not expecting them, the consumer ignores them, and if the producer deletes elephants that the consumer was optionally expecting, then the consumer can create imaginary “pink” elephants on demand.

In practice, Forward compatibility is useful if you need to upgrade producer schemas before consumer schemas.

The presence or absence of optional fields is permitted for both Backward and Forward compatibility which leads to the next mode…

3. FULL Compatibility

In snakes and ladders you will inevitably go forward and backward.

(Source: Shutterstock)

Full compatibility is stricter than Backward or Forward compatibility, as schemas must be both Backward and Forward compatible, i.e., Producers or Consumers with the new fully compatible schema are able to write and read old and new data—backward and forward time travel! However, to achieve this, only the intersection of the Backward and Forward changes is allowed, i.e. add or delete optional fields. However, it does mean that you can upgrade Kafka producers and consumers in any order you like.

4. TRANSITIVE Compatibility

With BACKWARD_TRANSITIVE compatibility you could travel back to the start of the universe, the Big Bang!

(Source: Shutterstock)

During my experiments, I also tried both Backward and Forward Transitive compatibility, but the results appeared to be indistinguishable from the non-transitive results. What’s going on? Well, it turns out that Transitive compatibility requires that the changes are compatible not just with the immediately previous schema, but with all the previous schemas. As my tests were just checking compatibility, not actually changing the schemas, I wasn’t getting any different results for the Transitive tests. In practice, Karapace will check the proposed schema change against all “versions” of schemas for a subject, and it is likely that with more versions it will be harder to ensure Transitive compatibility over time. At this point, redesigning topics and consumer groups to isolate the changes may be required.

And the FULL_TRANSITIVE mode is even more strict, in terms of the changes that are allowed, but more general in terms of supporting both Backward and Forward compatibility—in theory you could travel back to the Big Bang, and forward to Milliways, the “Restaurant at the End of the Universe”, using this mode!

5. How to Avoid Time Travelling Paradoxes

If you go time traveling, try to avoid the Grandfather paradox and causal-loops

(Source: Shutterstock)

So, in practice when should you use which modes? In general, for long-lived systems, I would suggest using Transitive modes, as this will prevent any unexpected surprises. For consumers that need to replay data on topics back to the start, then BACKWARD_TRANSITIVE would probably be best. If you don’t have control over consumers, then FORWARD_TRANSITIVE should enable you to change your producers while not breaking any existing consumers.

6. What Else?

What else do I know from my time travels backward and forward with Karapace? Well, as well as Avro schemas, Karapace also supports Protobuf and JSON schemas. The default schema type is Avro, but if you want to use anything you need to specify it when you register the schema in Karapace with the “schemaType” field, as follows:

PROTOBUF:

1 | curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{\"schemaType\": \"PROTOBUF\", "schema": etc}' karapaceURL:8085/subjects/protobuf-value/versions |

JSON:

1 | curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{\"schemaType\": \"JSON\", "schema": etc}' karapaceURL:8085/subjects/protobuf-value/versions |

(There’s an example at https://www.karapace.io/quickstart)

In your Kafka producer and consumer code you’ll also need to configure different key and value serializers and deserializers, KafkaProtobufSerializer and KafkaProtobufDeserializer for Protobuf, and KafkaJsonSchemaSerializer and KafkaJsonSchemaDeserializer for JSON. For Kaka Streams applications, you will need to use an appropriate Avro SerDes, or a different SerDes if you want to use Protobuf or JSON schemas (KafkaProtobufSerde, KafkaJsonSchemaSerde). Currently none of the ProtoBuf or JSON Schema SerDes are available under an Apache 2.0 license, so will need to check out the license to see if it meets your requirements).

Which should you use? I haven’t used any of them in practice, but here’s a good comparison of Avro vs. Protobuf (generic, not Kafka-specific, however).

And a final hint—from someone who has been involved in R&D with XML based technologies including schemas for over 20 years (e.g., Open Grid Service Archictecture, Open Geospatial Consortium Sensor Web, and a Performance Modeling tool that used an XML meta-model, and ingested XML monitoring data, etc.). It’s all about the tools! XML was never intended for manual/human creation or use (it was far too verbose and complicated for humans). What made it a powerful technology at the time was the existence of sophisticated tools for schema/data creation, manipulation, validation, transformation etc., including programming language support. I think the same applies to this new generation of Kafka schema technologies—whichever you pick make sure you put some effort into researching, selecting, and testing your tool pipeline, as that will make or break your productivity and ability to use and evolve Kafka schemas over time in enterprise scale applications. Hopefully there will be new open source tools available to manage, view, and evolve Kafka schemas with visualization/GUI support in the near future (this would be an easy way to contribute to the open source community, as Karapace is truly open source—both the APIs and the source code).

However, for generic (non-Kafka specific) Avro, JSON, and Protobuf Schemas there are some options. There is a long list of JSON Schema tools. And some Protobuf tools (from 2020). There are a couple of commercial tools that look useful, including XMLSpy that has been around since XML (for JSON Schema and Avro and Protobuf), Hackolade for Avro, and IntelliJ for Protobuf.