(Source: Shutterstock)

“All is flux, nothing stays still.”

“You can not step twice into the same river; for other waters are ever flowing on to you.”

Heraclitus, 500 BCE

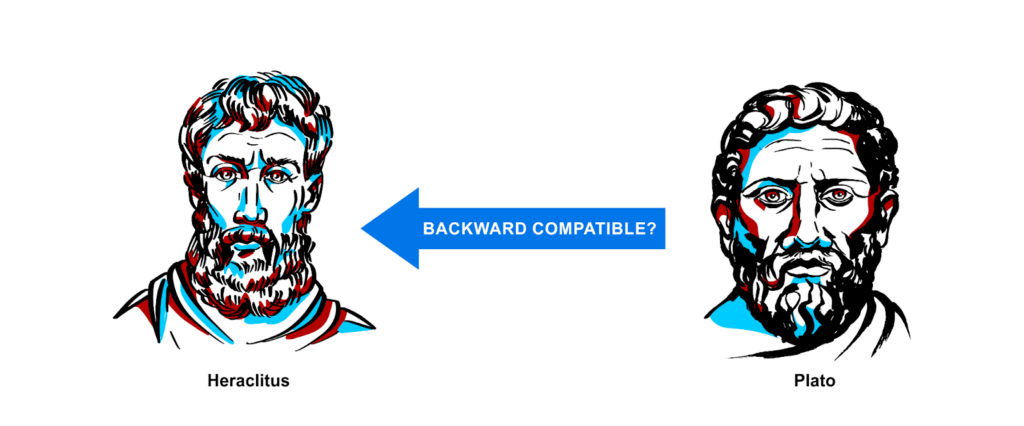

In previous parts of this blog series, we’ve seen how to introduce schemas into Apache Kafka® to enable smooth serialization and deserialization between producers and consumers, and to prevent any unwanted changes in the data format. Plato believed that the forms don’t change, and that change is restricted to the realm of appearances, i.e. the physical world. But Heraclitus (quoted by Plato) believed that “change is inevitable” (and may have invented the concept of stream processing). So what happens when the unchangeable forms (schemas) meet the inevitability of change? Let’s dip our toes in the water and find out.

1. BACKWARD Compatibility Experiments

As we discovered in the last part of this series, the default compatibility level is “BACKWARD”, and you can’t change the schema from a platonic solid to a simple string with this level of compatibility. Let’s try some experiments to determine what can and can’t be changed, using the Karapace REST API and the curl command.

First, let’s check that the compatibility level is still BACKWARD:

curl -X GET -u karapaceuser:karapacepassword karapaceURL:8085/config

{"compatibilityLevel": "BACKWARD"}

Now let’s create the initial PlatonicSolid schema for a new topic “evolution”:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/subjects/evolution-value/versions

{"id": 8}

This apparently worked ok, as any schema is allowed as the first version for a given subject (evolution-value in this case). The result tells us that the schema has been registered successfully and has an ID of 8 (Note: This is not the 1st schema I’ve created in this instance of Karapace).

Let’s double check to see what the latest schema is for evolution-value:

curl -X GET -u karapaceuser:karapacepassword karapaceURL:8085/subjects/evolution-value/versions/latest | jq

{
  "id": 8,
  "schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}",
  "subject": "evolution-value",
  "version": 1
}

This confirms that the schema with ID 8 is the latest version, shows us what the schema is exactly, and also tells us that the version number of the latest schema is 1, i.e. only the initial schema exists for this subject, no schema changes have been made yet.

For simplicity, rather than trying to register different schemas (so we don’t accidentally change the schema), I used a different REST API which checks for compatibility only (but unfortunately doesn’t provide any explanation—just true or false).

First, let’s check that this works and see if the current schema is compatible.

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": true}
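Escaping the schema by hand for every curl call is error-prone. As a convenience, here is a minimal Python sketch that builds the same request body from a plain dictionary. The helper name `registry_payload` is hypothetical and not part of Karapace; it only relies on the fact, visible in the calls above, that the API expects the schema as a JSON string nested inside a JSON object.

```python
import json

# The PlatonicSolid schema from this post, written as a plain Python dict.
PLATONIC_SOLID = {
    "type": "record",
    "name": "PlatonicSolid",
    "namespace": "example.avro",
    "fields": [
        {"name": "figure", "type": "string"},
        {"name": "faces", "type": "int"},
        {"name": "vertices", "type": "int"},
        {"name": "volume", "type": ["float", "null"]},
    ],
}


def registry_payload(schema: dict) -> str:
    """Wrap an Avro schema in the {"schema": "<escaped JSON string>"} envelope."""
    # The inner dumps produces the schema string; the outer one escapes it.
    return json.dumps({"schema": json.dumps(schema)})


if __name__ == "__main__":
    # The printed body can be piped to curl using --data @- (read from stdin).
    print(registry_payload(PLATONIC_SOLID))
```

Editing the dictionary (adding or removing a field) and regenerating the body avoids the quoting mistakes that are easy to make in a one-line shell command.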

Yes it is. For the following examples, I’ve highlighted the changes with different colours. The colour codes are:

- Green for addition

- Red for deletions

- Orange for changes

- Blue for optional additions

- Pink for deleting optional fields

For the first change, let’s try adding a new field without a default:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"new\", \"type\": \"int\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": false}

This failed.

Next, let’s try removing a type from a union, dropping “null” from the volume field (recall that unions in Avro can hold multiple types, including “null”, which allows a field to be empty):

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": false}

This also failed.

Now let’s try changing a field name (faces to face):

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"face\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": false}

That’s also a no. Let’s try deleting a compulsory field (faces):

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"vertices\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": true}

This is our first success, so deleting a field is backward compatible. How about deleting an optional field (volume)?:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"vertices\", \"type\": \"int\"}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": true}

So deleting a field, whether compulsory or optional, is backward compatible. What else can we try? How about adding a new type (int) to the volume union?

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"volume\", \"type\": [\"float\", \"int\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": true}

This is also backward compatible. Perhaps adding a whole new optional field (length, a union) will also work?

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"length\", \"type\": [\"float\", \"null\"]}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/compatibility/subjects/evolution-value/versions/latest

{"is_compatible": false}

Well, that’s surprising! Let’s try actually registering the new schema so we can get an error message to see what’s going on:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"length\", \"type\": [\"null\", \"float\"]}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/subjects/evolution-value/versions | jq

{
  "error_code": 409,
  "message": "Incompatible schema, compatibility_mode=BACKWARD length"
}

This error message is not very helpful, and you only get a report on the first problem if there are multiple issues (based on other experiments I conducted).

However, further reading revealed that, in Avro, a union containing “null” is not enough to make a field optional: the field itself also needs an explicit default value (with “null” conventionally listed first in the union when the default is null), as follows:

curl -X POST -H "Content-Type: application/vnd.schemaregistry.v1+json" -u karapaceuser:karapacepassword --data '{"schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"name\": \"length\", \"type\": [\"null\", \"float\"], \"default\": null}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}"}' karapaceURL:8085/subjects/evolution-value/versions | jq

{
  "id": 9
}

Whoops, given that this succeeded and I was still using the call to register schemas, not just check for compatibility, I accidentally registered a new schema, with a new ID of 9. Let’s double check to see what the latest schema is:

curl -X GET -u karapaceuser:karapacepassword karapaceURL:8085/subjects/evolution-value/versions/latest | jq

{
  "id": 9,
  "schema": "{\"fields\": [{\"name\": \"figure\", \"type\": \"string\"}, {\"name\": \"faces\", \"type\": \"int\"}, {\"default\": null, \"name\": \"length\", \"type\": [\"null\", \"float\"]}, {\"name\": \"volume\", \"type\": [\"float\", \"null\"]}], \"name\": \"PlatonicSolid\", \"namespace\": \"example.avro\", \"type\": \"record\"}",
  "subject": "evolution-value",
  "version": 2
}

This call confirms that we have changed the schema to a new schema with an ID of 9, and that this subject (evolution-value) has 2 different schemas, with the latest being version 2.

This clarifies the relationship between subjects, schema IDs, and versions, which I was a bit confused about. Each unique schema has a unique ID, which is reused across subjects. Each subject has one or more schema versions, and each version points to the ID of the schema registered as that version. In other words, Karapace maintains an “array” of schemas per subject, in which each element holds a schema ID and the index is the version number (starting from 1). So, for our example, schema version [1] contains schema ID 8, and schema version [2] contains schema ID 9.
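The “array” picture can be written down as a toy data structure. This is only a sketch of the relationship described above, not Karapace’s actual storage; the names `versions_by_subject`, `schema_id_for`, and `latest` are made up for illustration.

```python
# Toy model of the registry state after the experiments above.
# Each subject maps to a list of schema IDs; list position + 1 is the version.
versions_by_subject = {"evolution-value": [8, 9]}


def schema_id_for(subject, version):
    """Versions are 1-based, so version n lives at list index n - 1."""
    return versions_by_subject[subject][version - 1]


def latest(subject):
    """Return (version, schema_id) of the newest schema for a subject."""
    ids = versions_by_subject[subject]
    return len(ids), ids[-1]


if __name__ == "__main__":
    print(schema_id_for("evolution-value", 1))
    print(latest("evolution-value"))
```

Because IDs belong to schemas rather than subjects, registering the same schema under a second subject would simply append an existing ID to that subject’s list.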

2. Summary and Analysis

Let’s summarize what we’ve discovered in a table (note that these tests were not exhaustive and other changes are possible; this blog covers some more, including type changes):

| Change Type | Backward Compatible? |
|---|---|
| Add field – no default | No |
| Delete type from union | No |
| Change field name or type | No |
| Delete field | Yes |
| Delete optional field | Yes |
| Add new value to union field | Yes |
| Add new optional field with default value | Yes |
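The rows of the table above can be reproduced with a few lines of Python. This is a deliberately simplified sketch, not Karapace’s checker: it only handles flat records with primitive type names, and it ignores Avro features such as type promotion and aliases. The helper names `record`, `as_union`, and `backward_compatible` are hypothetical.

```python
def record(*fields):
    """Build a minimal record schema from (name, type[, default]) tuples."""
    out = []
    for name, avro_type, *default in fields:
        field = {"name": name, "type": avro_type}
        if default:
            field["default"] = default[0]
        out.append(field)
    return {"type": "record", "name": "PlatonicSolid", "fields": out}


def as_union(avro_type):
    """Treat a bare type as a one-element union so both forms compare alike."""
    return list(avro_type) if isinstance(avro_type, list) else [avro_type]


def backward_compatible(old, new):
    """Can a reader using `new` decode data written with `old`? (simplified)"""
    old_fields = {f["name"]: f for f in old["fields"]}
    for field in new["fields"]:
        writer = old_fields.get(field["name"])
        if writer is None:
            # Field is new to the reader: only safe if it has a default.
            if "default" not in field:
                return False
            continue
        # Every type the writer may have used must still be readable.
        if not set(as_union(writer["type"])) <= set(as_union(field["type"])):
            return False
    # Fields dropped from `new` are simply ignored by the reader.
    return True


BASE = record(("figure", "string"), ("faces", "int"),
              ("vertices", "int"), ("volume", ["float", "null"]))

if __name__ == "__main__":
    no_faces = record(("figure", "string"), ("vertices", "int"),
                      ("volume", ["float", "null"]))
    print(backward_compatible(BASE, no_faces))
```

Note that a renamed field looks to this check like one deletion plus one addition without a default, which is why it is rejected.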

What does BACKWARD compatibility actually mean? One of the many advantages of open source software is that you can inspect the code yourself. The Karapace Avro compatibility checking code says the following about BACKWARD compatibility:

Backward compatibility: A new schema is backward compatible if it can be used to read the data written in the previous schema.

When does this situation occur in Kafka? The most obvious case is one of Kafka’s “super powers”—the ability of consumers to replay/reprocess events multiple times. So, to be backward compatible, a change to a consumer schema must allow the consumer to read any previous records written by the previous schema to a topic.

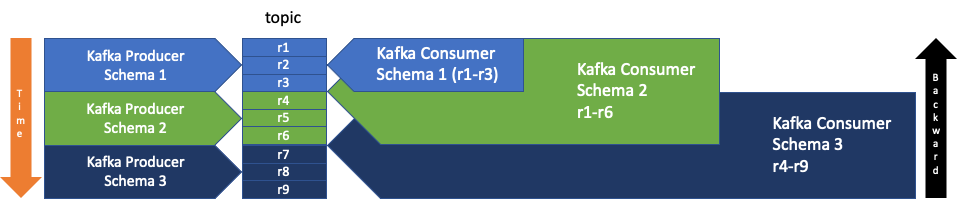

In the following diagram (time flows forward down the page, backward up the page), there are 3 schema versions for a topic, Schema 1, Schema 2, and Schema 3. Schema n is only backward compatible with schema n-1 (and n, itself). Records r1-r3 are written by a producer with Schema 1, and can be read by a consumer with Schema 1. Records r4-r6 are written by a producer with Schema 2, which is backward compatible with Schema 1. Records r1-r6 can therefore all be read by a consumer with Schema 2. Records r7-r9 are written by a new Schema 3 which is only backward compatible with Schema 2 (not Schema 1). Only records r4-r9 can therefore be read by a consumer with Schema 3.
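The same reasoning can be checked mechanically. The sketch below just encodes the scenario described in the paragraph above (nine records, three schema versions, each schema guaranteed to read only its own data and that of its immediate predecessor); the names `written_with` and `readable` are illustrative only.

```python
# Which schema version wrote each record: r1-r3 -> 1, r4-r6 -> 2, r7-r9 -> 3.
written_with = {f"r{i}": (i - 1) // 3 + 1 for i in range(1, 10)}


def readable(reader_version):
    """Records a consumer can read when schema n only guarantees n and n - 1."""
    ok = {reader_version, reader_version - 1}
    return [r for r, v in written_with.items() if v in ok]


if __name__ == "__main__":
    for version in (1, 2, 3):
        print(version, readable(version))
```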

BACKWARD Compatibility

For each backward compatible schema change, there must be no changes that would break the consumer logic and Avro data type. What does the table above tell us about permitted changes? Basically, that you can delete a compulsory or optional field, or add an optional field. This table summarizes the compatible changes in terms of the previous write schema and the data produced by the producer, the consumer read schema (the new backward compatible schema), the data object created as a result of successful deserialization, and whether fields are present, optional, or absent:

| Data/Write Schema | Read Schema | Constructed Data Object | Backward Compatible? |
|---|---|---|---|
| Present | Present | Present | Yes |
| Present | Absent | Absent | Yes |
| Absent | Optional | Present (default value) | Yes |
| Absent | Present | Failure | No |

How does this work? If the new schema sees a field that it doesn’t know about (the old data contains the field, but it’s been deleted in the new schema) then it will simply ignore it (2nd row). And if the new schema is now expecting an (optional) field that is not present in the old data, it simply creates it with a default value (3rd row). If the data and schemas are unchanged (1st row) then everything just works as normal, and if a field is compulsory in the read schema but missing from the data (4th row) then the change is incompatible and an exception will result.
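The four rows can be sketched as a small function. This is an illustration of the rule only: real Avro resolves the writer and reader schemas while decoding binary data, whereas this hypothetical `read_with_schema` helper works on an already decoded dictionary.

```python
def read_with_schema(old_record, reader_schema):
    """Build the reader's view of a record written with an older schema."""
    result = {}
    for field in reader_schema["fields"]:
        name = field["name"]
        if name in old_record:
            # Row 1: present in both the data and the read schema.
            result[name] = old_record[name]
        elif "default" in field:
            # Row 3: absent from the data, so use the default value.
            result[name] = field["default"]
        else:
            # Row 4: compulsory in the read schema but missing from the data.
            raise ValueError(f"no value and no default for field {name!r}")
    # Row 2: fields found only in old_record are never copied, i.e. ignored.
    return result


if __name__ == "__main__":
    cube = {"figure": "cube", "faces": 6, "vertices": 8, "volume": None}
    reader = {"fields": [
        {"name": "figure", "type": "string"},
        {"name": "faces", "type": "int"},
        {"name": "length", "type": ["null", "float"], "default": None},
        {"name": "volume", "type": ["float", "null"]},
    ]}
    print(read_with_schema(cube, reader))
```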

The simplified consumer logic is therefore: “If I see something I don’t know about then ignore it; if I am expecting something and I don’t see it, then pretend that it exists!”

For example, if the new schema is not expecting an elephant, then it ignores any elephants present:

There is no elephant

(Source: Shutterstock)

But if the new schema is expecting an elephant (with a default value of, say, “pink”), and there is none, then imagine one!

You are only limited by your imagination!

(Source: Shutterstock)

Implications for Kafka Producers and Consumers

So now we know which changes are backward compatible and allowed by Karapace, the open source schema registry, when made with curl commands (the same behaviour is to be expected if Kafka producers attempt to change a schema and automatic schema registration, the default, is enabled). But what, if anything, has actually changed from the perspective of the Kafka producers and consumers? (Remember, Kafka itself doesn’t know anything about schemas, and Karapace is only used by “convention” by cooperating producers and consumers.) Well, nothing has really changed. Sure, if they ask Karapace for the latest schema for a subject, they will now get the ID of the latest schema version. However, unless we actually change the schemas and data object code for producers and consumers, they will happily continue to write and read data using the previous schema (which has a different ID to the new schema, and only that ID is passed between them as part of the record structure). If you want to actually introduce a backward compatible schema change to the Kafka producers and consumers, you will need to update the consumer schema and Avro data object code before the same changes are made to producers writing to the same topic (or at the same time, if that is feasible).
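To make “only that ID is passed between them” concrete, here is a sketch of how a schema ID can travel with each record. It assumes the Confluent-compatible framing (a zero “magic byte” followed by a 4-byte big-endian schema ID, then the Avro payload), which this post does not spell out; the function names `frame` and `unframe` are hypothetical.

```python
import struct

MAGIC_BYTE = 0  # assumed framing marker, see the note above


def frame(schema_id, avro_payload):
    """Prefix an Avro-encoded payload with the magic byte and schema ID."""
    return struct.pack(">bI", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message):
    """Split a framed message into (schema_id, avro_payload)."""
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError("not a schema-registry framed message")
    return schema_id, message[5:]


if __name__ == "__main__":
    # A producer still on the old schema keeps stamping ID 8 on its records,
    # regardless of ID 9 now being the latest version in the registry.
    message = frame(8, b"payload")
    print(unframe(message))
```

The consumer looks up whichever ID it finds in the record, so registering a new version has no effect until producers start writing with it.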

If we want to introduce a change that is not backward compatible, one solution is to keep the existing topic and old schema, and create a new topic for the new schema.

But what happens if we want to ensure that schemas are backward compatible across multiple changes so we can continue to read all of the records present on a topic back to the start as well as accepting new records written by new schemas? Presumably, as Karapace keeps track of all schema versions for a subject there must be a reason? Also, what other compatibility modes are possible? If backward changes are permitted, how about forward changes? Let’s find out in the next blog.

Note: If you are only using the Kafka Streams API, then you can (in theory) stop reading now —you know everything there is to know about Streams compatibility, as the only compatibility mode supported by Streams is BACKWARD (Streams applications always need to read previous data). Actually, FULL and BACKWARD_TRANSITIVE modes are also supported (as they are “stronger” than BACKWARD), so maybe the next blog is still worth reading!

Philosophical Postscript

(Source: Shutterstock)

In order to be really “backward compatible”, we should give Plato and Heraclitus the last word.

Why has a pink elephant replaced my perfect Platonic Solids? And who says that unchangeable Schemas can change (they are obviously uneducated barbarians)!

Plato lived after Heraclitus (and quoted him), so let’s see if Plato is backward compatible with Heraclitus. For Plato, the immutable forms, and the changeable physical world were both essentials. However, Heraclitus only believed that everything changed. As Heraclitus didn’t believe in anything being immutable, and forms are essential in Plato, then Plato isn’t backward compatible with Heraclitus. However, maybe Heraclitus had a time machine (more of that in the next blog), so could Heraclitus be backward compatible with Plato instead? Yes, as Heraclitus would be more than happy to ignore Plato’s forms.

And to answer Plato’s complaint, the pink elephant is imaginary, so it’s just an “idea” of a pink elephant, so it obviously inhabits the realm of the forms/schemas. And as we discovered, in Karapace, schemas actually are immutable. If a backward compatible schema change is permitted then a new schema pops into existence as the latest schema version for a subject. So Plato is happy (schemas are immutable), and Heraclitus is happy (there can be multiple changes of schema versions for a subject).

Follow the Karapace Series

- Part 1—Apache Avro Introduction with Platonic Solids

- Part 2—Apache Avro IDL, NOAA Tidal Example, POJOs, and Logical Types

- Part 3—Introduction, Kafka Avro Java Producer and Consumer Example

- Part 4—Auto Register Schemas

- Part 5 —Schema Evolution and Backward Compatibility

- Part 6 —Forward, Transitive, and Full Schema Compatibility
