Overview

Elasticsearch is a search and analytics engine built with the Apache Lucene search library. It extends the search functionality of Lucene by providing a distributed, horizontally scalable, and highly available search and analytics platform. Some common use cases for Elasticsearch include log analysis, full-text search, application performance monitoring, SIEM, etc.

OpenSearch is an open source search and analytics suite derived from Elasticsearch 7.10.2 and Kibana 7.10.2. OpenSearch is Apache 2.0 licensed and community driven. OpenSearch is also built with Apache Lucene and has many of the core features of Elasticsearch. We will review some of the building blocks that make Elasticsearch and OpenSearch some of the leading analytics and search engines. The architecture and functionality discussed in this blog is common for both Elasticsearch and OpenSearch.

Elasticsearch Architecture

Lucene

Lucene is an open source, high-performance search library built with Java, and acts as the basis of some of the popular search engines such as Apache Solr, Apache Nutch, OpenSearch, and Elasticsearch. Lucene has been around for more than 20 years and is a very mature library maintained by an open source project under the governance of The Apache Foundation.

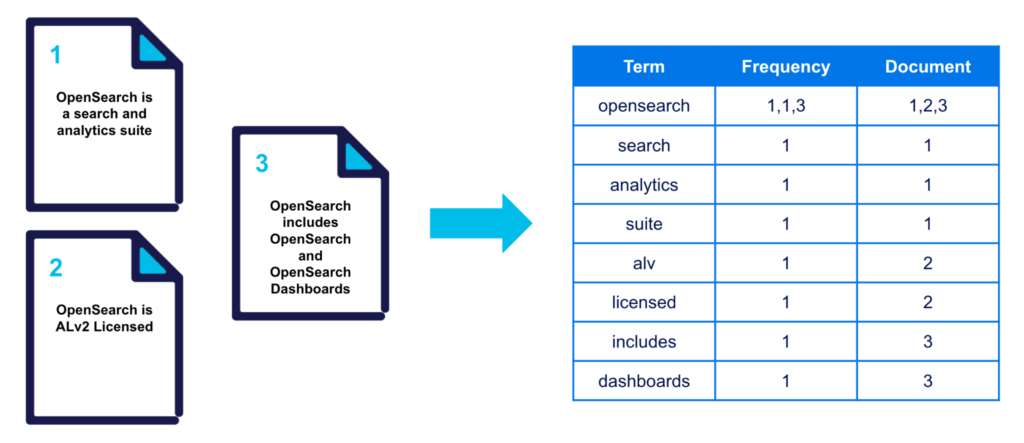

At the heart of Lucene is the inverted search index, which makes it possible to achieve incredibly fast search results. The inverted search index provides a mapping of terms to documents that contain those terms. During search, it is quicker to look up a term in the sorted term dictionary and retrieve the list of matching documents. Lucene does support storing several types of information such as numbers, strings, and text fields. Lucene has a rich search interface with support for natural language searches, wildcard searches, fuzzy, and proximity searches.

Elasticsearch Functionality

Lucene is a search library but not a scalable search engine. Elasticsearch uses Lucene at the core for search but has built many additional capabilities on top of Lucene to make it a full-featured search and analytics engine. Below is a high level summary of some of those key functionalities.

Distributed Framework

Data can be stored and processed across a collection of nodes within a cluster framework. Elasticsearch takes care of distributing the workload and data and manages the Elasticsearch nodes to maintain cluster health.

Scalability

With Java heap size limitations, vertically scaling a node is only possible to a certain extent. To be able to manage petabytes of data, horizontal scalability is required. Elasticsearch provides near-linear scalability simply by adding additional nodes to the cluster.

High Availability

With data replication and maintaining data across nodes in the cluster, Elasticsearch can handle node failures with no data loss or downtime. A remote cluster can be set up for replication in a different zone or region for additional failover.

Database-Like Capabilities

Elasticsearch being a document store has some built-in database capabilities such as unique document id, document updates, document versioning, and document deletes.

Faceting/Aggregations

Faceting/aggregations allow Elasticsearch to function as an analytics engine. With aggregations, you are able to gain insights into the data and detect trends, outliers, and patterns in your data.

User Interface

Elasticsearch provides a REST API for cluster management and to ingest and access the data in the cluster. A powerful query DSL is available to perform multi-dimensional queries. Elasticsearch also supports a SQL API for SQL-like queries of the data.

Security and Access Control

Elasticsearch secures cluster communication and client-cluster communication with TLS and certificates. Cluster administration and access to cluster data are controlled through role-based access control (RBAC). Access control can be configured at the cluster, index, and document level.

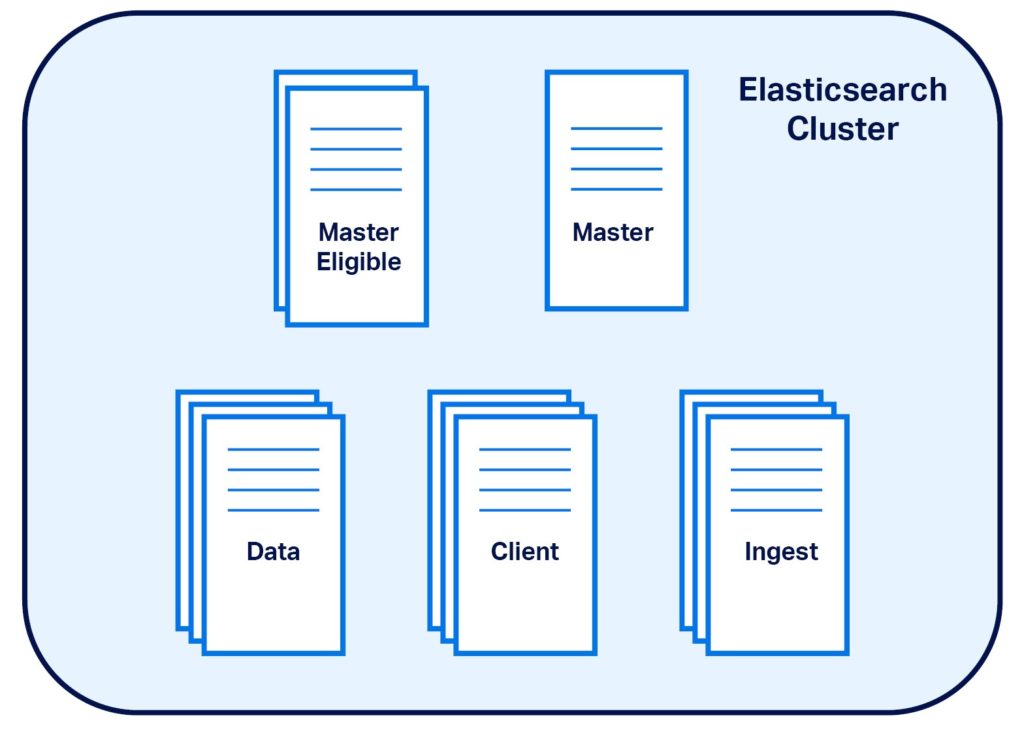

Elasticsearch Cluster

An Elasticsearch cluster is a distributed collection of nodes that each perform one or more cluster operations. The cluster is horizontally scalable, and adding additional nodes to the cluster allows the cluster capacity to increase linearly while maintaining similar performance. Nodes in the cluster can be differentiated based on the specific type of operations that they perform. Although a node can perform any or all cluster operations, such a configuration could negatively impact the stability of the cluster. The standard practice is to designate different sets of nodes for different cluster functions.

Master Node

Each cluster has a single master node at any point in time and its responsibilities include maintaining the health and state of the cluster. They are typically lightweight as they don’t process any data. Master nodes function as a coordinator for creating, deleting, managing indices, allocating indices and the underlying shards to the appropriate nodes in the cluster.

Master-Eligible Nodes

Master-eligible nodes are the ones that are candidates for being a master node. A cluster is recommended to be built with more than one master-eligible node, and the number of master-eligible nodes should be an odd number to handle cluster partitions.

Data Nodes

Data nodes hold the actual index data and handle ingestion, search, and aggregation of data. Data node operations are CPU- and memory-intensive and require sufficient resources to handle the data load. The maximum suggested heap size for any Elasticsearch/OpenSearch node is 30GB. Instead of vertical scaling, horizontal scaling is recommended to increase the capacity of the cluster.

Client Nodes

Client nodes act as a gateway to the cluster and help load balance the incoming ingest and search requests. This is particularly useful for search use cases. Each search has a fetch and reduce phase. During the reduce phase, results from the individual shards need to be put together. If a data node is under stress and if it acts as a client node as well, then not only the query results for shards residing on that node will be affected but also queries that are coordinated through that node.

Ingest Nodes

Ingest nodes can be configured to pre-process data before it gets ingested. As some of the processors such as the grok processor can be resource-intensive, dedicating separate nodes for the ingest pipeline is beneficial as search operations will not be impacted by ingest processing.

Data Organization

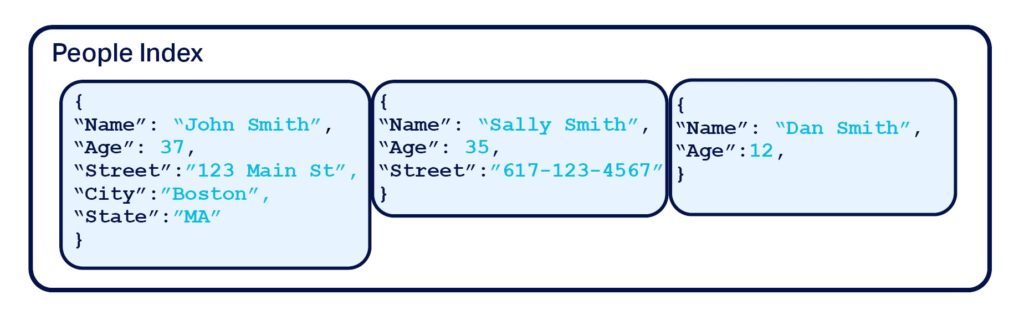

In Elasticsearch and OpenSearch, data is organized into indices that contain one or more documents. Documents in turn have one or more fields.

Index

An index is a logical collection of documents. The index is the basic unit by which end users manage their data. An index is akin to a database table. Documents in an index can be anything, like a paragraph from a book, a logline, a tweet, or weather data for a city, but typically similar documents are grouped into the same index. Although Lucene also organizes data by index, an Elasticsearch/Opensearch index is not directly mapped to a Lucene index. Instead, each index is broken down into multiple sub-indices called shards. Shards are directly mapped Lucene indices.

Document

Documents are JSON structures that hold a collection of fields and their values. Each document maps directly to the underlying Lucene document. Elasticsearch/OpenSearch does add some additional metadata fields such as _id, _source, _version, etc. to the documents to provide enhanced functionality. Although an index could have documents with entirely different content, for efficiency purposes, you would typically store similar documents in the same index.

As an example, the following customer index has documents about a store’s customers. Though the documents will all have similar content, some fields may only appear only in certain documents.

Fields

Fields are key-value pairs that make up a document. Fields can be of several different types such as numbers, text, keywords, geo points, etc. The type of field determines how it will be processed and stored in Elasticsearch/OpenSearch and how it can be searched and accessed. We will review some of the most common data types in a later section.

Internal Data Structures

Shards

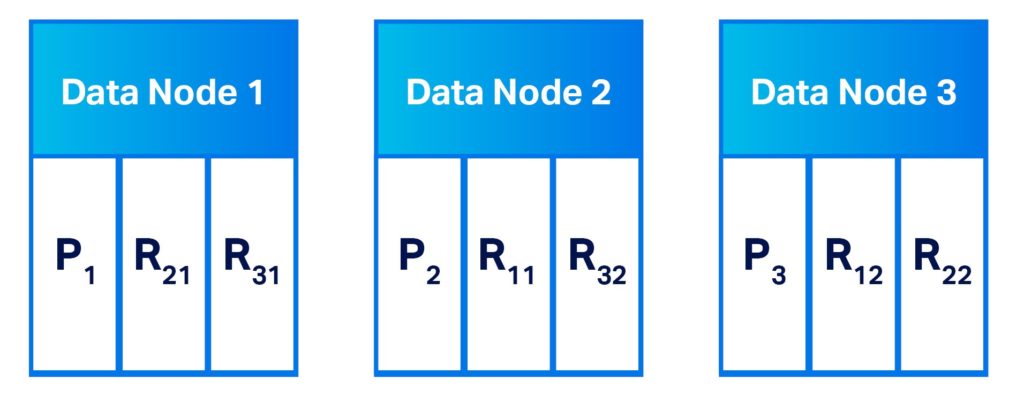

To distribute an index’s data across multiple nodes to achieve scalability and reliability, Elasticsearch/OpenSearch can break up an index into multiple smaller units called shards. Each shard maps to an underlying Lucene unit called index. In other words, each index is mapped to one or more Lucene indices aka shards. These shards can then be distributed across the cluster to improve processing speeds and guard against node failures.

Primary and Replica Shards

The number of shards per index is a configurable parameter and has major implications on the performance of the cluster. Search operations in Elasticsearch and OpenSearch are performed at the shard level and having multiple shards help with increasing the search speed as the operation can be distributed across multiple nodes.

You can break each index into as many shards as there are data nodes but having too many shards has its own issues. Increasing the number of shards increases the cluster state information which means more resources will be needed to manage the shard state across cluster nodes. General practice is to keep the shard size between 30 and 50GB and this will dictate how many shards you would configure for your index

To guard against data loss, Elasticsearch/OpenSearch allows configuring replicas for your data. This is an index-level setting and can be changed at any time. As the index is stored in shards, configuring replicas would cause replica shards to be created and stored. Elasticsearch/OpenSearch would try to allocate replica shards to different nodes other than where the primary shard resides (if zone awareness is configured, it would try to place the copies in an alternate zone).

With replica shards, a node failure doesn’t lead to data loss or a data unavailability situation. Replica shards are not free and come at their own price. Storing the replica would require additional storage space and can slow down indexing operations as the data needs to be indexed to both primary and replica shards. For this reason, replicas are usually turned off during heavy indexing operations.

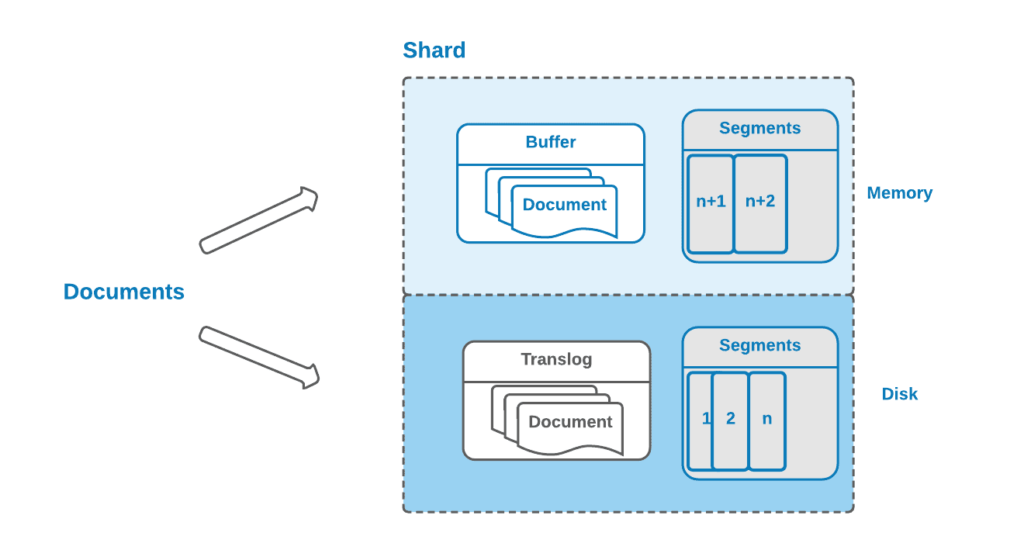

Segments

A Lucene index/Elasticsearch shard is subdivided into smaller units called Lucene segments. Segments are file system structures that get created as data is ingested into an index. Search operations are performed at a segment level.

Segment Merging

Once a segment is created, its contents cannot be modified. Deleting a document is achieved by simply marking the document as deleted. Similarly updating a document requires deleting the old document and creating an updated document.

To free up space from the deleted documents, Elasticsearch/OpenSearch merges the segments to create new segments by expunging deleted documents. Merging also helps to combine smaller segments into larger segments as smaller segments have poor search performance.

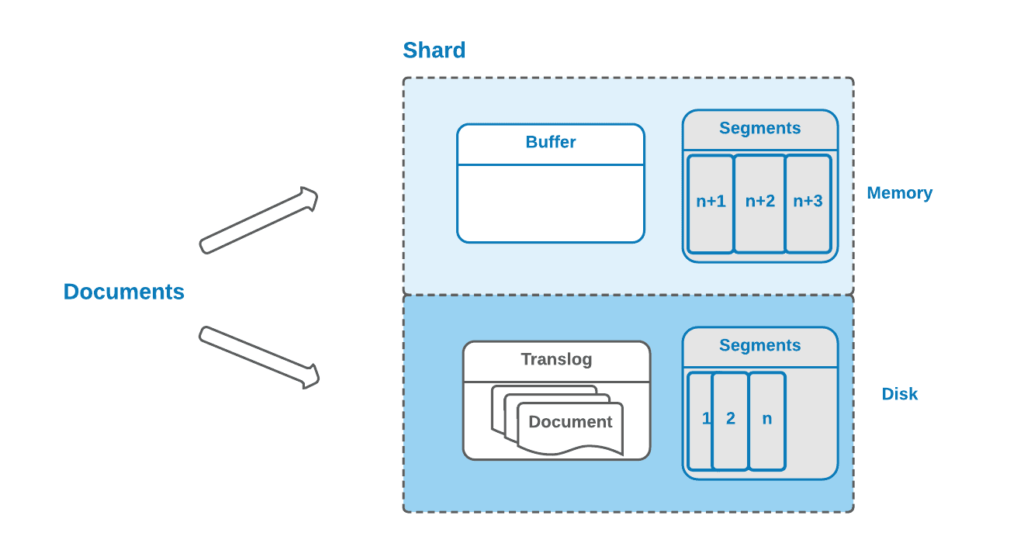

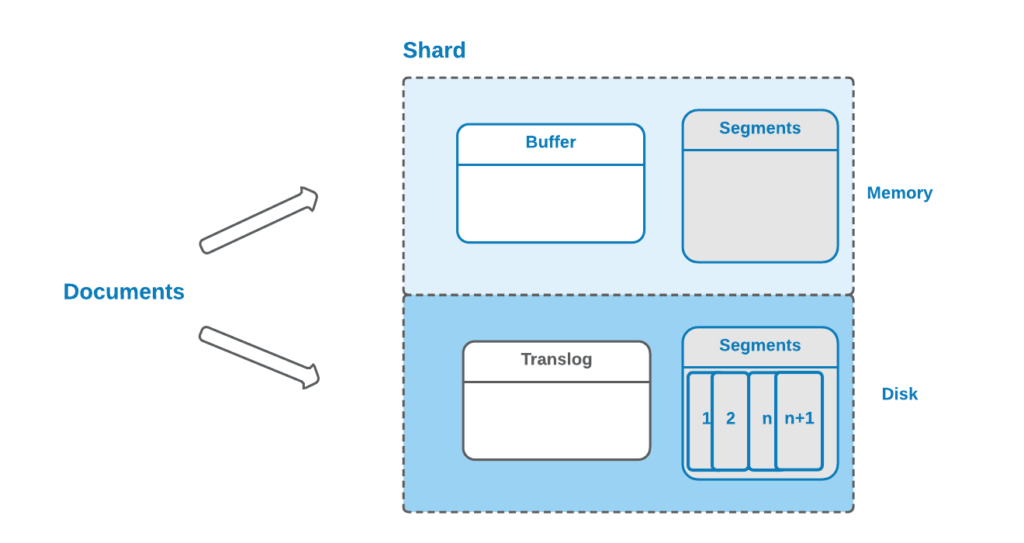

Translog

When data is written to a Lucene index, it is held in memory and committed to the disk on reaching a certain threshold. This avoids frequent writes to the disk thereby improving write performance and avoids creating many small shards. The downside is that any uncommitted writes to Lucene will be lost on a failure. To overcome a potential data loss, Elasticsearch/OpenSearch writes all uncommitted changes to a transaction log known as translog. In the event of a failure, data can be replayed from the translog. Translog size, sync interval, and durability can be set at the index level.

Data in the transaction log is not searchable immediately unless a refresh operation is performed. By default, Elasticsearch/OpenSearch does an index refresh every second. A refresh causes the data in the translog to be converted into an in-memory segment which then becomes searchable. Refresh does not commit the data to make it durable.

Document Indexing

Input data to Elasticsearch/OpenSearch is analyzed and tokenized before it gets stored. Typically only the analyzed tokens called the terms are stored by the Lucene library. Elasticsearch/OpenSearch also stores the original document as received in a special field called the _source. Although it consumes additional storage space, _source field is critical in providing document update functionality and is also required for reindex operations.

Data Analysis

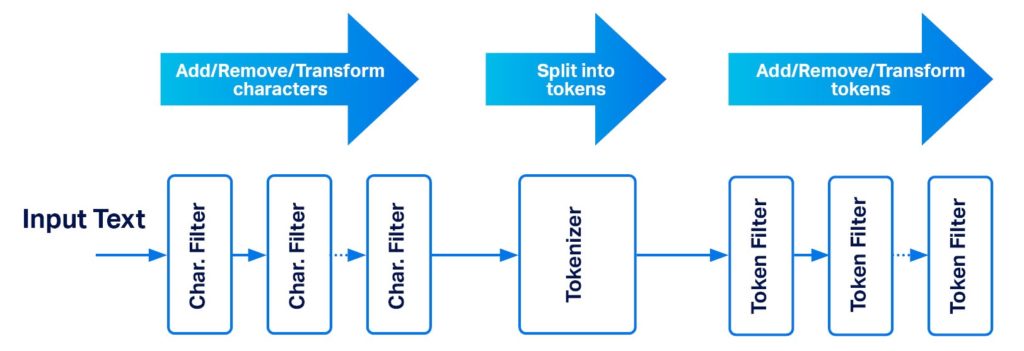

Analysis is the process of converting the input field into a term or a sequence of terms which then gets stored in the Lucene inverted index. This is achieved with the help of analyzers and normalizers. Pre-built analyzers and normalizers are available for common use cases.

For instance, the standard analyzer breaks text into grammar-based tokens and the whitespace analyzer breaks text into terms based on whitespace. An analyzer is a combination of character filters, tokenizers, and token filters. Custom analyzers can be built using the appropriate set of filters and tokenizers.

Character Filters

Character filters pre-process the input text before forwarding it to the tokenizer. They work by adding, removing, or changing characters in the input text. For example, the built-in HTML strip character filter strips HTML elements and decodes HTML entities. Multiple character filters can be specified and they will be applied in order.

Tokenizers

Tokenizers convert the input stream of characters into tokens based on certain criteria. For instance, the standard tokenizer breaks text into tokens based on word boundaries. It will also remove punctuation. The whitespace tokenizer breaks text into tokens at whitespaces

Token Filters

Token filters post-process the tokens from the tokenizer. Tokens can be added, removed, or modified. For example, the ASCII folding filter will convert Unicode characters to the closest ASCII equivalent. The stemming token filter applies stemming rules to convert words to their root form.

Normalizer

Normalizers are similar to analyzers but a tokenizer cannot be specified as they only produce a single token. They work with character filters and token filters that work on a character basis

Field Data Types

The data type associated with a field defines how the field will be indexed and stored. Although Elasticsearch/OpenSearch doesn’t require a schema to be defined for an index, it is best practice to define the schema with index mappings. Index mapping defines the expected fields and what data type they should be mapped to. If a data type is not explicitly defined, Elasticsearch/OpenSearch will try to guess the appropriate field type based on the contents. Some of the common field data types are reviewed below.

Text Type

The text type is primarily used to index any human-generated text such as tweets, social media posts, book contents, product descriptions, etc. Text type causes the input data to be analyzed by the specified analyzer. If no analyzer is specified, then the standard analyzer will be used. Text fields are particularly useful for performing phrase queries, fuzzy queries, etc.

Keyword Type

Keyword type is typically used for indexing structured content such as names, ids, ISBN, categories, etc. Keyword fields are particularly useful for sorting, aggregations, and running scripts. To enable this, they are stored in a columnar format by default. Only a normalizer can be specified for a keyword as it is supposed to generate a single token.

Numeric Type

Numeric types of integers, unsigned integers, floats, etc. are supported. When choosing a numeric type, the smallest type that could fit the input range should be chosen to conserve storage space. BKD trees are used to store numeric fields.

Geo Point Type

Geo point is used to represent latitude and longitude data. With geo point, queries that rely on location, distance can be performed. BKD fields are used to store geo points.

Inverted Index

Lucene and in turn Elasticsearch/OpenSearch are known for their ability in providing blazingly fast search results. Such speeds are achieved by using an inverted index as the basis for document mapping. An inverted index contains a map of terms to the associated documents in the index. Terms are produced during the ingestion process by the document analyzers/normalizers which are then updated in the inverted index. Adding or modifying a document causes the inverted index to be updated and this incurs a one-time cost.

Term Dictionary

Term dictionary is the alphabetically sorted list of terms in the index and points to the posting list. Term dictionary is stored in the disk and updated as documents are added and modified. Terms are the token outputs from the analyzers as they process the input stream.

A simplified version of the terms dictionary has the following fields:

- Term: Term produced by the analyzer/normalizer

- Document Count: Count of documents that contain the term. Required for scoring.

- Frequencies: Number of times the term appeared in the documents. Required for scoring.

- Positions: Position at which the term appears in the field. Required to support phrase and proximity queries.

- Offsets: Character offsets to the original text. Required for providing faster search highlighting.

Term Index

Term dictionary contains a sorted list of terms in the index; this can get quite large for high-cardinality fields. For example, consider a field that stores a person’s name. As the number of unique names can be large, keeping all those terms in memory could be prohibitively expensive. To avoid such a scenario, Elasticsearch/OpenSearch uses a term index which is a prefix tree. The leaves of the tree point to the appropriate block of the term dictionary stored in the disk. During a search, the prefix for the term will be looked up in the term index first to find the actual term dictionary block that contains the sections of terms that match the prefix. The block is then loaded into memory to look up the actual term within the block.

Document Searching

Elasticsearch/OpenSearch runs a query and fetch with a distributed search algorithm to run search queries.

Query Phase

During the query phase, Elasticsearch/OpenSearch sends the query to all the shards associated with the index. Search requests can be sent to a primary or a replica shard. If n results are requested by the query, then each shard will run the search locally and return n results. The results would only contain the document ids, scores, and other relevant metadata but not the actual document.

Fetch Phase

The node that is coordinating the search would order the query results from all the shards and put together the final list of n results. Then it would perform a fetch during which the actual documents would be retrieved and returned.

Document Scoring

Document scoring is achieved by the similarity module. The default similarity module is BM25 similarity. It is an improvement over the TF-IDF algorithm widely used in natural language processing. In particular, BM25 takes into account the field length and applies term frequency saturation.

TF: How frequently the given term appears in the field. The higher the number of times the term appears in the document, the more likely the document is to be relevant.

IDF: How frequently the term appears across all documents in the index. If it appears more commonly, then the term is less relevant. Common words such as “the” could appear many times in an index and a match against them is less important.

Norm: Length normalization. For the same term frequency, a shorter field is more relevant than a longer field. The same term appearing twice in a field with a length of 100 is more important than the term appearing twice in a field with a length of 1000.

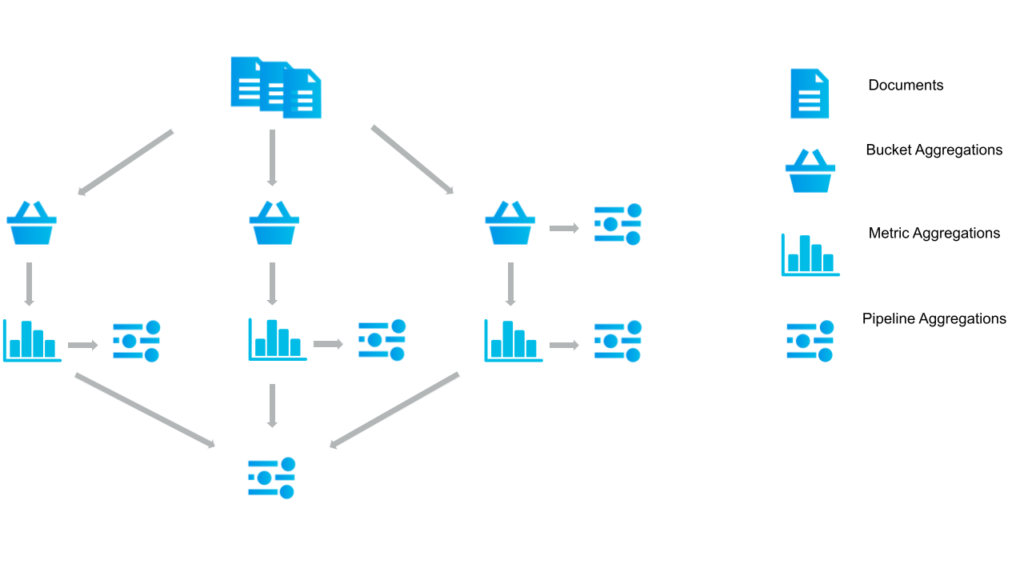

Aggregations

Elasticsearch and OpenSearch are not only search engines; they also have built-in, advanced analytics capabilities. Aggregations let you filter and categorize your documents, calculate metrics, and build aggregation pipelines by combining multiple aggregations.

Metrics Aggregations

Metrics aggregations let you calculate metrics on the values generated from the documents. The values could be specific fields in the documents being aggregated or generated dynamically through scripts. They can be included as sub-aggregations to bucket aggregations and will produce metrics per aggregated bucket.

Numeric Metric Aggregations

Numeric metric aggregations produce numeric metrics such as max, min, sum, and average values. They can be further classified as single-value or multi-value metric aggregations.

Single value aggregations produce a single metric. An example would be the sum aggregation which produces a single sum of a specific field from the selected documents.

Example: Sum of all orders for a bookstore

| GET book_store_orders/_search{ “size”: 0, “aggs”: { “total_orders”: { “sum”: { “field”: “order_price” } } }} |

Multi-value metric aggregations produce multiple metrics based on specified fields in the aggregated documents. For example, the extended_stats aggregation produces stats such as sum, ave, max, min, variance, and standard deviation metrics

Non-Numeric Metric Aggregation

Some metric aggregations do produce output that are non-numeric. A good example is the Top hits aggregation. Used as a sub-aggregation, it produces top matching documents per bucket. Top matching is defined by a sort order and defaults to the search score.

Example: Orders with highest order price by category

| GET book_store_orders/_search{ “size”: 0, “aggs”: { “category”: { “terms”: { “field”: “category.keyword”, “size”: 5 }, “aggs”: { “top_orders_by_price”: { “top_hits”: { “size”: 10, “sort”: [ { “order_price”: { “order”: “desc” } } ] } } } } }} |

Bucket Aggregations

Bucket aggregations categorize the matching set of documents into buckets based on a bucketing criteria. Bucketing criteria could be based on unique values of a field (terms aggregation), date range (date histogram) aggregation, etc. Bucket aggregations can be used to paginate all buckets (composite aggregation), provide faceting, or can act as inputs to other metric aggregations.

Example: Order price stats by category

| GET book_store_orders/_search{ “size”: 0, “aggs”: { “sale_by_category”: { “terms”: { “field”: “category.keyword”, “size”: 10 }, “aggs”: { “sales_stats”: { “stats”: { “field”: “order_price” } } } } }} |

Pipeline Aggregations

Pipeline aggregations can be used to compute metrics and they act on the output of other aggregations making it possible to build a chain of aggregations. Pipeline aggregations can be further categorized as parent and sibling pipeline aggregations. Parent pipeline aggregations compute new aggregations based on the output of the parent aggregation. Sibling pipeline aggregations compute new aggregations based on output from one or more sibling aggregations. Pipeline aggregations use bucket path syntax to identify the parent or sibling aggregation which has the necessary input parameters.

Example: Max order price by category and overall

| GET book_store_orders/_search{ “size”: 0, “aggs”: { “sale_by_category”: { “terms”: {“field”: “category.keyword”}, “aggs”: { “max_order_price”: { “max”: {“field”: “order_price”} } } }, “max_order_price_across_categories”: { “max_bucket”: { “buckets_path”: “sale_by_category>max_order_price” } } }} |

Conclusion

In this blog, we reviewed OpenSearch and Elasticsearch and the architecture behind them.

Both Elasticsearch and OpenSearch are great tools that can be utilized to solve your organization’s search and analytics data needs. Both can handle massive amounts of data, are highly scalable, and can support millisecond response times. Architected to handle node and data center failures, they provide high availability and reliability for your production workload.

Elasticsearch and OpenSearch have a mature ecosystem with many different tools such as Logstash (for the ELK Stack), Beats, REST clients, Kibana, and OpenSearch Dashboards to ingest and interact with your data. They can be deployed to handle several different use cases such as log analysis, data analytics, document search, SIEM, performance monitoring, and anomaly detection.

If you need help designing, hosting, and supporting your data cluster, we do that at Instaclustr. We are a full lifecycle company that can help with your open source data needs. Get started with a free trial of our Managed Platform today.

Transparent, fair, and flexible pricing for your data infrastructure: See Instaclustr Pricing Here