1. Introduction

Redis™ is an in-memory database with common use cases such as caching, leaderboards, and streaming. As with most databases, the data inside Redis can grow and grow, and learning how to properly manage the growth of that data is a key concern for database engineers today.

Redis offers several options for scaling, and Instaclustr can help with all of them. This blog covers scaling Redis both vertically and horizontally, the options that Instaclustr offers, and some best practices for Redis scaling.

2. What is Scaling?

Scaling, in its simplest form, means making your Redis cluster bigger. However, before deciding whether to scale, it is worth examining whether you can reduce the amount of data that you need to store. For example, if you are using Redis as a cache, you can look closely at the Time to Live (TTL) of your keys. Redis can set a TTL on a key in seconds or milliseconds and removes the key from your database once the TTL expires. This could reduce the size of your database and eliminate the need for scaling. Another option is to review the data types that you are using within Redis and modify them to be more efficient.
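As a minimal sketch of the TTL approach, the helper below writes a cache entry with an expiry in seconds. The function name `cache_with_ttl` and the `SupportsSet` protocol are hypothetical names introduced for illustration; the only assumption about the client is that it exposes a `set(name, value, ex=...)` method, as the redis-py client does.

```python
from typing import Optional, Protocol


class SupportsSet(Protocol):
    """Hypothetical minimal shape of a client that accepts SET with an expiry."""

    def set(self, name: str, value: str, ex: Optional[int] = None) -> object: ...


def cache_with_ttl(client: SupportsSet, key: str, value: str, ttl_seconds: int) -> None:
    """Store a cache entry that Redis removes once ttl_seconds have elapsed."""
    if ttl_seconds <= 0:
        raise ValueError("ttl_seconds must be positive")
    # Corresponds to the Redis command: SET key value EX ttl_seconds
    client.set(key, value, ex=ttl_seconds)
```

With redis-py installed, a `redis.Redis` instance could be passed as `client`. Choosing the shortest TTL that your application can tolerate keeps stale keys from accumulating in memory.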

If you have decided that scaling is what is needed for your use case, then either horizontal or vertical scaling can be done within Redis. Horizontal scaling can be considered “scaling out”. In practice this means adding additional servers to your Redis cluster. Vertical scaling can be considered “scaling up”. This means increasing the resources on the existing servers.

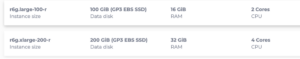

As an example, let’s say we have a 3-node cluster in AWS (Amazon Web Services) with no replicas (this is not recommended in the real world, but it works for example purposes). This cluster is running on the AWS r6g.large node size, which has 100 GiB storage, 2 cores, and 16 GiB RAM.

If we decided to scale this cluster horizontally, we would add more instances of the same size to the cluster.

If we decided to scale this cluster vertically, we would keep the three initial nodes and increase the hardware on those instances, for example, taking them to an r6g.xlarge with 200 GiB storage, 4 cores, and 32 GiB of RAM.

3. Scaling Redis Vertically

As demonstrated above, scaling vertically means increasing the hardware on the existing instances. If Redis is running on a single node to start with, vertical scaling would usually be the first consideration. Things to consider when scaling vertically are:

- Limits of hardware: at some point an instance reaches a limit beyond which no more resources can be added to it.

- Costs: will it be cheaper to add smaller instances, or stay on fewer instances but with more hardware?

- Complexity: managing a distributed system across multiple instances increases the complexity of maintaining the Redis cluster.

- Which resources to increase: Redis is an in-memory application but can use extra cores for forked processes and storage for backups.

If scaling up is the preferred option, make sure to investigate the correct instance size to move to.

Review your metrics including:

- Memory usage

- CPU usage

- Disk usage

- Latency

- Throughput

- Network throughput

Use these metrics to decide which component needs to be scaled, and try to match it to a suitable instance size.
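One way to turn those metrics into a decision is to compare each resource's utilization against a threshold and list the ones that exceed it. The sketch below is illustrative only: the function name `resources_under_pressure`, the 0.8 threshold, and the sample figures are assumptions, not values from Instaclustr or Redis.

```python
def resources_under_pressure(
    utilization: dict[str, float], threshold: float = 0.8
) -> list[str]:
    """Return resources at or above the threshold, most constrained first.

    utilization maps a resource name to the fraction of capacity in use (0.0 to 1.0).
    """
    hot = [(name, used) for name, used in utilization.items() if used >= threshold]
    hot.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in hot]


# Hypothetical peak utilization figures gathered from monitoring.
sample = {"memory": 0.91, "cpu": 0.35, "disk": 0.82, "network": 0.40}
print(resources_under_pressure(sample))  # ['memory', 'disk']
```

In this made-up sample, memory is the most constrained resource, so an instance size with more RAM would be the first thing to look for.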

4. Scaling Redis Horizontally

Horizontal scaling means “scaling out” or adding more servers to your Redis clusters.

While Redis can run on a single node, Instaclustr Redis clusters always have a minimum of 3 primary (or master) nodes. We also recommend that for a production cluster you have a replication factor of 1. This means that for each primary node, there is 1 replica node. Because our Redis clusters are set up in cluster mode from the beginning, scaling out is relatively simple. In fact, we automate it all for you; just say how many nodes you would like to add, and we’ll add them.

If you are running your own Redis cluster there are a few things to consider when scaling horizontally:

- Whether your Redis instance is already running in cluster mode. Generally, if there is only 1 Redis server, it will not be running in cluster mode. It is possible to convert an existing deployment by enabling cluster mode and joining the nodes with the CLUSTER MEET command.

- Both horizontal scaling and vertical scaling have additional cost, so they must be weighed up against each other.

- If you are going from single-node Redis to cluster mode, then you may need to change your client connection. If there is code connecting to the Redis cluster, it may need to be configured or modified to keep track of the Redis cluster topology. This includes setting your partitioning strategy; see the documentation of your client library for more detail.
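To illustrate why a cluster-aware client is needed, the sketch below shows how Redis Cluster assigns a key to one of its 16384 hash slots: it takes the CRC16 (XMODEM variant) of the key modulo 16384, hashing only the text inside the first non-empty `{...}` hash tag if one is present. The function name `key_slot` is a hypothetical helper; real client libraries perform this calculation for you.

```python
import binascii

HASH_SLOTS = 16384  # fixed number of hash slots in Redis Cluster


def key_slot(key: str) -> int:
    """Compute the Redis Cluster hash slot for a key."""
    data = key.encode("utf-8")
    start = data.find(b"{")
    if start != -1:
        end = data.find(b"}", start + 1)
        # Only a non-empty tag between the first "{" and the next "}" is used.
        if end != -1 and end != start + 1:
            data = data[start + 1 : end]
    # binascii.crc_hqx with an initial value of 0 is the CRC16 XMODEM variant.
    return binascii.crc_hqx(data, 0) % HASH_SLOTS


# Keys sharing a hash tag land in the same slot, and so on the same node.
print(key_slot("{user1000}.following") == key_slot("{user1000}.followers"))  # True
```

Because each primary node owns a subset of the slots, a client has to know the current slot-to-node mapping in order to send each command to the right server.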

Understanding your reason for scaling and your use case will go a long way to answering what kind of scaling your Redis cluster will benefit from.

5. Scaling Redis With Instaclustr

As a managed service provider, Instaclustr can manage your Redis scaling for you. Our TechOps engineers can help you understand the best kind of scaling for your circumstance. If you feel confident, you can also take advantage of our automations available for scaling in the console/Terraform/API.

Resize/Vertical Scaling

This can be achieved through the console, API, or Terraform. For the purposes of this blog, we’ll walk through how to do this via the console.

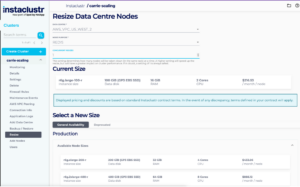

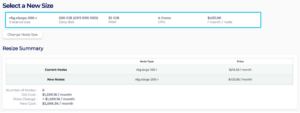

In this example we have a Redis 7.0.12 cluster running with 3 masters and 3 replicas. These nodes are running on an r6g.large-100-r node size. We have decided that we would like to upgrade them to the next Instaclustr node size up, the r6g.xlarge-200-r. The differences between the two node sizes can be seen below.

To scale this cluster vertically, we log into the console and in the left-hand side menu we pick “Resize”.

On the “Resize Data Centre Nodes” page, we first need to choose a “Concurrent Resize” value. To be safe we choose 1, which means that Instaclustr will resize only 1 node at a time. We then select our new node size, review the cost, and click “Resize Data Centre”.

Add Nodes/Horizontal Scaling

This can be achieved through the console, API, or Terraform. For the purposes of this blog, we’ll walk through how to do this via the console.

In this example we have a Redis 7.0.12 cluster running with 3 masters and 3 replicas. These nodes are running on an r6g.large-100-r node size.

6. Cost Considerations

Unlike other aspects of Redis scaling (like understanding metrics and load planning), cost considerations are easy to understand. The cost of scaling can vary, so it is worth looking into your specific scenario.

In the following example we have a 3 node Redis cluster with no replicas:

Initial Cost:

- Each node costs $216.33 USD per month

- Total cost = 3 nodes x $216.33 USD = $648.99 USD per month

Scaling Horizontally

- Adding 2 nodes at the same size.

- New total cost = 5 nodes x $216.33 USD = $1081.65 USD per month

Scaling Vertically

- Adding resources to the existing 3 nodes.

- Each larger node now costs $433.06 USD per month

- New total cost = 3 nodes x $433.06 USD = $1299.18 USD per month

However, we also need to consider the total resources we have added.

Looking at the same scenarios as above:

- Initially, 3 nodes each with

  - 100 GiB Storage

  - 16 GiB RAM

  - 2 Cores

  - Total: 300 GiB Storage, 48 GiB RAM, 6 Cores

- Horizontally, 5 nodes each with

  - 100 GiB Storage

  - 16 GiB RAM

  - 2 Cores

  - Total: 500 GiB Storage, 80 GiB RAM, 10 Cores

- Vertically, 3 larger nodes each with

  - 200 GiB Storage

  - 32 GiB RAM

  - 4 Cores

  - Total: 600 GiB Storage, 96 GiB RAM, 12 Cores

We can see that in this scenario scaling vertically costs us more, but we do end up with more resources. It is important to evaluate not just the cost, but also the total infrastructure included in that cost.
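The comparison above can be reproduced with a few lines of arithmetic. The sketch below uses the node counts, prices, and RAM sizes from this example; the cost per GiB of RAM is an extra illustrative metric that is not part of the original figures, and the names `Scenario` and `summarize` are hypothetical.

```python
from decimal import Decimal
from typing import NamedTuple


class Scenario(NamedTuple):
    nodes: int
    price_per_node: Decimal  # USD per node per month
    ram_gib_per_node: int


def summarize(s: Scenario) -> tuple[Decimal, int, Decimal]:
    """Return (monthly cost, total RAM in GiB, monthly cost per GiB of RAM)."""
    total_cost = s.nodes * s.price_per_node
    total_ram = s.nodes * s.ram_gib_per_node
    per_gib = (total_cost / total_ram).quantize(Decimal("0.01"))
    return total_cost, total_ram, per_gib


scenarios = {
    "initial": Scenario(3, Decimal("216.33"), 16),
    "horizontal": Scenario(5, Decimal("216.33"), 16),
    "vertical": Scenario(3, Decimal("433.06"), 32),
}

for name, scenario in scenarios.items():
    cost, ram, per_gib = summarize(scenario)
    print(f"{name}: ${cost} per month, {ram} GiB RAM, ${per_gib} per GiB")
```

On these figures the cost per GiB of RAM is almost identical in both directions (about $13.52 horizontally and $13.53 vertically), so the choice depends more on how much capacity you need and how you want to operate it than on unit price.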

7. Best Practices for Scaling Redis

Whether to scale horizontally or vertically will ultimately come down to your specific scenario. We recommend understanding the following to manage your scaling needs proactively:

- Redis Metrics: Understand how close to the limits your cluster is.

- Use Case: Is your cluster under load at certain times? Do you have expected growth? Can you change your data model to cope better?

- Cost: Costs for scaling either way will vary.

- Planning: Understanding your expected load against the cluster, not only yesterday but in the future, will allow you to scale before you hit resourcing limits. Being proactive rather than reactive always makes life easier for everyone.

- Complexity: Managing big, distributed data can get complex. This is where Instaclustr’s Managed Platform can manage the complexity of running Redis while you focus on your applications.

Scaling is a critical part of running and optimizing your Redis deployment. Instaclustr can monitor your Redis cluster to help you understand if and when scaling is required. For help doing this, check out our open source tool for collecting Redis metrics.

Once it comes time to scale, our easy-to-use platform makes it a breeze to scale vertically or horizontally.

Ready to get started? Contact us to spin up a cluster for free in minutes, or have Instaclustr help you manage your Redis cluster.