k-NN Plugin

What is it

The k-NN Plugin extends OpenSearch by adding support for a vector data type. This allows for a new search which utilises the k-Nearest Neighbors algorithm. That is, providing a vector as a search input, OpenSearch can return a number (k) of nearest vectors in an index. This adds many more use cases for performing categorisation with OpenSearch when the data can be converted to a vector.

How to provision

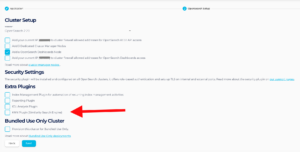

The k-NN can be selected as a plugin. In the console just select the checkbox in the plugin section.

Native Libraries vs Apache Lucene

The k-NN plugin has multiple options for the internal representation of the vectors. The 2.x visions support an Apache Lucene native indexing utilising Lucene 9 features. This is the recommended choice as it is more natural for OpenSearch Architecture. Alternative there are 2 native libraries which build out data structures in memory outside of the JVM. These can provide performance benefits and give more control of the internals at the cost of additional complexity in regard to memory management.

You specify the engine at indexing time. Here is an example for using Apache Lucene as the engine.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 |

PUT /demo_index { 'settings': { 'index': { 'number_of_shards': 6, "number_of_replicas": 1, "knn": True } }, "mappings": { "properties": { "my_vector": { "type": "knn_vector", "dimension": 64, "method": { "name":"hnsw", "engine":"lucene" } }, "token": { "type" : "keyword" } } } } |

Pointers and Tips

Size Calculation

If choosing to use the native libraries additional thought needs to be given to the size of memory needed for your nodes. Your cluster will be configured with 50% of RAM being allocated to OpenSearch. The memory cache used by nativen libraries takes another 25% of the RAM. So memory is even more important for these clusters. There are formulas for the rough estimate for both the HNSW and IVF approaches (See doco).

HNSW

where M = The number of bidirectional links (See doco)

|

1 |

1.1 * (4 * dimension + 8 * M) * num_vectors. |

IVF

where nlist = Number of buckets to partition vectors into (See doco).

|

1 |

<span style="font-weight: 400;">1.1 * (((4 * dimension) * num_vectors) + (4 * nlist * d))</span><span style="font-weight: 400;"> bytes.</span> |

Stats

The stats call is your friend in giving you insights into the behaviour of your indices with k-NN, if you are using the native library engines. You can see which native libraries are active and how much memory your indexes are using. The memory usage percentage can tell you what amount of the memory cache is used per index. Combine this with shard count on the node to work out the per shard cost.

|

1 |

GET /_plugins/_knn/stats |

Result

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 |

{ "_nodes" : { "total" : 4, "successful" : 4, "failed" : 0 }, "cluster_name" : "rob_os_knn_test", "circuit_breaker_triggered" : false, "model_index_status" : null, "nodes" : { "v_5wuwjBSAuJD2xOUYB8AQ" : { "graph_memory_usage_percentage" : 72.8006, "graph_query_requests" : 0, "graph_memory_usage" : 692440, "cache_capacity_reached" : false, "load_success_count" : 15, "training_memory_usage" : 0, "indices_in_cache" : { "another_demo_knn_index" : { "graph_memory_usage" : 230754, "graph_memory_usage_percentage" : 24.26063, "graph_count" : 5 }, "demo_knn" : { "graph_memory_usage" : 461686, "graph_memory_usage_percentage" : 48.53997, "graph_count" : 10 } }, "script_query_errors" : 0, "hit_count" : 0, "knn_query_requests" : 1, "total_load_time" : 3462148913, "miss_count" : 15, "training_memory_usage_percentage" : 0.0, "graph_index_requests" : 0, "faiss_initialized" : true, "load_exception_count" : 0, "training_errors" : 0, "eviction_count" : 0, "nmslib_initialized" : false, "script_compilations" : 0, "script_query_requests" : 0, "graph_query_errors" : 0, "indexing_from_model_degraded" : false, "graph_index_errors" : 0, "training_requests" : 0, "script_compilation_errors" : 0 }, …… … } } } |

Warming k-NN Indices

If using native libraries, graphs need to be built in memory for a shard before it can return search results. This can cause lag which can be prevented by warming revenant indices. It is important to warm an index before calculating how much memory it will use. It is also important to consider that inactive shards can be removed from memory under a breaker condition or idle setting for the memory cache.

|

1 |

GET /_plugins/_knn/warmup/index1,index2,index3?pretty |

Result

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

{ "_shards" : { "total" : 6, "successful" : 6, "failed" : 0 } } |

Refresh Interval

The index refresh intervals can have a big impact on both indexing and search performance of the k-NN plugin. Queries are per segments so small segments created with low refresh intervals can lead to added search latency. Disabling the refresh entirely will speed up indexing in general. So it is recommended to turn off where possible, such as during a bulk load of indices using the vectors data type.

Turn Off

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

PUT /demo_knn/_settings { "settings": { "index": { "refresh_interval" : "-1" } } } |

Result

|

1 2 3 4 5 |

{ "acknowledged" : true } |

Turn back on for 60 second interval

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

PUT /demo_knn/_settings { "settings": { "index": { "refresh_interval" : "60s" } } } |

Result

|

1 2 3 4 5 |

{ "acknowledged" : true } |

Replicas

Disabling replicas during a bulk load will speed up indexing time. You can then add additional replicas once the load is complete. Each replica adds to the memory cost, so be careful when adding them while using the native libraries as your indexing engine. You can use the warmup and then stats calls mentioned earlier to see if you have enough memory to add replicas.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

PUT /demo_knn/_settings { "settings": { "index": { "number_of_replicas": 2 } } } |

Result

|

1 2 3 4 5 |

<span style="font-weight: 400;">{</span> <span style="font-weight: 400;"> "acknowledged" : true</span> <span style="font-weight: 400;">}</span> |

Further Reading

More documentation on these and other aspects of the k-NN plugin can be found in the OpenSearch projects documentation.